Accelerating the Super-Resolution Convolutional Neural Network

Chao Dong, Chen Change Loy, Xiaoou Tang

Department of Information Engineering, The Chinese University of Hong Kong

dongchao@sensetime.com, {ccloy, xtang}@ie.cuhk.edu.hk

Abstract

As a successful deep model applied in image super-resolution (SR), the Super-Resolution Convolutional Neural Network (SRCNN) has demonstrated superior performance to previous hand-crafted models in both speed and restoration quality. However, its high computational cost still prevents its use in practical applications that demand real-time performance (24 fps). In this paper, we aim to accelerate the current SRCNN and propose a compact hourglass-shaped CNN structure for faster and better SR. We redesign the SRCNN structure in three main respects. First, we introduce a deconvolution layer at the end of the network, so that the mapping is learned directly from the original low-resolution image (without interpolation) to the high-resolution one. Second, we reformulate the mapping layer by shrinking the input feature dimension before mapping and expanding it back afterwards. Third, we adopt smaller filter sizes but more mapping layers. The proposed model achieves a speed-up of more than 40 times with even superior restoration quality. Further, we present parameter settings that achieve real-time performance on a generic CPU while still maintaining good restoration quality. A corresponding transfer strategy is also proposed for fast training and testing across different upscaling factors.
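The "more than 40 times" figure can be sanity-checked with a rough count of multiply-accumulate operations (MACs) per low-resolution pixel. This is a minimal sketch under stated assumptions: SRCNN-Ex is taken to be the 9-5-5 network with 64 and 32 filters, and FSRCNN is taken to use a 5×5 feature-extraction layer with d = 56 filters, a 1×1 shrinking layer to s = 12 channels, m = 4 mapping layers of 3×3, a 1×1 expanding layer and a 9×9 deconvolution. These settings come from the full papers, not from this page, and the function names are hypothetical. Biases and activations are ignored.

```python
# Rough multiply-accumulate (MAC) counts, ignoring biases and activations.
# Layer settings are assumptions taken from the full SRCNN/FSRCNN papers.


def conv_macs(k: int, c_in: int, c_out: int) -> int:
    """MACs per output pixel for a k x k convolution from c_in to c_out channels."""
    return k * k * c_in * c_out


def srcnn_cost_per_lr_pixel(scale: int, f1=9, f2=5, f3=5, n1=64, n2=32) -> int:
    # SRCNN runs on the bicubic-upscaled image, so each LR pixel stands for
    # scale**2 HR pixels, and every one of them passes through all three layers.
    per_hr_pixel = conv_macs(f1, 1, n1) + conv_macs(f2, n1, n2) + conv_macs(f3, n2, 1)
    return per_hr_pixel * scale ** 2


def fsrcnn_cost_per_lr_pixel(d=56, s=12, m=4) -> int:
    # All FSRCNN layers run at LR resolution. The stride-n 9x9 deconvolution
    # scatters one 9x9 patch per LR pixel, so it is also charged per LR pixel.
    return (conv_macs(5, 1, d)          # feature extraction
            + conv_macs(1, d, s)        # shrinking
            + m * conv_macs(3, s, s)    # m mapping layers
            + conv_macs(1, s, d)        # expanding
            + conv_macs(9, d, 1))       # deconvolution


for scale in (2, 3, 4):
    ratio = srcnn_cost_per_lr_pixel(scale) / fsrcnn_cost_per_lr_pixel()
    print(f"x{scale}: SRCNN-Ex / FSRCNN cost ratio = {ratio:.1f}")
```

At ×3 the count is 514,656 MACs for SRCNN-Ex versus 12,464 for FSRCNN, a ratio of about 41.3. That agrees with the claimed speed-up. It is only an operation count, so measured run times also depend on the implementation.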

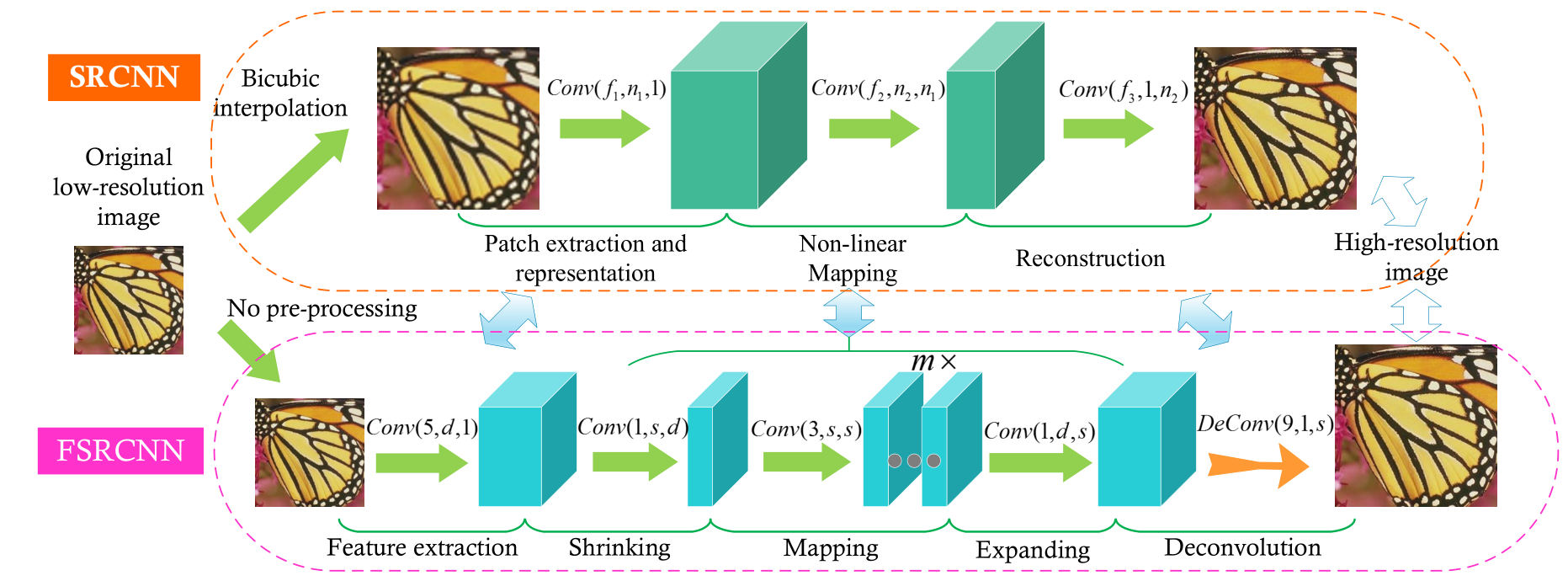

From SRCNN to FSRCNN

This figure shows the network structures of SRCNN and FSRCNN. The proposed FSRCNN differs from SRCNN in three main respects. First, FSRCNN takes the original low-resolution image as input without bicubic interpolation, and a deconvolution layer at the end of the network performs the upsampling. Second, the non-linear mapping step in SRCNN is replaced by three steps in FSRCNN: shrinking, mapping, and expanding. Third, FSRCNN adopts smaller filter sizes and a deeper network structure. Together, these changes give FSRCNN better restoration quality at a lower computational cost than SRCNN.
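The stages described above can be sketched as a NumPy forward pass over a low-resolution luminance image. The pass covers feature extraction, shrinking, mapping, expanding, and a final deconvolution that performs all of the upsampling. This sketch illustrates data flow and tensor shapes, not a trained model. The filter sizes (5×5, 1×1, 3×3, 1×1, 9×9) and the defaults d = 56, s = 12, m = 4 are assumptions taken from the full FSRCNN paper. The weights are random and biases are omitted. A fixed PReLU slope of 0.25 stands in for learned slopes. All helper names are hypothetical.

```python
import numpy as np


def conv2d_same(x, w):
    """Zero-padded 'same' cross-correlation.

    x: (c_in, H, W) feature maps; w: (c_out, c_in, k, k) filters with odd k.
    """
    c_out, _, k, _ = w.shape
    p = k // 2
    h, wd = x.shape[1:]
    xp = np.pad(x, ((0, 0), (p, p), (p, p)))
    out = np.zeros((c_out, h, wd))
    for i in range(k):
        for j in range(k):
            # Add the contribution of kernel tap (i, j) at every pixel at once.
            out += np.einsum("oc,chw->ohw", w[:, :, i, j], xp[:, i:i + h, j:j + wd])
    return out


def prelu(x, slope=0.25):
    # Fixed slope stands in for the learned PReLU parameters (assumption).
    return np.where(x > 0, x, slope * x)


def deconv2d(x, w, n):
    """Stride-n transposed convolution to one output channel.

    x: (c_in, H, W); w: (c_in, k, k) with k >= n. Returns an (n*H, n*W) map.
    """
    _, h, wd = x.shape
    k = w.shape[-1]
    full = np.zeros(((h - 1) * n + k, (wd - 1) * n + k))
    for r in range(h):
        for c in range(wd):
            # Each LR pixel scatters a k x k patch, weighted by its features.
            full[r * n:r * n + k, c * n:c * n + k] += np.einsum("c,ckl->kl", x[:, r, c], w)
    off = (k - n) // 2  # centre-crop to exactly n times the input size
    return full[off:off + n * h, off:off + n * wd]


def random_params(d=56, s=12, m=4, seed=0):
    """Hypothetical untrained weights with the assumed FSRCNN(d, s, m) shapes."""
    rng = np.random.default_rng(seed)

    def w(*shape):
        return rng.normal(0.0, 0.01, size=shape)

    return {
        "extract": w(d, 1, 5, 5),                  # 5x5 feature extraction, 1 -> d
        "shrink": w(s, d, 1, 1),                   # 1x1 shrinking, d -> s
        "map": [w(s, s, 3, 3) for _ in range(m)],  # m 3x3 mapping layers, s -> s
        "expand": w(d, s, 1, 1),                   # 1x1 expanding, s -> d
        "deconv": w(d, 9, 9),                      # 9x9 deconvolution, d -> 1
    }


def fsrcnn_forward(lr, params, n):
    """Run the sketch on an (H, W) LR luminance image; returns (n*H, n*W)."""
    x = prelu(conv2d_same(lr[None], params["extract"]))
    x = prelu(conv2d_same(x, params["shrink"]))
    for w in params["map"]:
        x = prelu(conv2d_same(x, w))
    x = prelu(conv2d_same(x, params["expand"]))
    return deconv2d(x, params["deconv"], n)  # upsampling happens only here


lr = np.random.default_rng(1).random((8, 10))
hr = fsrcnn_forward(lr, random_params(), n=3)
print(hr.shape)  # (24, 30)
```

Every convolution works on the 8×10 input, and only the last layer produces the 3× larger output. To check the scatter logic, use a k = n all-ones kernel. In that case `deconv2d` reduces to nearest-neighbour upsampling.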

Results

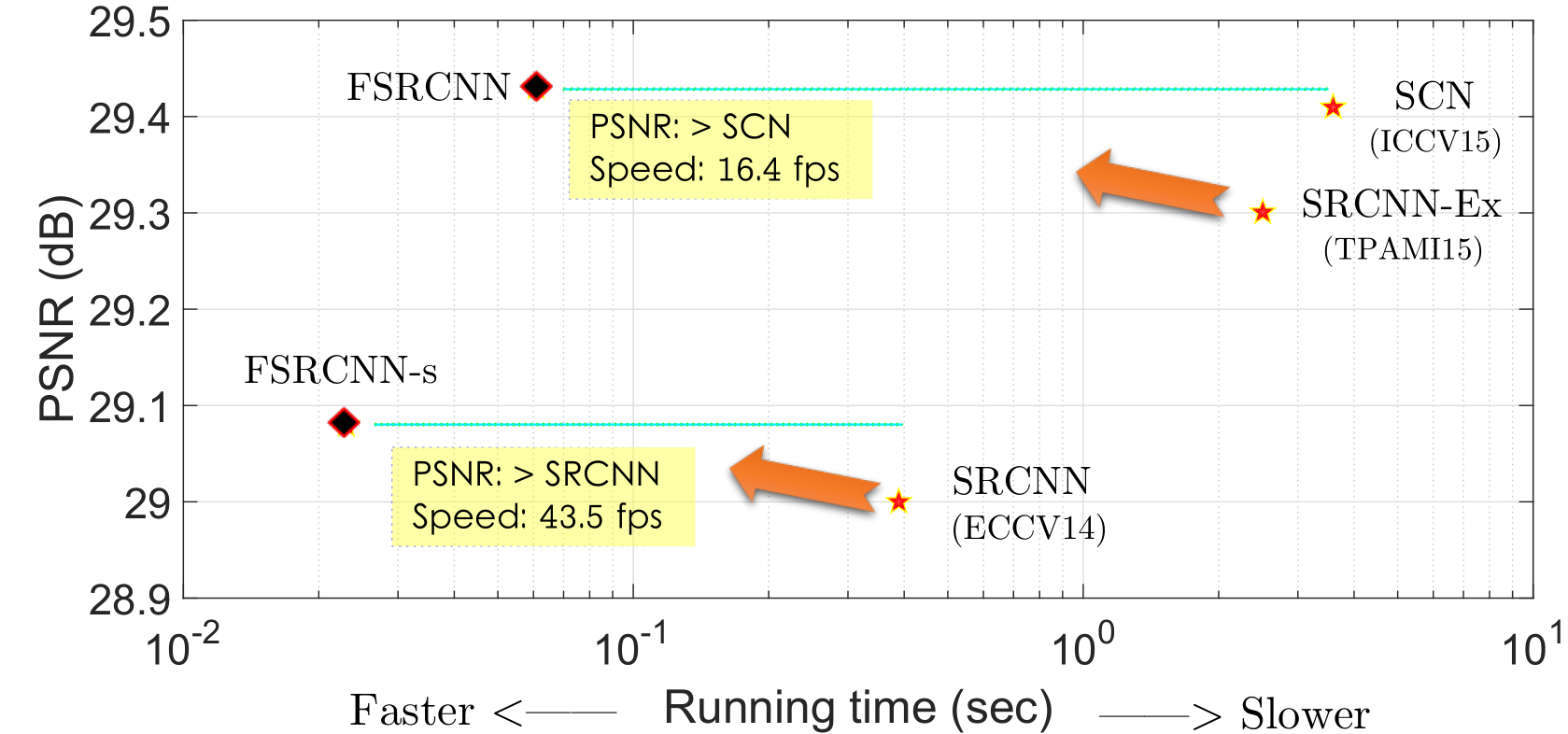

The proposed FSRCNN networks achieve better super-resolution quality than existing methods while being tens of times faster. In particular, FSRCNN-s runs in real time (>24 fps) on a generic CPU. The chart is based on the Set14 results summarized in the following table.

PSNR (dB) and test time (seconds on CPU) on three test datasets. We present the best results reported in the corresponding papers. The proposed FSRCNN and FSRCNN-s are trained on both the 91-image and General-100 datasets. More comparisons with other methods on PSNR, SSIM and IFC can be found in the supplementary file.

| Test dataset | Scaling factor | Bicubic PSNR / Time | SRCNN [1] PSNR / Time | SRCNN-Ex [2] PSNR / Time | SCN [3] PSNR / Time | FSRCNN-s PSNR / Time | FSRCNN PSNR / Time |
|---|---|---|---|---|---|---|---|
| Set5 | 2 | 33.66 / - | 36.34 / 0.18 | 36.66 / 1.3 | 36.93 / 0.94 | 36.58 / 0.024 | 37.00 / 0.068 |
| Set14 | 2 | 30.23 / - | 32.18 / 0.39 | 32.45 / 2.8 | 33.10 / 1.7 | 32.28 / 0.061 | 32.63 / 0.160 |
| B200 | 2 | 29.70 / - | 31.38 / 0.23 | 31.63 / 1.7 | 31.63 / 1.1 | 31.48 / 0.033 | 31.80 / 0.098 |
| Set5 | 3 | 30.39 / - | 32.39 / 0.18 | 32.75 / 1.3 | 33.10 / 1.8 | 32.61 / 0.010 | 33.16 / 0.027 |
| Set14 | 3 | 27.54 / - | 29.00 / 0.39 | 29.30 / 2.8 | 29.41 / 3.6 | 29.13 / 0.023 | 29.43 / 0.061 |
| B200 | 3 | 27.26 / - | 28.28 / 0.23 | 28.48 / 1.7 | 28.54 / 2.4 | 28.32 / 0.013 | 28.60 / 0.035 |
| Set5 | 4 | 28.42 / - | 30.09 / 0.18 | 30.49 / 1.3 | 30.86 / 1.2 | 30.11 / 0.0052 | 30.71 / 0.015 |
| Set14 | 4 | 26.00 / - | 27.20 / 0.39 | 27.50 / 2.8 | 27.64 / 2.3 | 27.19 / 0.0099 | 27.59 / 0.029 |
| B200 | 4 | 25.97 / - | 26.73 / 0.23 | 26.92 / 1.7 | 27.02 / 1.4 | 26.75 / 0.0072 | 26.98 / 0.019 |
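A few lines of arithmetic turn the Set14 timing columns into images per second and speed-ups over SRCNN-Ex. This is a minimal sketch that assumes each entry is the mean time per image. Under that assumption, "images per second" only approximates a video frame rate at Set14 image sizes. The times are copied from the table, and the names `SET14_TIMES`, `REAL_TIME_FPS` and `summarize` are illustrative.

```python
# Set14 test times in seconds on CPU, copied from the table above.
SET14_TIMES = {
    2: {"SRCNN": 0.39, "SRCNN-Ex": 2.8, "SCN": 1.7, "FSRCNN-s": 0.061, "FSRCNN": 0.160},
    3: {"SRCNN": 0.39, "SRCNN-Ex": 2.8, "SCN": 3.6, "FSRCNN-s": 0.023, "FSRCNN": 0.061},
    4: {"SRCNN": 0.39, "SRCNN-Ex": 2.8, "SCN": 2.3, "FSRCNN-s": 0.0099, "FSRCNN": 0.029},
}

REAL_TIME_FPS = 24  # real-time threshold quoted in the text


def summarize(times, baseline="SRCNN-Ex"):
    """Return {scale: {method: (images_per_second, speedup_over_baseline)}}."""
    summary = {}
    for scale, row in times.items():
        summary[scale] = {
            method: (1.0 / t, row[baseline] / t) for method, t in row.items()
        }
    return summary


for scale, row in summarize(SET14_TIMES).items():
    for method, (fps, speedup) in row.items():
        flag = "real-time" if fps > REAL_TIME_FPS else ""
        print(f"x{scale} {method:9s} {fps:7.1f} img/s {speedup:6.1f}x vs SRCNN-Ex {flag}")
```

For example, at ×3 FSRCNN is about 45.9 times faster than SRCNN-Ex on Set14 (2.8 s versus 0.061 s).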

[1] Dong, C., Loy, C.C., He, K., Tang, X.: Learning a deep convolutional network for image super-resolution. In: ECCV. (2014) 184–199.

[2] Dong, C., Loy, C.C., He, K., Tang, X.: Image super-resolution using deep convolutional networks. TPAMI 38(2) (2015) 295–307.

[3] Wang, Z., Liu, D., Yang, J., Han, W., Huang, T.: Deeply improved sparse coding for image super-resolution. In: ICCV. (2015) 370–378.