Yunxuan Zhang², Li Liu², Cheng Li², and Chen Change Loy¹

¹Department of Information Engineering, The Chinese University of Hong Kong; ²SenseTime Group Limited

British Machine Vision Conference (BMVC) 2017, London, UK


Abstract

We introduce a novel approach for annotating large quantities of in-the-wild facial images with high-quality posterior age distributions as labels. Each posterior provides a probability distribution over the estimated ages of a face. Our approach is motivated by the observation that it is easier to judge which of two people is older than to determine a person's actual age. Given a reference database of samples with known ages and a dataset to be labeled, we can transfer reliable annotations from the former to the latter via human-in-the-loop comparisons. We show an effective way to transform such comparisons into posteriors via fully-connected and softmax layers, which permits end-to-end training in a deep network. Thanks to this efficient and effective annotation approach, we collect a new large-scale facial age dataset, dubbed ‘MegaAge’, which consists of 41,941 images. With this dataset, we train a network that jointly performs ordinal hyperplane classification and posterior distribution learning. Our approach achieves state-of-the-art results on popular benchmarks such as MORPH2 and Adience, as well as on the newly proposed MegaAge.
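The comparison-to-posterior step can be illustrated with a small worked sketch. It rests on assumptions that are ours, not the paper's: the answers are conditionally independent given the true age, the prior over ages is uniform, and the probability that a face of true age k is judged older than a reference of age a_i follows a hypothetical logistic curve σ((k − a_i)/s) with an arbitrary scale s. Under these assumptions the log-posterior is linear in the binary answers r_i, because log P(r_i | k) = r_i · logit(p_ik) + log(1 − p_ik). The posterior is therefore exactly softmax(Wr + b), i.e. a fully-connected layer followed by a softmax. The code computes the posterior both ways; all function names and numbers are hypothetical.

```python
import math


def log_sigmoid(x):
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def softmax(z):
    """Softmax with max-subtraction for numerical stability."""
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def posterior_bayes(answers, ref_ages, ages, scale=3.0):
    """Direct Bayes rule with a uniform prior: P(a=k | r) ∝ prod_i P(r_i | a=k).
    ASSUMPTION (hypothetical likelihood): P(r_i = 1 | a = k) = sigmoid((k - ref_ages[i]) / scale),
    where r_i = 1 means the face was judged older than reference i."""
    log_post = []
    for k in ages:
        lp = 0.0
        for r, ref in zip(answers, ref_ages):
            x = (k - ref) / scale
            lp += log_sigmoid(x) if r == 1 else log_sigmoid(-x)
        log_post.append(lp)
    return softmax(log_post)


def fc_params(ref_ages, ages, scale=3.0):
    """FC weights for the same hypothetical likelihood:
    W[k][i] = logit(p_ik) = (k - ref_i) / scale and b[k] = sum_i log(1 - p_ik)."""
    W = [[(k - ref) / scale for ref in ref_ages] for k in ages]
    b = [sum(log_sigmoid(-(k - ref) / scale) for ref in ref_ages) for k in ages]
    return W, b


def posterior_fc(answers, W, b):
    """Fully-connected layer followed by softmax: softmax(W r + b)."""
    logits = [sum(w * r for w, r in zip(row, answers)) + bk for row, bk in zip(W, b)]
    return softmax(logits)


# Hypothetical example: one face compared against six reference faces.
ages = list(range(81))                # candidate ages 0..80
ref_ages = [10, 20, 30, 40, 50, 60]   # known ages of the reference faces
answers = [1, 1, 1, 0, 0, 0]          # judged older than 10/20/30, younger than 40/50/60

p_bayes = posterior_bayes(answers, ref_ages, ages)
W, b = fc_params(ref_ages, ages)
p_fc = posterior_fc(answers, W, b)
most_likely = ages[max(range(len(p_fc)), key=p_fc.__getitem__)]
print(most_likely)  # 35
```

Both functions return the same distribution; for these toy answers it peaks at age 35, midway between the oldest reference the face was judged older than (30) and the youngest it was judged younger than (40). In a trained network, W and b would be learned rather than derived from a fixed likelihood.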

MegaAge Dataset

We introduce a new large-scale MegaAge dataset, which consists of 41,941 faces annotated with age posterior distributions. We also provide the MegaAge-Asian dataset, which consists only of Asian faces (40,000 face images).

You can download the MegaAge dataset from this link, and the MegaAge-Asian dataset from this link.

Note

You must comply with the following agreement to download or use the dataset:

- The MegaAge/MegaAge-Asian database is available for non-commercial research purposes only.

- All images in the MegaAge/MegaAge-Asian database are obtained from the Internet and are not the property of MMLAB, The Chinese University of Hong Kong or SenseTime. MMLAB and SenseTime are not responsible for the content or the meaning of these images.

- You agree not to reproduce, duplicate, copy, sell, trade, resell or exploit, for any commercial purposes, any portion of the images or any portion of derived data.

- You agree not to further copy, publish or distribute any portion of the MegaAge database, except that copies of the database may be made for internal use at a single site within the same organization.

- MMLAB and SenseTime reserve the right to terminate your access to the database at any time.

- All submitted papers or any publicly available text using the MegaAge/MegaAge-Asian database must cite the following paper:

Yunxuan Zhang, Li Liu, Cheng Li, and Chen Change Loy. Quantifying Facial Age by Posterior of Age Comparisons. In British Machine Vision Conference (BMVC), 2017.

Methodology

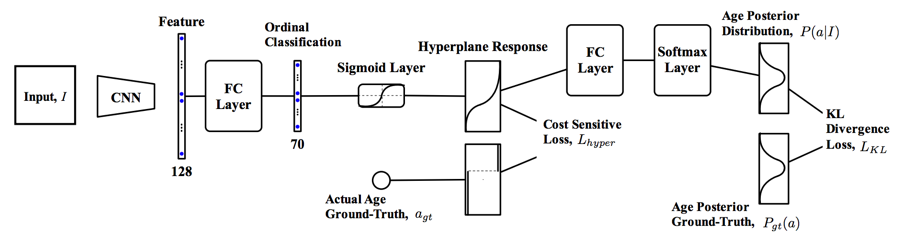

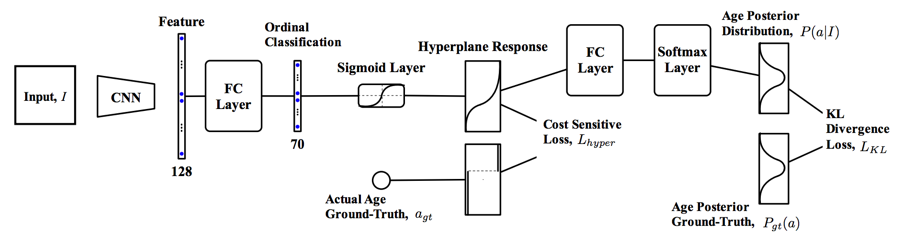

We present a new deep convolutional network architecture that learns from age posterior distributions. Our network includes an ordinal hyperplane module that captures ordinal information. Unlike existing ordinal hyperplane methods, our network further maps the responses of this module to an age posterior distribution. We show that this mapping can be accomplished with a fully-connected layer followed by a softmax layer. The proposed network is unique in that it can be trained jointly with two losses: the cost-sensitive loss that is typically used to learn an ordinal hyperplanes ranker, and a Kullback-Leibler (KL) divergence loss that supervises the learning of the posterior distribution.
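A minimal sketch of the two-head design described above, written in plain Python with no deep-learning framework and showing only the forward computation and the two loss terms, not training. Everything concrete is an assumption: the ordinal module is modelled as K − 1 hypothetical linear hyperplanes with sigmoid outputs o_k ≈ P(age > k), the per-task weight 1 + |k − age| stands in for whatever cost the paper's cost-sensitive loss uses, the FC weights are arbitrary placeholders for learned parameters, and the trade-off weight `lam` is hypothetical. All function names are illustrative.

```python
import math


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def softmax(z):
    """Softmax with max-subtraction for numerical stability."""
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def ordinal_responses(features, hyperplanes, biases):
    """Ordinal hyperplane module: K-1 binary classifiers, o[k] ~ P(age > k).
    ASSUMPTION: each classifier is a plain linear hyperplane on a feature vector."""
    return [sigmoid(sum(w * f for w, f in zip(wk, features)) + bk)
            for wk, bk in zip(hyperplanes, biases)]


def posterior_head(o, W, b):
    """Map the K-1 ordinal responses to a K-way age posterior: softmax(W o + b)."""
    logits = [sum(w * x for w, x in zip(row, o)) + bk for row, bk in zip(W, b)]
    return softmax(logits)


def cost_sensitive_loss(o, age, eps=1e-12):
    """Cost-weighted binary cross-entropy over the K-1 ordinal tasks.
    ASSUMPTION: the weight 1 + |k - age| is a hypothetical cost choice."""
    total = 0.0
    for k, ok in enumerate(o):
        y = 1.0 if age > k else 0.0   # binary target for task k: is age > k?
        cost = 1.0 + abs(k - age)
        total -= cost * (y * math.log(ok + eps) + (1.0 - y) * math.log(1.0 - ok + eps))
    return total


def kl_divergence(q, p, eps=1e-12):
    """KL(q || p) between the target posterior q and the predicted posterior p."""
    return sum(qi * math.log(qi / max(pi, eps)) for qi, pi in zip(q, p) if qi > 0)


def joint_loss(o, p, age, q, lam=1.0):
    """Weighted sum of the two losses; lam is an assumed trade-off weight."""
    return cost_sensitive_loss(o, age) + lam * kl_divergence(q, p)


# Hypothetical toy setting: ages 0..4 (K = 5), so 4 ordinal classifiers.
features = [0.8, -0.2]
hyperplanes = [[1.0, 0.5]] * 4
biases = [1.5, 0.5, -0.5, -1.5]
W = [[2.0 if j < k else -2.0 for j in range(4)] for k in range(5)]  # placeholder FC weights
b = [0.0] * 5

o = ordinal_responses(features, hyperplanes, biases)
p = posterior_head(o, W, b)
q = [0.05, 0.2, 0.5, 0.2, 0.05]   # hypothetical target posterior label
loss = joint_loss(o, p, age=2, q=q, lam=0.5)
```

Keeping the ordinal responses as the input to the posterior head means both losses back-propagate into the same module, which is the sense in which the two objectives are trained jointly.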

Citation

@inproceedings{zhang2017quantifying,
  author = {Yunxuan Zhang and Li Liu and Cheng Li and Chen Change Loy},
  title = {Quantifying Facial Age by Posterior of Age Comparisons},
  booktitle = {British Machine Vision Conference (BMVC)},
  year = {2017}
}