Pedestrian Color Naming via Convolutional Neural Network

Zhiyi Cheng, Xiaoxiao Li, and Chen Change Loy

Department of Information Engineering, The Chinese University of Hong Kong

The 13th Asian Conference on Computer Vision (ACCV'16)

Color serves as an important cue for many computer vision tasks. Nevertheless, obtaining an accurate color description from images is non-trivial due to varying illumination conditions, view angles, and surface reflectance. This is especially true for the challenging problem of pedestrian description in public spaces. We make two contributions in this study: (1) We contribute a large-scale pedestrian color naming dataset with 14,213 hand-labeled images. (2) We address the problem of assigning consistent color names to regions of a single object's surface. We propose an end-to-end, pixel-to-pixel convolutional neural network (CNN) for pedestrian color naming. We demonstrate that our Pedestrian Color Naming CNN (PCN-CNN) is superior to existing approaches in providing consistent color names on real-world pedestrian images. In addition, we show the effectiveness of the color descriptor extracted from PCN-CNN in complementing existing descriptors for the task of person re-identification. Moreover, we discuss a novel application: retrieving outfit matches and fashion items (which can be difficult to describe with keywords) from just a user-provided color sketch.

To facilitate the learning and evaluation of pedestrian color naming, we build a new large-scale dataset, named the Pedestrian Color Naming (PCN) dataset, which contains 14,213 images, each hand-labeled with a color label for every pixel. All images in the PCN dataset are obtained from the Market-1501 dataset [1].

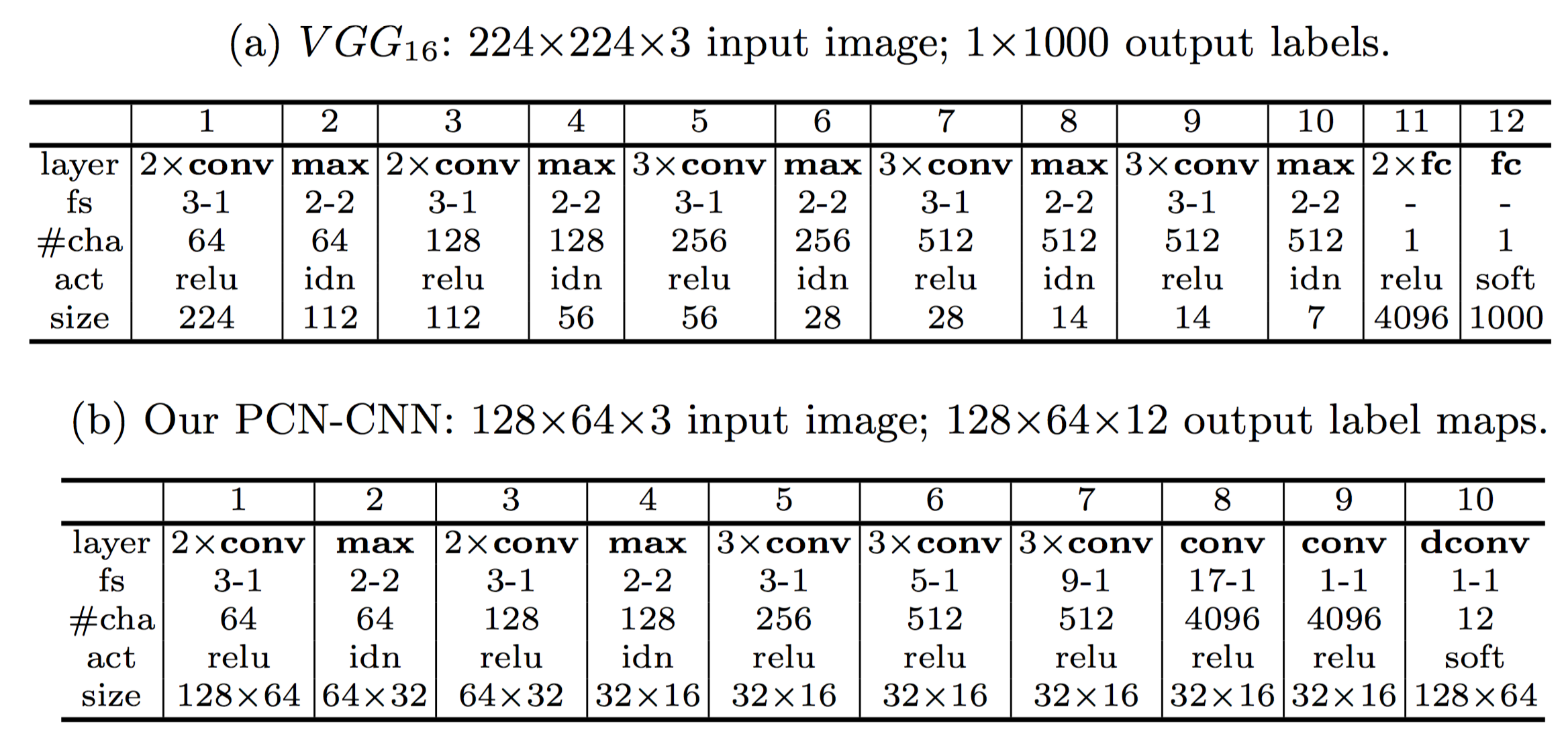

We approach this problem in a general conditional random field (CRF) framework, with the unary potentials generated by a deep convolutional network. We base our architecture on the VGG16 network [2], modified to fit our low-resolution inputs.
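As a rough illustration of what "unary potentials generated by a network" means, the sketch below turns per-pixel class scores into unary potentials (negative log-probabilities) and evaluates the energy of a labeling. It is a minimal sketch under stated assumptions, not the PCN-CNN implementation: the scores are random stand-ins for network outputs, the number of color classes (11) is assumed, and the Potts pairwise term on 4-connected neighbors is a generic placeholder for whatever pairwise potential the actual model uses. The function names are hypothetical.

```python
import numpy as np

def unary_potentials(logits):
    """Convert per-pixel class scores of shape (H, W, C) into unary potentials.

    The unary potential of a label is its negative log-probability
    under a softmax over the class axis.
    """
    shifted = logits - logits.max(axis=-1, keepdims=True)  # numerical stability
    log_prob = shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))
    return -log_prob

def potts_energy(labels, unary, weight=1.0):
    """Energy of a labeling: unary terms plus a Potts penalty on 4-neighbors.

    The Potts term is an assumption made for illustration only.
    """
    h, w = labels.shape
    rows, cols = np.indices((h, w))
    unary_term = unary[rows, cols, labels].sum()
    # Count label disagreements between vertical and horizontal neighbors.
    disagreements = ((labels[1:, :] != labels[:-1, :]).sum()
                     + (labels[:, 1:] != labels[:, :-1]).sum())
    return float(unary_term + weight * disagreements)

# Random scores stand in for the network output (assumed: 11 color classes).
rng = np.random.default_rng(0)
logits = rng.normal(size=(8, 4, 11))
unary = unary_potentials(logits)
labels = unary.argmin(axis=-1)  # labeling that minimizes the unary term alone
```

With `weight=0` the energy reduces to the unary term, so the per-pixel `argmin` is optimal; a positive weight penalizes label changes between neighboring pixels, which is what encourages consistent color names within a region.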

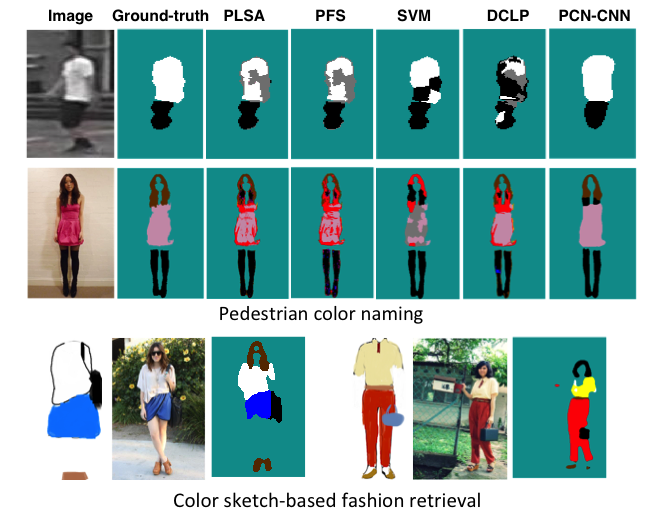

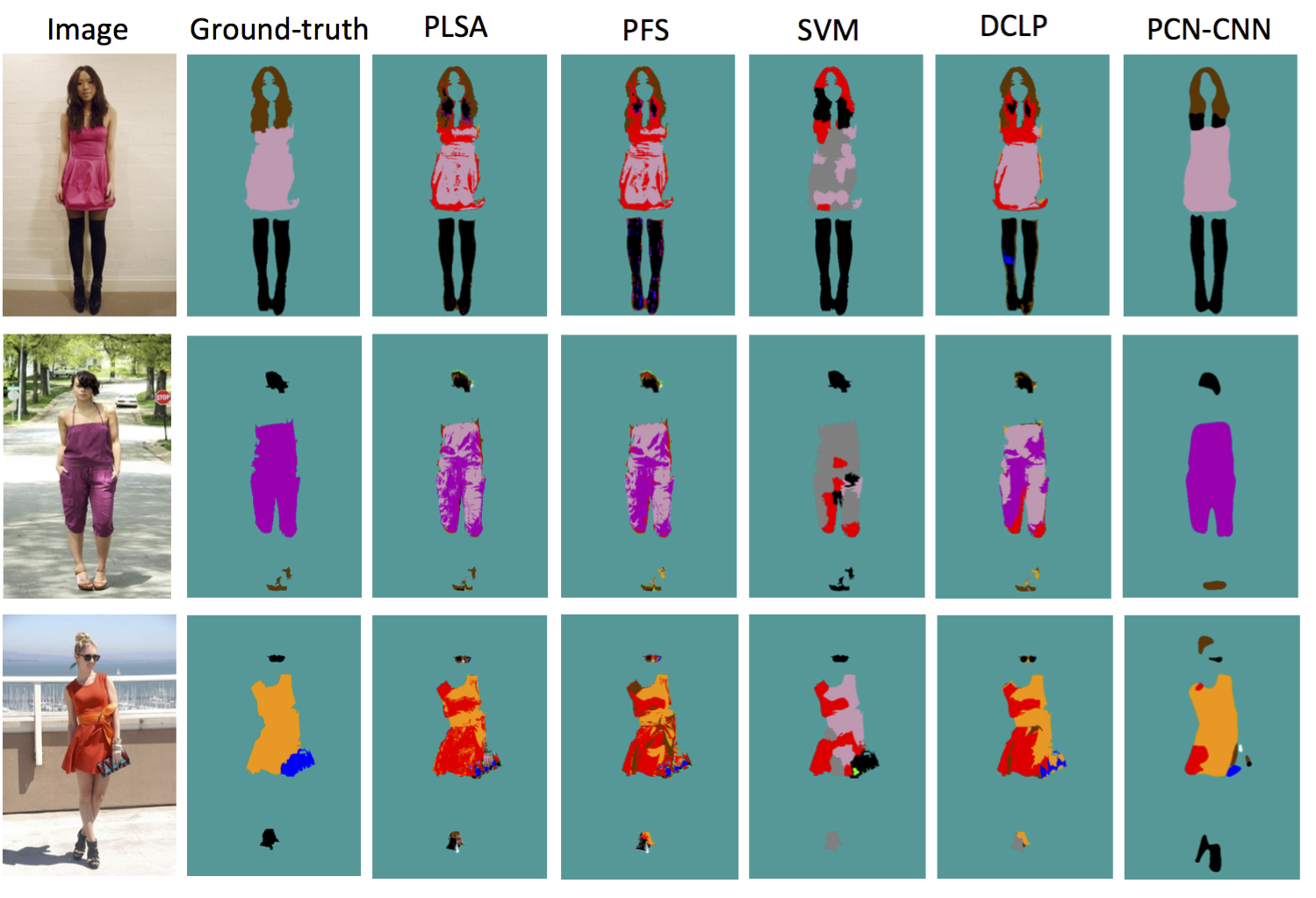

Qualitative results:

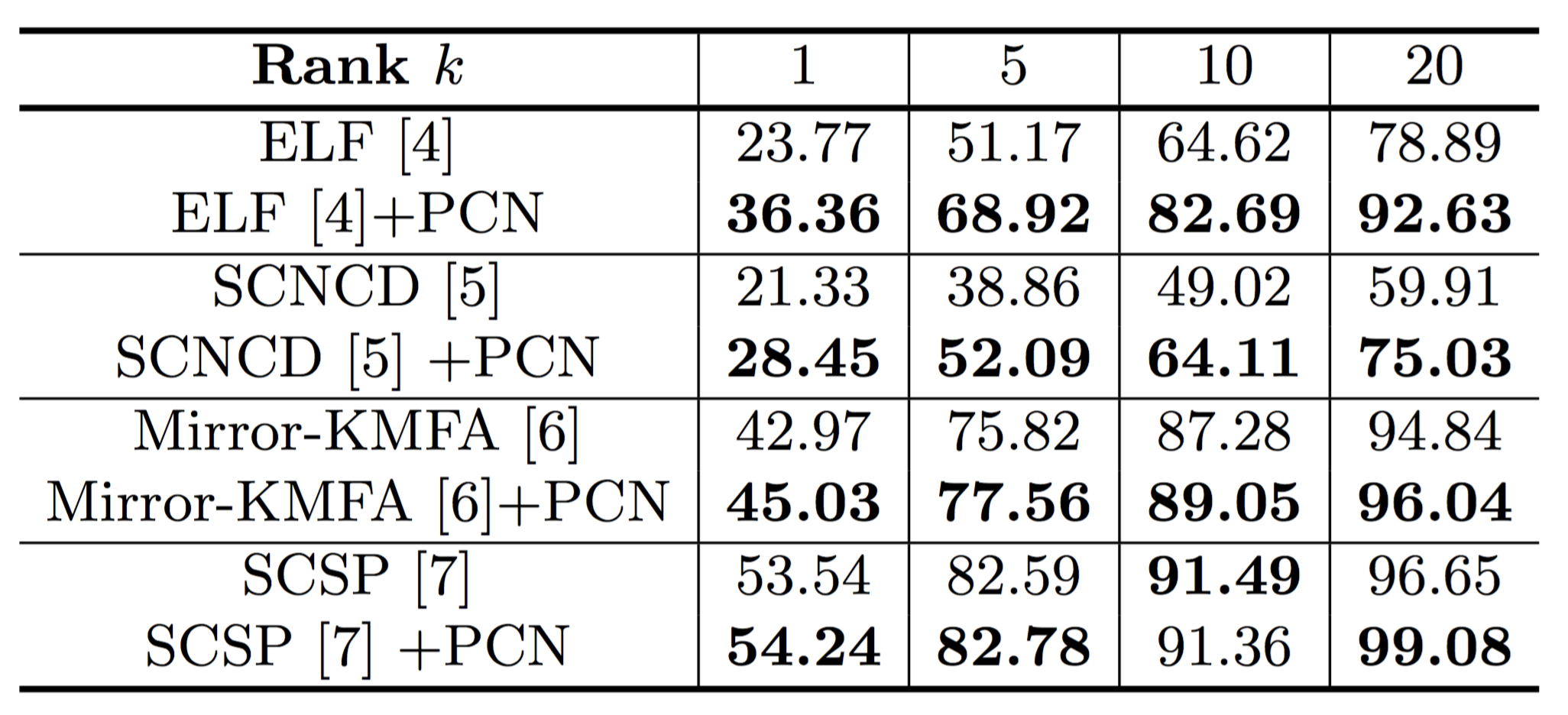

To explore the effectiveness of our method, we combine the region-level color names generated by PCN-CNN with several existing visual descriptors for the task of person re-identification, and evaluate the performance on the widely used VIPeR dataset [3].
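One plausible way to turn a pixel-wise color-name map into a region-level descriptor and combine it with another descriptor is sketched below. The details are assumptions made for illustration, not the procedure used in the paper: regions are taken to be horizontal stripes, each region is summarized by a normalized histogram of color labels, and fusion is a simple concatenation of L2-normalized vectors. The function names and the parameters `num_colors` and `num_stripes` are hypothetical.

```python
import numpy as np

def stripe_color_histograms(label_map, num_colors, num_stripes):
    """Concatenate per-stripe normalized histograms of color-name labels.

    label_map: integer array of shape (H, W) with values in [0, num_colors).
    """
    stripes = np.array_split(label_map, num_stripes, axis=0)
    feats = []
    for stripe in stripes:
        hist = np.bincount(stripe.ravel(), minlength=num_colors).astype(np.float64)
        feats.append(hist / max(hist.sum(), 1.0))  # guard against empty stripes
    return np.concatenate(feats)

def fuse(color_feat, other_feat):
    """L2-normalize each descriptor, then concatenate them."""
    def l2(v):
        norm = np.linalg.norm(v)
        return v / norm if norm > 0 else v
    return np.concatenate([l2(color_feat), l2(other_feat)])

# Toy label map: upper half labeled 0, lower half labeled 1.
label_map = np.array([[0, 0], [0, 0], [0, 0], [1, 1], [1, 1], [1, 1]])
color_feat = stripe_color_histograms(label_map, num_colors=3, num_stripes=2)
fused = fuse(color_feat, np.ones(4))  # np.ones(4) stands in for another descriptor
```

Normalizing each descriptor before concatenation keeps one from dominating the distance purely because of its scale; a learned metric could replace this step.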

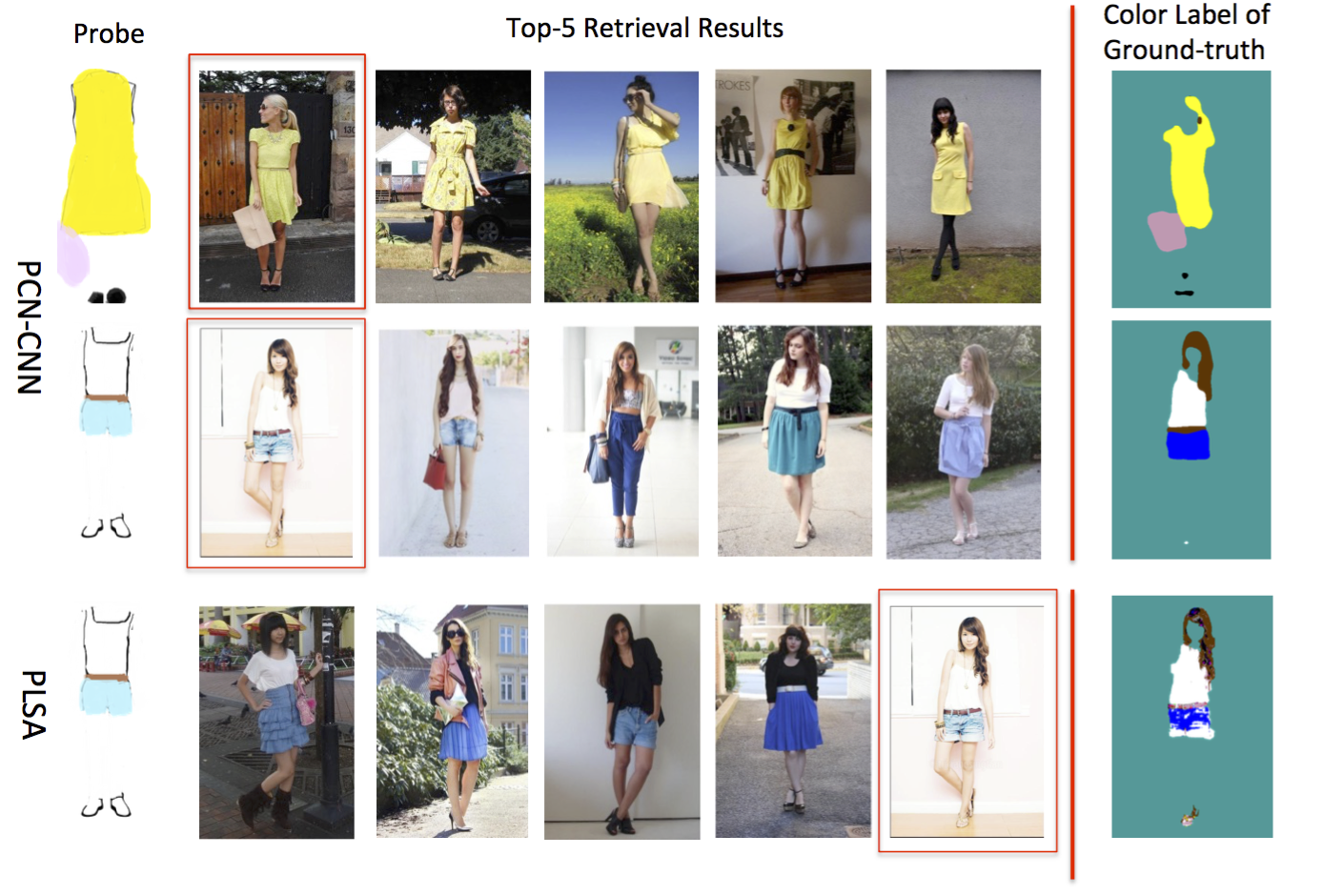

Moreover, we show that PCN-CNN features make it possible to "retrieve with colors".
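The retrieval procedure is not detailed here, so the following is only a hedged sketch of how such a search could work: a color descriptor computed from the user's sketch is compared against the descriptors of gallery images, and the gallery is ranked by distance. The L1 distance and the function name `rank_gallery` are assumptions for illustration.

```python
import numpy as np

def rank_gallery(query_feat, gallery_feats):
    """Return gallery indices ordered from most to least similar.

    query_feat: array of shape (D,), descriptor of the color sketch.
    gallery_feats: array of shape (N, D), one descriptor per gallery image.
    Similarity is measured by L1 distance (an assumed choice).
    """
    dists = np.abs(gallery_feats - query_feat[None, :]).sum(axis=1)
    return np.argsort(dists, kind="stable")

# Toy two-color descriptors: the query is "all color 0".
gallery = np.array([[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]])
order = rank_gallery(np.array([1.0, 0.0]), gallery)  # -> [0, 2, 1]
```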

[1] Zheng, L., Shen, L., Tian, L., Wang, S., Wang, J., Tian, Q.: Scalable person re-identification: A benchmark. In: International Conference on Computer Vision (ICCV) (2015)

[2] Simonyan, K., Zisserman, A.: Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556 (2014)

[3] Gray, D., Brennan, S., Tao, H.: Evaluating appearance models for recognition, reacquisition, and tracking. In: International Workshop on Performance Evaluation for Tracking and Surveillance (2007)

[4] Gray, D., Tao, H.: Viewpoint invariant pedestrian recognition with an ensemble of localized features. In: European Conference on Computer Vision (ECCV), pp. 262–275. Springer (2008)

[5] Yang, Y., Yang, J., Yan, J., Liao, S., Yi, D., Li, S.Z.: Salient color names for person re-identification. In: European Conference on Computer Vision (ECCV) (2014)

[6] Chen, Y.C., Zheng, W.S., Lai, J.: Mirror representation for modeling view-specific transform in person re-identification. In: International Joint Conference on Artificial Intelligence (IJCAI) (2015)

[7] Chen, D., Yuan, Z., Chen, B., Zheng, N.: Similarity learning with spatial constraints for person re-identification. In: Conference on Computer Vision and Pattern Recognition (CVPR) (2016)