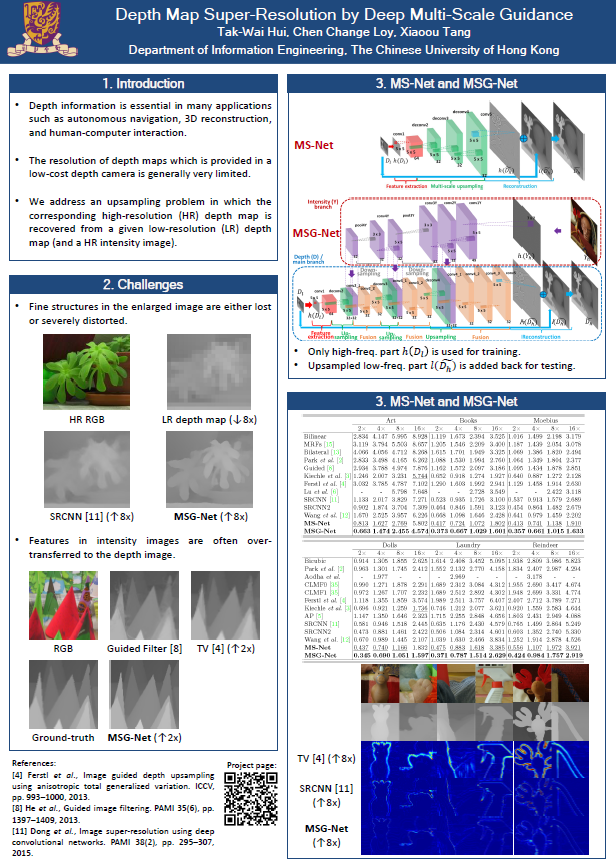

Depth Map Super-Resolution by Deep Multi-Scale Guidance

Tak-Wai Hui¹, Chen Change Loy¹˒², and Xiaoou Tang¹˒²

¹ Department of Information Engineering, The Chinese University of Hong Kong

² Shenzhen Institutes of Advanced Technology, Chinese Academy of Sciences

European Conference on Computer Vision (ECCV) 2016, Amsterdam, The Netherlands

Abstract

Depth boundaries often lose sharpness when upsampling from low-resolution (LR) depth maps, especially at large upscaling factors. We present a new method to address the problem of depth map super-resolution, in which a high-resolution (HR) depth map is inferred from an LR depth map and an additional HR intensity image of the same scene. We propose a Multi-Scale Guided convolutional network (MSG-Net) for depth map super-resolution. MSG-Net complements LR depth features with HR intensity features using a multi-scale fusion strategy. Such multi-scale guidance allows the network to better adapt to the upsampling of both fine- and large-scale structures. Specifically, the rich hierarchical HR intensity features at different levels progressively resolve ambiguity in depth map upsampling. Moreover, we employ a high-frequency domain training method that not only reduces training time but also facilitates the fusion of depth and intensity features. With the multi-scale guidance, MSG-Net achieves state-of-the-art performance for depth map upsampling.

Challenges

1. Fine structures that should appear in the enlarged image are either lost or severely distorted in the LR image (depending on the scale factor used), because its limited spatial resolution cannot fully represent them.

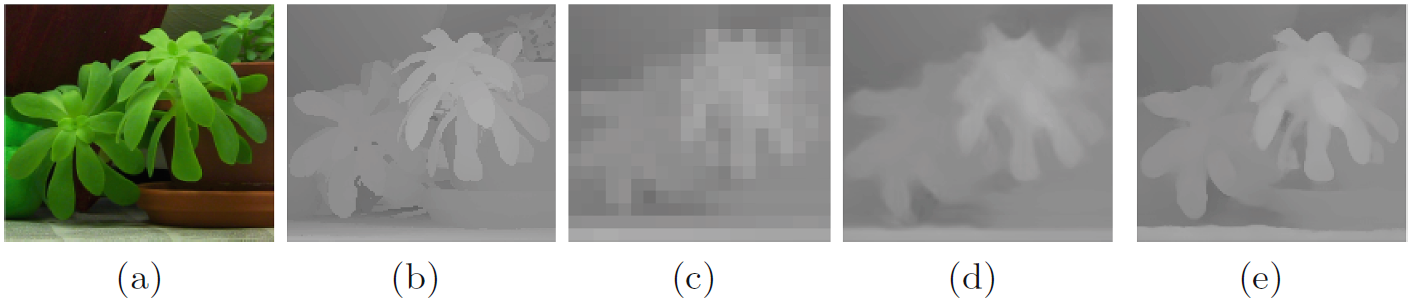

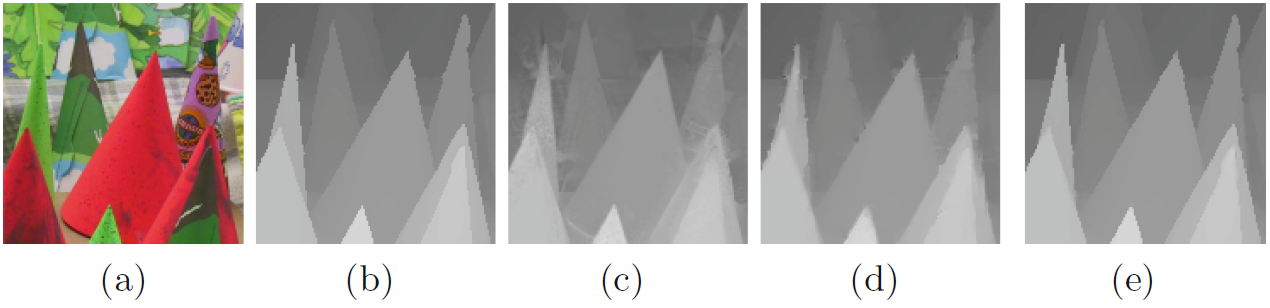

Ambiguity in upsampling a depth map. (a) Color image. (b) Ground truth. (c) (Enlarged) LR depth map downsampled by a factor of 8. Results for upsampling: (d) SRCNN, (e) our solution, which does not suffer from the ambiguity problem.

2. Features in intensity images are often over-transferred to the depth image.

Over-texture transfer in depth map refinement and upsampling using intensity guidance. (a) Color image. (b) Ground truth. (c) Refinement of (b) using (a) by Guided Filtering (r = 4, ε = 0.01²). Results of using (a) to guide the 2× upsampling of (b): (d) Ferstl et al., (e) our solution.
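The first challenge can be reproduced on a toy example. The sketch below is illustrative only and is not taken from the paper: the helper names `downsample_mean` and `upsample_nearest`, the block-averaging downsampler, and the synthetic 32×32 depth map are all assumptions. It shows a 2-pixel-wide structure being smeared into an 8-pixel-wide band with much weaker contrast once the map has passed through a factor-8 LR representation.

```python
import numpy as np

def downsample_mean(depth: np.ndarray, factor: int) -> np.ndarray:
    """Downsample by averaging non-overlapping factor x factor blocks (assumed degradation)."""
    h, w = depth.shape
    return depth.reshape(h // factor, factor, w // factor, factor).mean(axis=(1, 3))

def upsample_nearest(depth: np.ndarray, factor: int) -> np.ndarray:
    """Enlarge by repeating each LR pixel factor times along both axes."""
    return np.repeat(np.repeat(depth, factor, axis=0), factor, axis=1)

# Synthetic HR depth map: background at depth 1.0, thin vertical structure at depth 0.5.
hr = np.ones((32, 32))
hr[:, 13:15] = 0.5

lr = downsample_mean(hr, 8)          # 4 x 4 LR depth map
restored = upsample_nearest(lr, 8)   # naive enlargement back to 32 x 32

print("HR depth of structure:      ", hr[0, 13])
print("restored depth at same pixel:", restored[0, 13])
print("width of affected band (px): ", int((restored[0] < 1.0).sum()))
```

The original depth of the thin structure no longer appears anywhere in the enlarged map, which is the kind of information a single-image upsampler has to guess and a guided upsampler can try to recover from the HR intensity image.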

Contributions

(1) We propose a new framework to address the problem of depth map upsampling by complementing an LR depth map with the corresponding HR intensity image using a multi-scale guidance architecture (MSG-Net). To the best of our knowledge, MSG-Net is the first convolutional neural network that attempts to upsample depth images using multi-scale guidance.

(2) With the introduction of the multi-scale upsampling architecture, our compact single-image upsampling network (MS-Net), which uses no guidance from an HR intensity image, already outperforms most state-of-the-art methods that require such guidance.

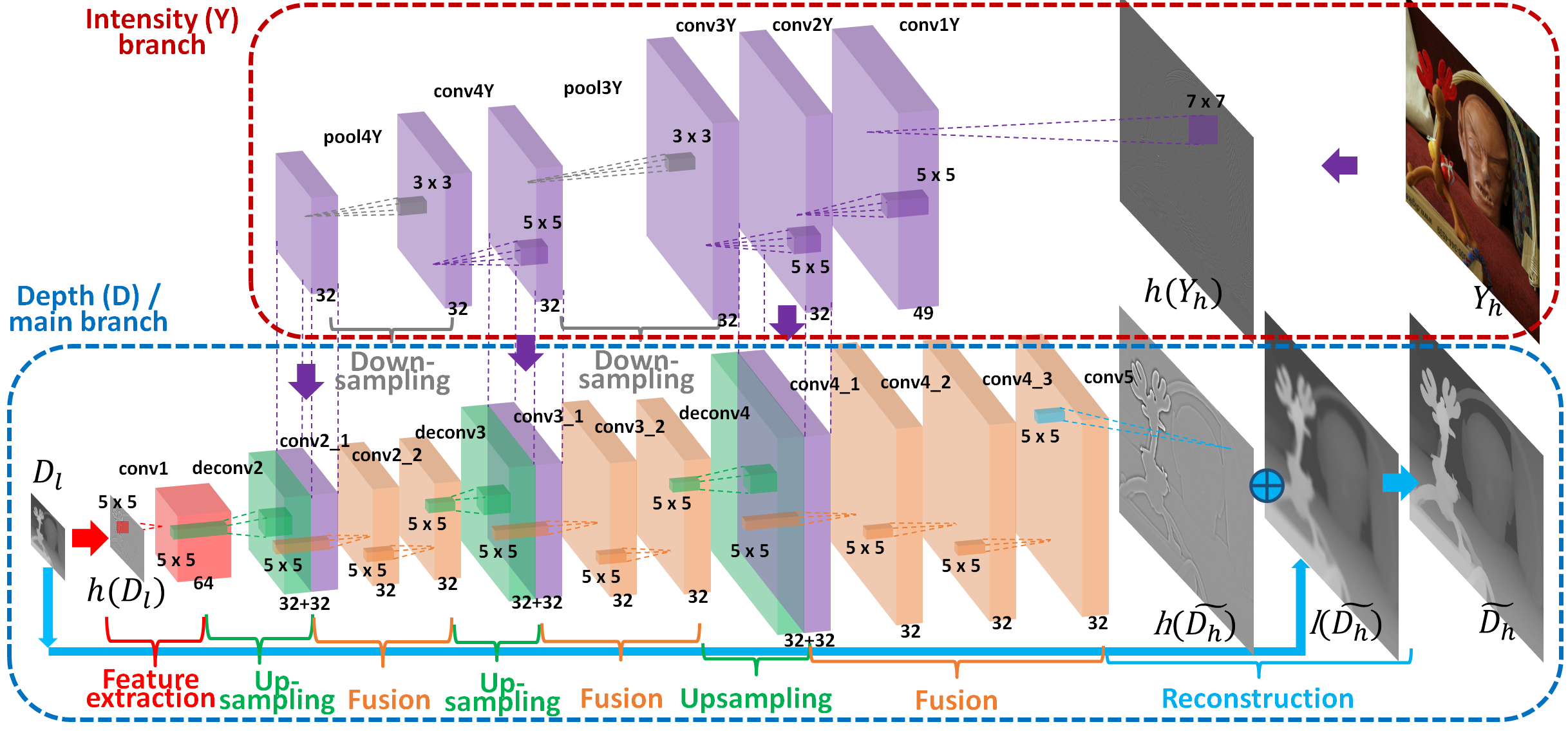

Multi-Scale Guidance Network (MSG-Net)

We propose a new framework to address the problem of depth map upsampling by complementing an LR depth map with the corresponding HR intensity image using a convolutional neural network in a multi-scale guidance architecture. Flat intensity patches (regardless of what intensity values they possess) contribute little to depth super-resolution. Therefore, we complement depth features with the associated intensity features in the high-frequency domain.
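To make the high-frequency argument concrete, the following sketch extracts a high-frequency component by subtracting a low-pass filtered copy from the image. This is a minimal illustration under stated assumptions, not the authors' implementation: the box filter, its size `k`, and the helper names `box_lowpass` and `high_frequency` are hypothetical choices, since this page does not specify which low-pass filter is used.

```python
import numpy as np

def box_lowpass(img: np.ndarray, k: int = 5) -> np.ndarray:
    """Low-pass filter with a k x k box kernel (k odd, borders replicated).

    The box kernel is an assumption made for this sketch only.
    """
    pad = k // 2
    padded = np.pad(img, pad, mode="edge")
    out = np.zeros(img.shape, dtype=np.float64)
    # Sum the k*k shifted copies of the image, then normalise.
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + img.shape[0], dx:dx + img.shape[1]]
    return out / (k * k)

def high_frequency(img, k: int = 5) -> np.ndarray:
    """High-frequency component: the image minus its low-pass version."""
    img = np.asarray(img, dtype=np.float64)
    return img - box_lowpass(img, k)

# Two flat patches with different intensities and one patch with a step edge.
flat_dark = np.full((16, 16), 0.2)
flat_bright = np.full((16, 16), 0.9)
edge = np.zeros((16, 16))
edge[:, 8:] = 1.0

hf_flat_dark = high_frequency(flat_dark)
hf_flat_bright = high_frequency(flat_bright)
hf_edge = high_frequency(edge)

print("max |HF| flat dark:  ", np.abs(hf_flat_dark).max())
print("max |HF| flat bright:", np.abs(hf_flat_bright).max())
print("max |HF| step edge:  ", np.abs(hf_edge).max())
```

Both flat patches map to (numerically) zero regardless of their intensity value, while the edge patch keeps a strong response around the discontinuity, so only the structure that is useful as guidance survives.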

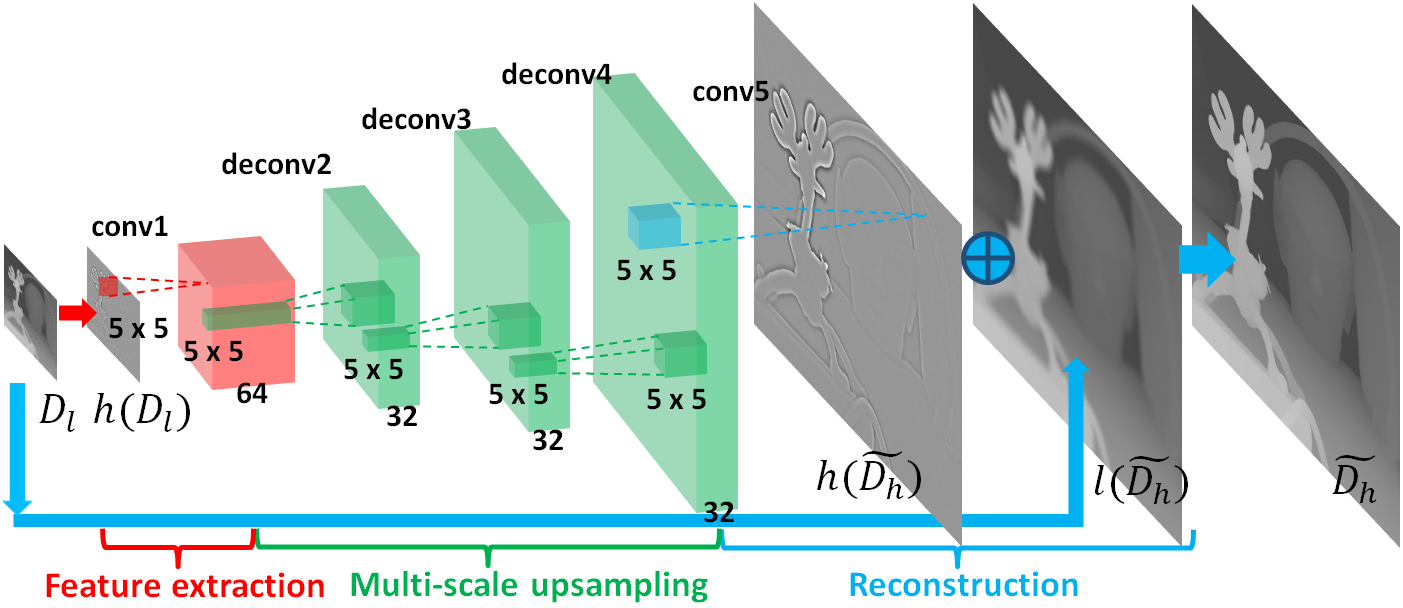

A Special Case: Single-Image Super-Resolution Using Multi-Scale Network (MS-Net)

When the (intensity) guidance branch and the fusion stages are removed, MSG-Net reduces to a compact multi-scale network (MS-Net) for super-resolving images.

Experimental Results

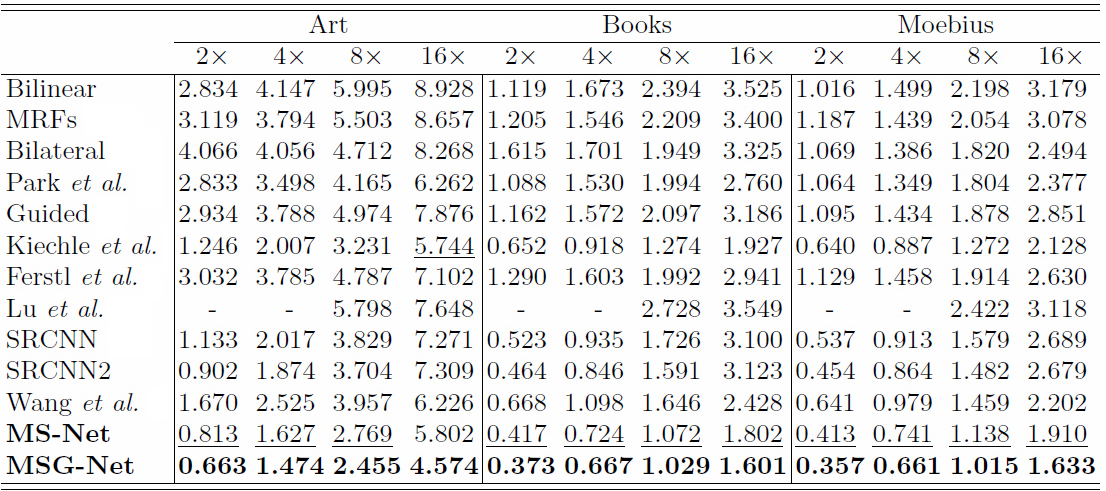

Quantitative comparison (in RMSE) on the Middlebury dataset.
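For reference, root-mean-square error between an upsampled depth map and its ground truth can be computed as in the sketch below. The function name `rmse` and the toy values are illustrative; details of the evaluation protocol behind the table (such as value range or masking of invalid pixels) are not stated here, so none are assumed.

```python
import numpy as np

def rmse(pred, gt) -> float:
    """Root-mean-square error over all pixels of two equally sized depth maps."""
    pred = np.asarray(pred, dtype=np.float64)
    gt = np.asarray(gt, dtype=np.float64)
    if pred.shape != gt.shape:
        raise ValueError("pred and gt must have the same shape")
    return float(np.sqrt(np.mean((pred - gt) ** 2)))

# Toy example: per-pixel errors are 0, 2, 0 and -4, so the mean squared error is 5.
gt = [[10, 20], [30, 40]]
pred = [[10, 22], [30, 36]]
score = rmse(pred, gt)
print(score)
```

Lower values are better, and because errors are squared before averaging, a few large errors at depth boundaries weigh more heavily than many small ones.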

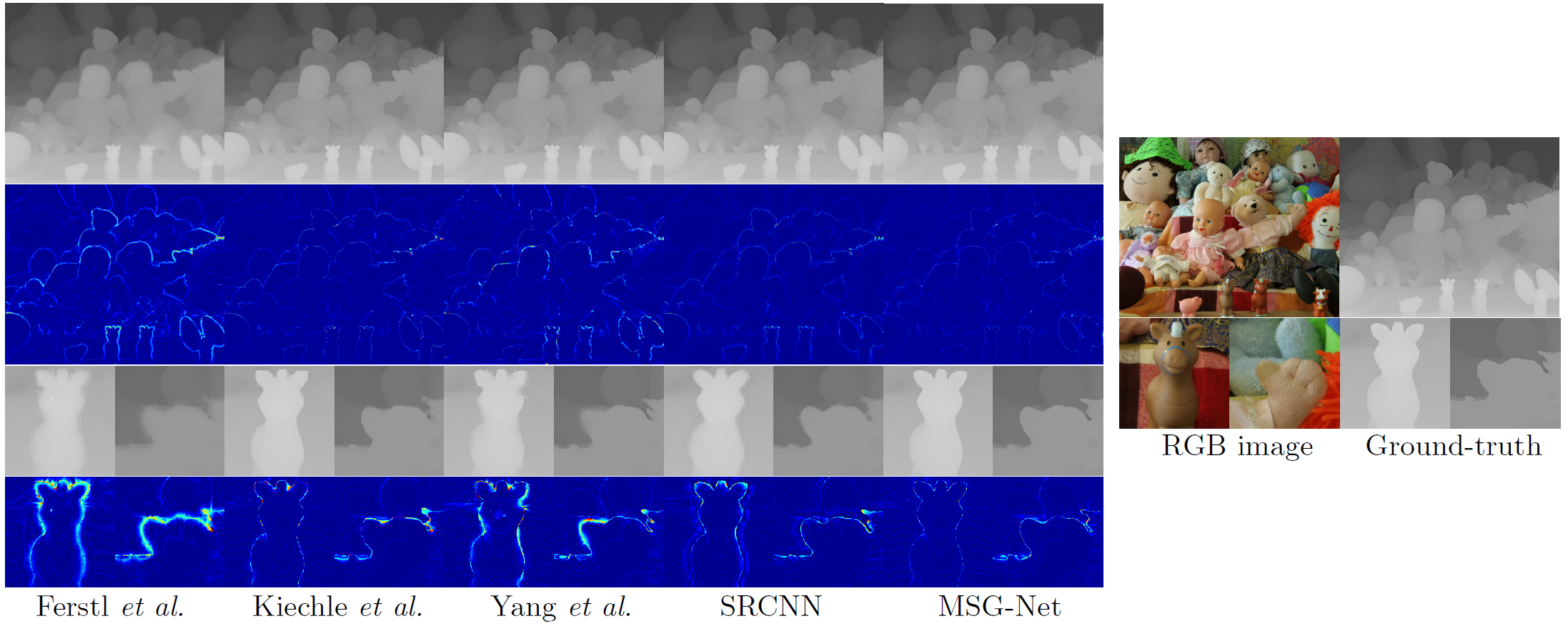

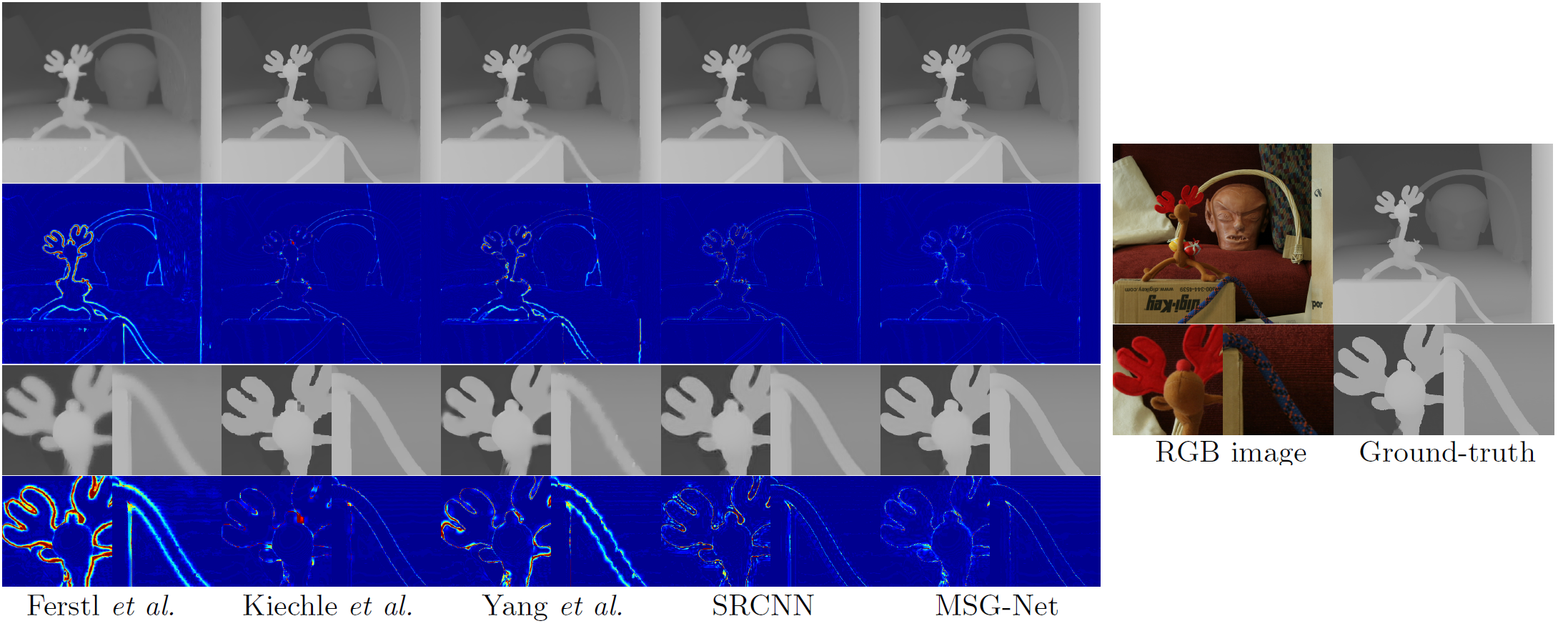

Upsampled depth maps and error maps at an upscaling factor of 8 for Dolls and Reindeer from the Middlebury dataset.

Materials

Citation

@inproceedings{hui16msgnet,
  author = {Tak-Wai Hui and Chen Change Loy and Xiaoou Tang},
  title = {Depth Map Super-Resolution by Deep Multi-Scale Guidance},
  booktitle = {Proceedings of European Conference on Computer Vision (ECCV)},
  pages = {353--369},
  year = {2016}}