In this project, I studied the camera model and obtained a result that differs slightly from the one introduced in the lecture. I compare the two models in the experiment part. Bundle adjustment is implemented but is limited to the rotation matrices; other variables can be added for better alignment.

Since there is an excellent tutorial by Richard Szeliski [1] and nearly all the details are covered in the lecture, I only give the details of the camera model and bundle adjustment. I then outline the main procedure in my code, followed by the experiment results.

Binary File with Sample Images

Since the images in the "antcrim_Piazzanavona" sample have different sizes, the identical-focal-length camera model needs some changes. Here I use the CCD camera model (Richard Hartley and Andrew Zisserman, Multiple View Geometry in Computer Vision, 1st edition, p. 143).

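Suppose the numbers of pixels per unit distance in image coordinates are $m_x$ and $m_y$ in the x and y directions. Then the transform from world coordinates to pixel coordinates is

$$\begin{pmatrix} x \\ y \\ w \end{pmatrix} = \begin{pmatrix} f_x & 0 & x_0 \\ 0 & f_y & y_0 \\ 0 & 0 & 1 \end{pmatrix} \begin{pmatrix} X \\ Y \\ Z \end{pmatrix}$$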

So a 3D point $P(X, Y, Z)$ becomes the image point $p(x/w, y/w)$ in pixel coordinates, where $f_x = f \cdot m_x$ and $f_y = f \cdot m_y$ are the focal lengths of the camera in pixels in the x and y directions respectively, and $w = Z$. $(x_0, y_0)$ are the coordinates of the image coordinate origin (the principal point) in the image plane, as shown in the figure; usually $x_0 = \text{width} / 2$ and $y_0 = \text{height} / 2$.
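
As a minimal sketch of the projection described above, the following NumPy snippet builds the calibration matrix from $f$, $m_x$, $m_y$ and the image size, then maps a 3D point to pixel coordinates. The function names `intrinsic_matrix` and `project` and the numeric values are illustrative assumptions, not taken from the project code.

```python
import numpy as np

def intrinsic_matrix(f, mx, my, width, height):
    """CCD calibration matrix, assuming the principal point is the image center."""
    fx, fy = f * mx, f * my              # focal length in pixels along x and y
    x0, y0 = width / 2.0, height / 2.0   # assumed principal point
    return np.array([[fx, 0.0, x0],
                     [0.0, fy, y0],
                     [0.0, 0.0, 1.0]])

def project(K, P):
    """Project a 3D point P = (X, Y, Z), given in the camera frame, to pixels."""
    x, y, w = K @ np.asarray(P, dtype=float)   # homogeneous (x, y, w) with w = Z
    return np.array([x / w, y / w])

# Hypothetical numbers chosen only for illustration.
K = intrinsic_matrix(f=1.0, mx=500.0, my=500.0, width=640, height=480)
pixel = project(K, (0.2, -0.1, 2.0))
```

Because each image gets its own `K`, images of different sizes can share the same 3D geometry while keeping their own pixel scale and center.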

Firstly I'd like to take another writing format of the transform above.

![]() ,

,

where,

.

.

If we set the coordinates frame center at the camera center, Z axis along the focal of the camera, the coordinates of P now become as:

![]()

where R is the 3D rotation matrix. Consequently the corresponding image point is

![]()

Now let's go further,

![]()

![]()

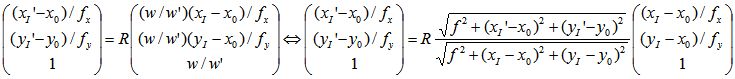

The last equation ensures us to get the 3D rotation matrix from only the image coordinates easily. Let's study it more carefully.

Product 1/w' to both side of the equations,

where w=Z and w'=Z'.

So,

![]() , and

, and

Now we get the final linear equations about the rotation matrix,

Usually, a single focal length shared by all images is used, and the above equations reduce to the same formula given in the lecture slides.

Note: However, the weight (the ratio of $w$ to $w'$) has also been ignored to get the equations in the lecture. In the experiment part, I'll test the effect of the weight.

*** It's an interesting result since we can recover the 3D rotation from purely 2D images.
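
To make the idea of recovering a rotation from 2D points concrete, here is a hedged sketch. It is not the project's solver: it back-projects matched pixels through the inverse calibration matrices, normalizes the rays to unit length (one way to remove the depth-ratio weight, since a rotation preserves length), solves a linear least-squares problem for the matrix entries, and projects the result onto the nearest rotation with an SVD. The names `pixels_to_rays` and `estimate_rotation` are assumptions for illustration.

```python
import numpy as np

def pixels_to_rays(K, pts):
    """Back-project Nx2 pixel points to unit-length viewing rays (Nx3)."""
    pts_h = np.hstack([pts, np.ones((len(pts), 1))])   # rows (x/w, y/w, 1)
    rays = pts_h @ np.linalg.inv(K).T                   # rows K^-1 p
    return rays / np.linalg.norm(rays, axis=1, keepdims=True)

def estimate_rotation(K1, pts1, K2, pts2):
    """Least-squares R such that rays2 ~ R @ rays1, forced to be a rotation."""
    a = pixels_to_rays(K1, pts1)
    b = pixels_to_rays(K2, pts2)
    # Row form of b_i = R a_i is b = a @ R^T, so lstsq returns X = R^T.
    X, *_ = np.linalg.lstsq(a, b, rcond=None)
    # Nearest rotation to X^T in the Frobenius sense.
    U, _, Vt = np.linalg.svd(X.T)
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(U @ Vt))])
    return U @ D @ Vt
```

At least three matches with non-coplanar rays are needed for the linear system to have full rank; in practice many more are used, and outliers are handled separately (for example with RANSAC).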

The alignment accuracy can be improved by bundle adjustment, which minimizes the global alignment error. Suppose we have obtained all the rotation matrices with respect to image i, i.e., we know every image's position relative to image i. Our objective is then,

$R_j p_{ij} - R_k p_{ik} \approx 0$, or $\min |R_j p_{ij} - R_k p_{ik}|^2$,

where $R_j p_{ij}$ maps a point in image j to its corresponding point in image i.

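With Rodrigues' rotation formula

$$R(\hat{n}, \theta) = I + \sin\theta \, [\hat{n}]_\times + (1 - \cos\theta) \, [\hat{n}]_\times^2,$$

we can update the rotation matrix by $R(I + D_R)$, where

$$D_R = [\omega]_\times = \begin{pmatrix} 0 & -\omega_z & \omega_y \\ \omega_z & 0 & -\omega_x \\ -\omega_y & \omega_x & 0 \end{pmatrix}$$

and $\omega = \theta \hat{n}$ is a small incremental rotation vector.

So the above objective function can be minimized by least-squares estimation of ω.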
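
The following is a minimal sketch of such a least-squares update for a single image pair, assuming the matched points are already given as 3D rays. It linearizes the rotation update as $I + [\omega]_\times$, solves for ω, and applies the exact Rodrigues rotation. The function names and the choice to refine only $R_j$ while keeping $R_k$ fixed are simplifying assumptions, not the project's implementation.

```python
import numpy as np

def skew(v):
    """Cross-product matrix [v]x, so that skew(v) @ u == np.cross(v, u)."""
    return np.array([[0.0, -v[2], v[1]],
                     [v[2], 0.0, -v[0]],
                     [-v[1], v[0], 0.0]])

def rodrigues(omega):
    """Rotation matrix for the rotation vector omega = theta * n."""
    theta = np.linalg.norm(omega)
    if theta < 1e-12:
        return np.eye(3)
    N = skew(omega / theta)
    return np.eye(3) + np.sin(theta) * N + (1.0 - np.cos(theta)) * (N @ N)

def refine_rotation(Rj, Rk, pj, pk, iters=5):
    """Gauss-Newton on omega to reduce sum |Rj pj - Rk pk|^2 (pj, pk are Nx3)."""
    for _ in range(iters):
        # Rj (I + [w]x) p = Rj p - Rj [p]x w, so the Jacobian block is -Rj [p]x.
        A = np.vstack([-Rj @ skew(p) for p in pj])      # shape (3N, 3)
        r = (pj @ Rj.T - pk @ Rk.T).reshape(-1)         # current residuals, (3N,)
        omega, *_ = np.linalg.lstsq(A, -r, rcond=None)
        Rj = Rj @ rodrigues(omega)                      # stay exactly on SO(3)
    return Rj
```

A full bundle adjustment would stack such blocks for all overlapping pairs and solve for every image's ω at once; this sketch only shows the per-pair building block.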

The details above cover what differs slightly from the lecture. Here I outline the main steps of my project without going into detail, since most of them follow the lecture.

1. Optionally, first correct radial distortion to compensate for lens defects.

2. Choose a motion model for the camera motion between the photos. In this project, a simple rotation model is used.

3. Warp the images to spherical coordinates.

4. Pair-wise alignment using RANSAC.

5. Bundle adjustment of the rotation matrices.

6. Image blending. There is a lot of attractive work on this step; I use simple linear blending here.
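
As an illustration of the last step only, here is one simple form of linear blending, sketched under the assumption that the images are already aligned, have equal height, and differ only by a horizontal offset on the output canvas. Each pixel is weighted by a ramp that falls off linearly from the image center toward its left and right edges. The names `linear_weights` and `blend` are hypothetical, and the sketch ignores empty (black) regions produced by warping.

```python
import numpy as np

def linear_weights(width):
    """Largest weight at the center column, falling linearly toward the edges."""
    x = np.arange(width)
    center = (width - 1) / 2.0
    return 1.0 - np.abs(x - center) / (center + 1.0)   # strictly positive

def blend(images, offsets, canvas_width):
    """Weighted average of HxWxC float images placed at the given left columns."""
    h, _, c = images[0].shape
    acc = np.zeros((h, canvas_width, c))
    wsum = np.zeros((h, canvas_width, 1))
    for img, x0 in zip(images, offsets):
        w = linear_weights(img.shape[1])[None, :, None]
        acc[:, x0:x0 + img.shape[1]] += w * img
        wsum[:, x0:x0 + img.shape[1]] += w
    return acc / np.maximum(wsum, 1e-12)   # uncovered columns stay at zero
```

In overlap regions the result moves smoothly from one image to the other, which hides small exposure differences but can blur detail if the alignment is imperfect.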

I call the camera model with the weight the Extended Camera Model :), and compare it to the model that ignores the weight. One would expect little difference between them, and that is indeed the case. The rotation matrices for "mountain" are listed below, and the final panoramas are nearly the same.

| Image | Model | R11 | R12 | R13 | R21 | R22 | R23 | R31 | R32 | R33 |
|---|---|---|---|---|---|---|---|---|---|---|
| 0.tga | model with weight | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 1 |
| 0.tga | without weight | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 1 |
| 1.tga | model with weight | 0.964506 | 0.057298 | -0.257768 | -0.056551 | 0.998346 | 0.010318 | 0.257933 | 0.004625 | 0.966152 |
| 1.tga | without weight | 0.964361 | 0.056962 | -0.258386 | -0.056235 | 0.998365 | 0.010208 | 0.258546 | 0.004686 | 0.965988 |
| 2.tga | model with weight | 0.802176 | 0.034114 | -0.596113 | 0.024046 | 0.995711 | 0.08934 | 0.596604 | -0.086 | 0.797915 |
| 2.tga | without weight | 0.801818 | 0.033886 | -0.596607 | 0.024323 | 0.995713 | 0.089244 | 0.597074 | -0.086069 | 0.797556 |
| 3.tga | model with weight | 0.965114 | 0.036092 | -0.259329 | 0.02201 | 0.975765 | 0.217713 | 0.260902 | -0.215826 | 0.94093 |
| 3.tga | without weight | 0.965026 | 0.035585 | -0.259727 | 0.022572 | 0.975786 | 0.217558 | 0.26118 | -0.215812 | 0.940856 |
| 4.tga | model with weight | 0.998837 | 0.013955 | 0.046149 | -0.025128 | 0.967588 | 0.251279 | -0.041146 | -0.252147 | 0.966814 |
| 4.tga | without weight | 0.998837 | 0.013942 | 0.046148 | -0.025115 | 0.96759 | 0.251273 | -0.041149 | -0.25214 | 0.966815 |
| 5.tga | model with weight | 0.886619 | 0.045405 | 0.460266 | -0.052322 | 0.998628 | 0.002275 | -0.459531 | -0.026099 | 0.887778 |
| 5.tga | without weight | 0.888364 | 0.030002 | 0.458158 | -0.037125 | 0.999289 | 0.006547 | -0.457636 | -0.022825 | 0.888847 |
| 6.tga | model with weight | 0.871266 | 0.016949 | 0.490518 | -0.141716 | 0.965524 | 0.218356 | -0.469906 | -0.25976 | 0.843631 |
| 6.tga | without weight | 0.872624 | 0.002082 | 0.488388 | -0.126839 | 0.966645 | 0.222508 | -0.471635 | -0.256113 | 0.843781 |
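
One way to quantify how close two such matrices are is the angle of the relative rotation between them. The sketch below applies this to the two 5.tga rows of the table, reading the nine values as the rows of a 3x3 matrix (an assumption, though the rows are orthonormal under this reading). The helper name `rotation_angle_deg` is illustrative.

```python
import numpy as np

def rotation_angle_deg(Ra, Rb):
    """Angle (degrees) of the relative rotation Ra^T Rb."""
    cos_theta = (np.trace(Ra.T @ Rb) - 1.0) / 2.0
    # Clip guards against round-off in the six-decimal table values.
    return np.degrees(np.arccos(np.clip(cos_theta, -1.0, 1.0)))

# 5.tga, model with weight (values copied from the table above).
with_weight = np.array([0.886619, 0.045405, 0.460266,
                        -0.052322, 0.998628, 0.002275,
                        -0.459531, -0.026099, 0.887778]).reshape(3, 3)

# 5.tga, model without weight.
without_weight = np.array([0.888364, 0.030002, 0.458158,
                           -0.037125, 0.999289, 0.006547,
                           -0.457636, -0.022825, 0.888847]).reshape(3, 3)

angle = rotation_angle_deg(with_weight, without_weight)
print(f"5.tga: the two models differ by {angle:.3f} degrees")
```

The same function can be applied to every row pair to see which images are most affected by the weight.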

After carefully checking both the code and the algorithm, I have to admit that bundle adjustment applied only to the rotation matrices has little effect on the final results. It may work better if the focal length and more complex motion models are taken into account.

View Panorama: Mountain, Tree, Lobby, Street

(To make the panoramas more pleasant to view, I cropped out the black parts at the left and right of each image while keeping the image width unchanged.)

[1] Richard Szeliski. Image Alignment and Stitching: A Tutorial. MSR technical report.

[2] Richard Szeliski and Heung-Yeung Shum. Creating Full View Panoramic Image Mosaics and Environment Maps. SIGGRAPH 97.

[3] Matthew Brown and David Lowe. Automatic Panoramic Image Stitching using Invariant Features. IJCV 07.

[4] M. Brown, R. I. Hartley, and D. Nister. Minimal Solutions for Panoramic Stitching. CVPR 07.

[5] Richard Hartley and Andrew Zisserman. Multiple View Geometry in Computer Vision. 2nd edition.