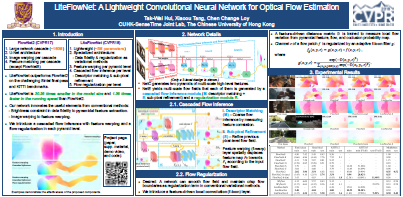

LiteFlowNet: A Lightweight Convolutional Neural Network for Optical Flow Estimation

Tak-Wai Hui, Xiaoou Tang, and Chen Change Loy

CUHK-SenseTime Joint Lab, The Chinese University of Hong Kong

IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 2018, Spotlight Presentation, Salt Lake City, Utah

Abstract

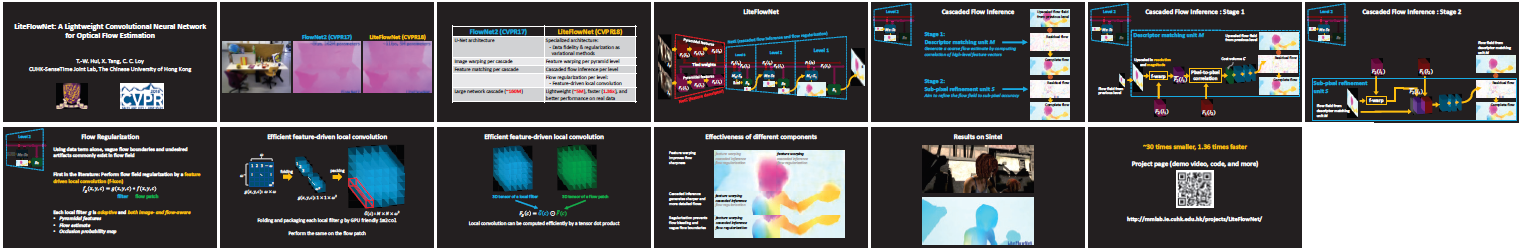

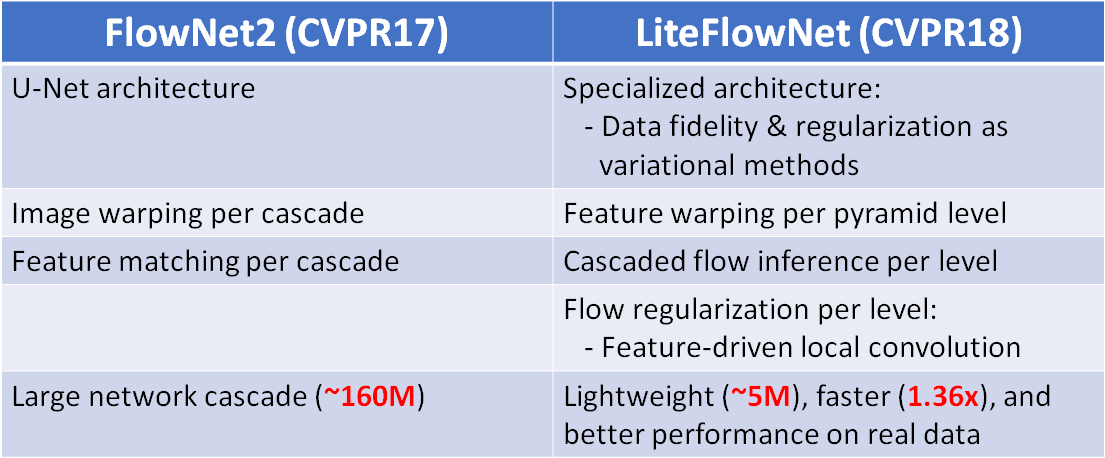

FlowNet2, the state-of-the-art convolutional neural network (CNN) for optical flow estimation, requires over 160M parameters to achieve accurate flow estimation. In this paper, we present an alternative network that outperforms FlowNet2 on the challenging Sintel final pass and KITTI benchmarks while being 30 times smaller in model size and 1.36 times faster in running speed. This is made possible by drilling down to architectural details that might have been missed in current frameworks: (1) We present a more effective flow inference approach at each pyramid level through a lightweight cascaded network. It not only improves flow estimation accuracy through early correction, but also permits seamless incorporation of descriptor matching in our network. (2) We present a novel flow regularization layer that ameliorates the issue of outliers and vague flow boundaries by using a feature-driven local convolution. (3) Our network has an effective structure for pyramidal feature extraction and embraces feature warping rather than the image warping practiced in FlowNet2.

1. Contributions

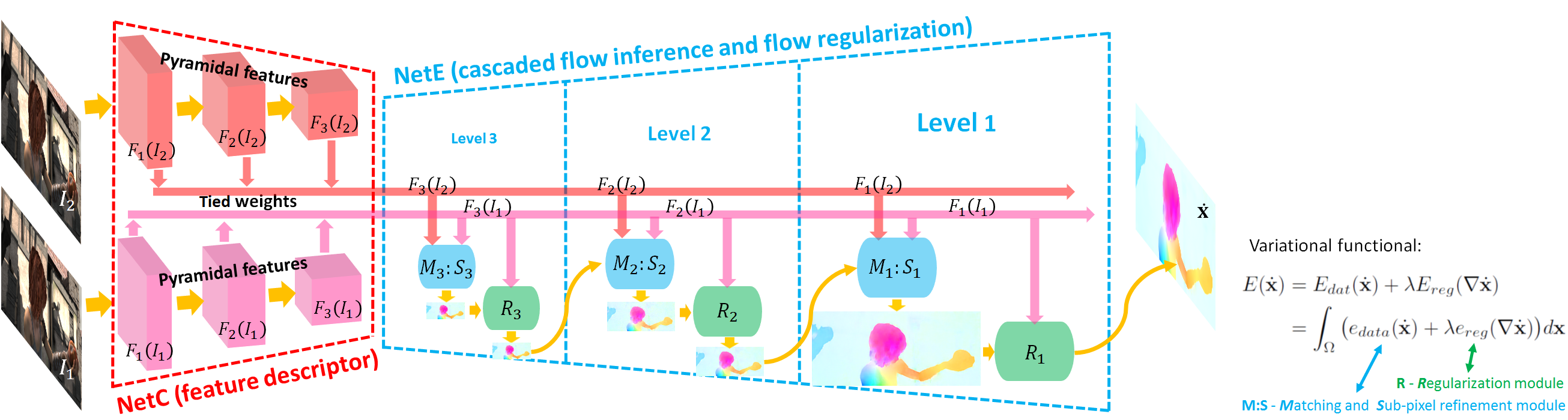

1. Pyramidal feature extraction. NetC is a two-stream network in which the filter weights are shared between the streams. Each stream functions as a feature descriptor that transforms an image into a pyramid of multi-scale high-level features.

2. Feature warping. To facilitate large-displacement flow inference, the high-level features of the second image are warped towards the high-level feature space of the first image by a feature warping (f-warp) layer at each pyramid level.

3. Cascaded flow inference. At each level of NetE, pixel-by-pixel matching (M) of high-level features yields a coarse flow estimate. A subsequent refinement (S) of the coarse flow improves it to sub-pixel accuracy.

4. Flow regularization. The estimated flow field can be sensitive to outliers if data fidelity is used alone. Module R in NetE regularizes the flow field by adapting the regularization kernel through a feature-driven local convolution (f-lcon) layer.
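The feature warping step in item 2 can be illustrated with a minimal NumPy sketch. It assumes bilinear interpolation and border clamping, neither of which is specified on this page; the function name `f_warp` and the `(dx, dy)` channel order of the flow are also hypothetical choices made for illustration, not the authors' implementation.

```python
import numpy as np

def f_warp(feat2, flow):
    """Warp feat2 (H, W, C) toward image 1 using flow (H, W, 2) = (dx, dy).

    Output pixel (x, y) samples feat2 at (x + dx, y + dy) with bilinear
    interpolation. Sampling positions are clamped to the border (assumption).
    """
    h, w = feat2.shape[:2]
    ys, xs = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    # Sampling positions in the second feature map, clamped to the valid range.
    x = np.clip(xs + flow[..., 0], 0, w - 1)
    y = np.clip(ys + flow[..., 1], 0, h - 1)
    x0 = np.floor(x).astype(int)
    y0 = np.floor(y).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)
    y1 = np.minimum(y0 + 1, h - 1)
    # Fractional offsets act as the bilinear weights.
    wx = (x - x0)[..., None]
    wy = (y - y0)[..., None]
    top = (1 - wx) * feat2[y0, x0] + wx * feat2[y0, x1]
    bottom = (1 - wx) * feat2[y1, x0] + wx * feat2[y1, x1]
    return (1 - wy) * top + wy * bottom
```

With a zero flow the function returns `feat2` unchanged. Because the warp acts on feature maps rather than on the image, the same routine could be applied at every pyramid level with the flow estimated at that level.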

2. Network Details

NetC generates two pyramids of high-level features. NetE yields multi-scale flow fields, each of which is generated by a cascaded flow inference module M:S (descriptor matching unit M + sub-pixel refinement unit S) and a regularization module R. The flow inference and regularization modules correspond to the data fidelity and regularization terms, respectively, in conventional energy minimization methods. For ease of presentation, only a 3-level design is shown.

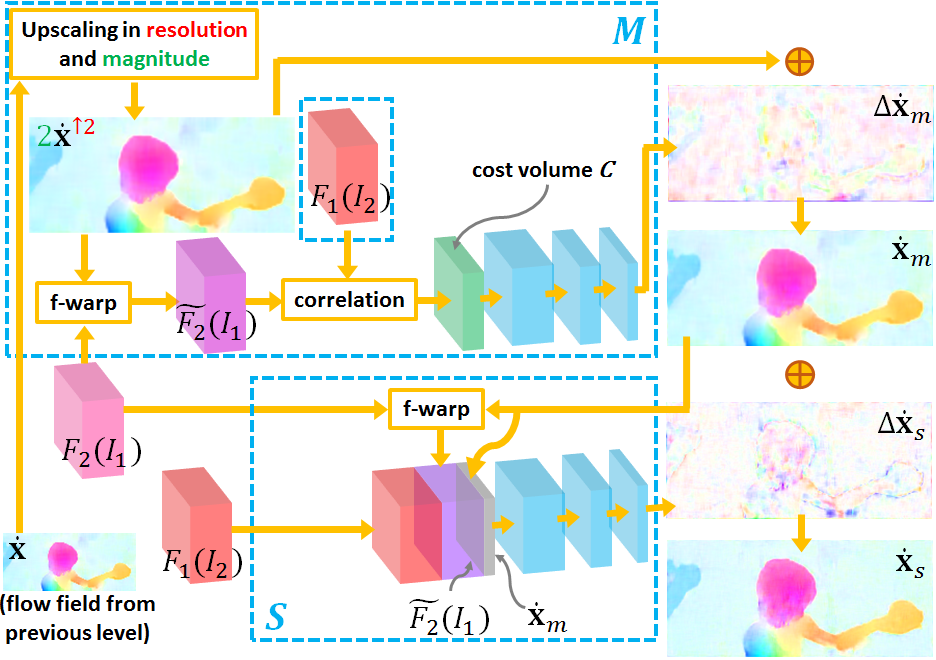
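For readers unfamiliar with the energy minimization view mentioned above, a generic variational formulation may help. The notation here is an assumption chosen for illustration and does not come from this page: $\mathbf{u}$ is the flow field, $I_1$ and $I_2$ are the two images, $\rho_D$ and $\rho_R$ are penalty functions, and $\lambda$ balances the two terms.

$$
E(\mathbf{u}) = \underbrace{\sum_{\mathbf{x}} \rho_D\big(I_2(\mathbf{x} + \mathbf{u}(\mathbf{x})) - I_1(\mathbf{x})\big)}_{\text{data fidelity}} + \lambda \underbrace{\sum_{\mathbf{x}} \rho_R\big(\nabla \mathbf{u}(\mathbf{x})\big)}_{\text{regularization}}
$$

Under this reading, the M:S module plays the role of the data fidelity term, and module R plays the role of the regularization term.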

A cascaded flow inference module M:S in NetE.

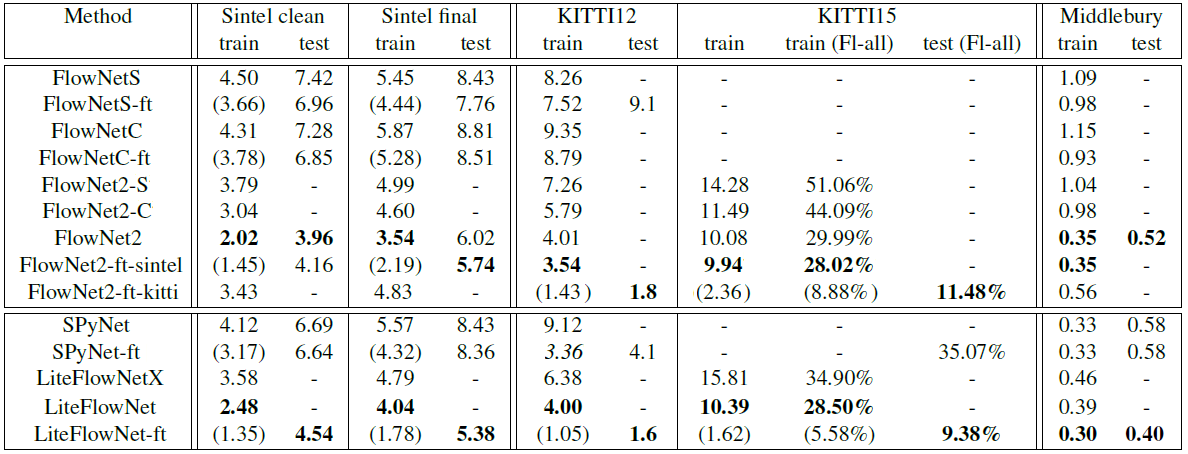
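As a rough intuition for the descriptor matching unit M, the sketch below finds, for each pixel, the integer displacement whose feature vector in the second map correlates best with the feature vector in the first map. This is a hand-written stand-in that assumes dot-product correlation followed by an argmax over a small search window; the name `match_integer` and the `radius` parameter are hypothetical, and the learned refinement performed by unit S is not reproduced here.

```python
import numpy as np

def match_integer(f1, f2, radius=2):
    """Stand-in for unit M: best integer displacement by feature correlation.

    f1, f2: feature maps of shape (H, W, C). Returns flow (H, W, 2) as (dx, dy).
    """
    h, w, _ = f1.shape
    # Zero-pad f2 so that every candidate shift stays inside the array.
    pad = np.pad(f2, ((radius, radius), (radius, radius), (0, 0)))
    shifts = [(dx, dy)
              for dy in range(-radius, radius + 1)
              for dx in range(-radius, radius + 1)]
    # Cost volume: one correlation map per candidate displacement.
    cost = np.stack([
        np.sum(f1 * pad[radius + dy: radius + dy + h,
                        radius + dx: radius + dx + w], axis=-1)
        for dx, dy in shifts], axis=-1)
    best = np.argmax(cost, axis=-1)
    return np.asarray(shifts, dtype=float)[best]
```

The result is accurate only to whole pixels, which is why a subsequent refinement stage is needed to reach sub-pixel accuracy. The sketch also assumes the feature vectors are normalized, so that the correct match has the highest correlation.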

3. Experimental Results

AEE of different methods. The values in parentheses are the results of the networks on the data they were trained on, and hence are not directly comparable to the others.

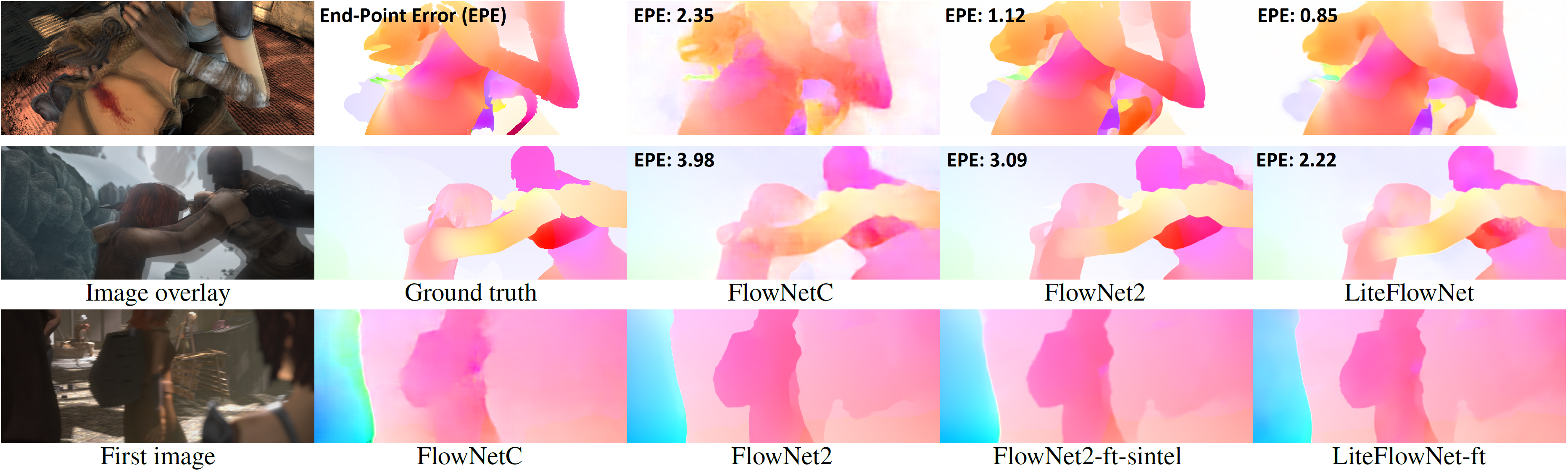
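AEE (average end-point error) is the mean Euclidean distance between predicted and ground-truth flow vectors. A minimal sketch of the metric follows; the function name and the optional `valid` mask argument are illustrative assumptions, added because benchmarks with sparse ground truth typically score only the labeled pixels.

```python
import numpy as np

def average_endpoint_error(flow_pred, flow_gt, valid=None):
    """Mean Euclidean distance between predicted and ground-truth flow.

    flow_pred, flow_gt: arrays of shape (H, W, 2).
    valid: optional boolean mask (H, W) selecting pixels with ground truth.
    """
    epe = np.linalg.norm(flow_pred - flow_gt, axis=-1)  # per-pixel error
    if valid is not None:
        epe = epe[valid]
    return float(epe.mean())
```

For example, if every predicted vector is `(0, 0)` and every ground-truth vector is `(3, 4)`, each per-pixel error is 5, so the AEE is 5.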

Examples of flow fields from different methods on the Sintel training set for the clean pass (top row) and the final pass (middle row), and on the testing set for the final pass (last row). Fine details are well preserved and fewer artifacts can be observed in the flow fields of LiteFlowNet.

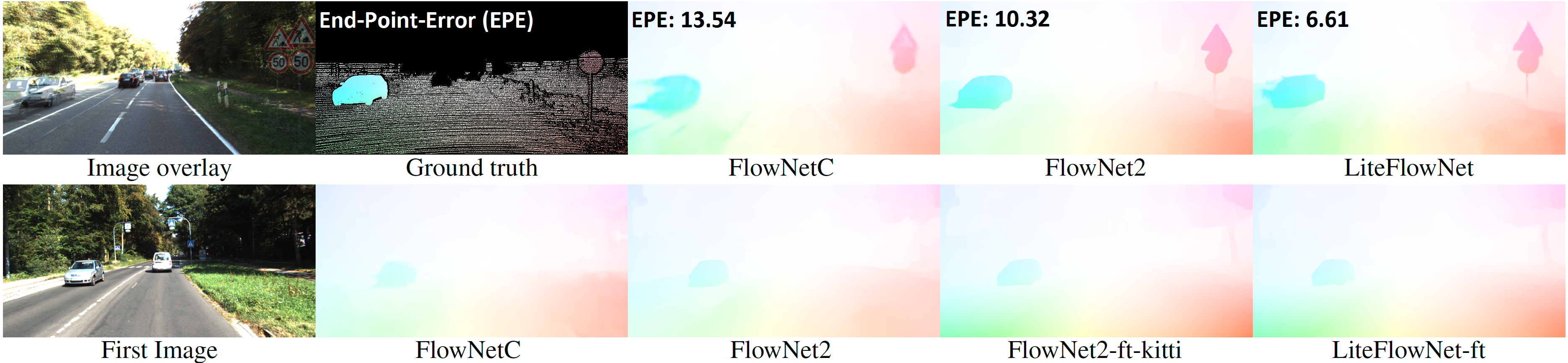

Examples of flow fields from different methods on the training set (top) and the testing set (bottom) of KITTI15.

4. Demo Video

5. Materials

Citation

@inproceedings{hui18liteflownet,
  author = {Tak-Wai Hui and Xiaoou Tang and Chen Change Loy},
  title = {LiteFlowNet: A Lightweight Convolutional Neural Network for Optical Flow Estimation},
  booktitle = {Proceedings of IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
  pages = {8981--8989},
  month = {June},
  year = {2018}
}