Recovering Realistic Texture in Image Super-resolution by Deep Spatial Feature Transform


Abstract

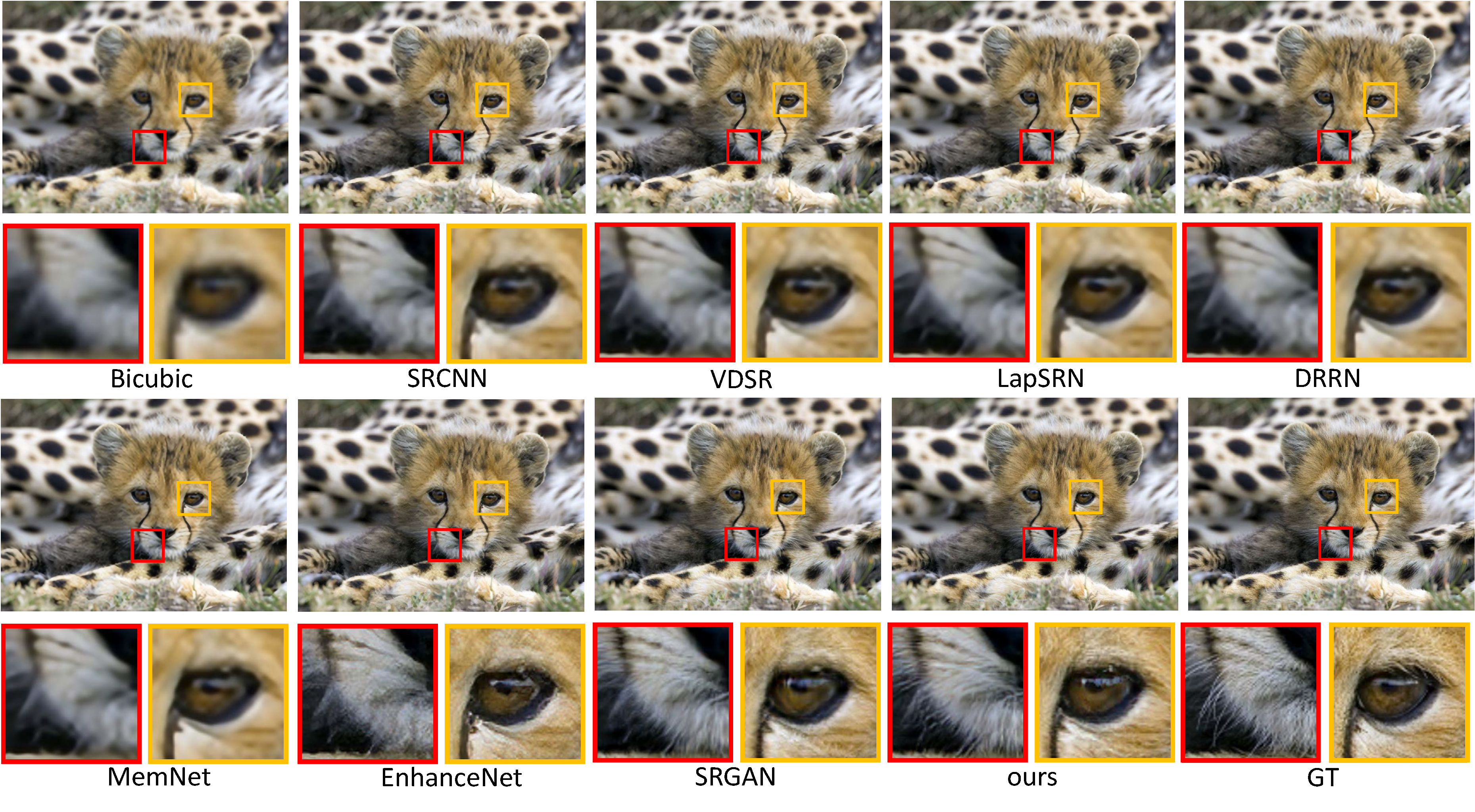

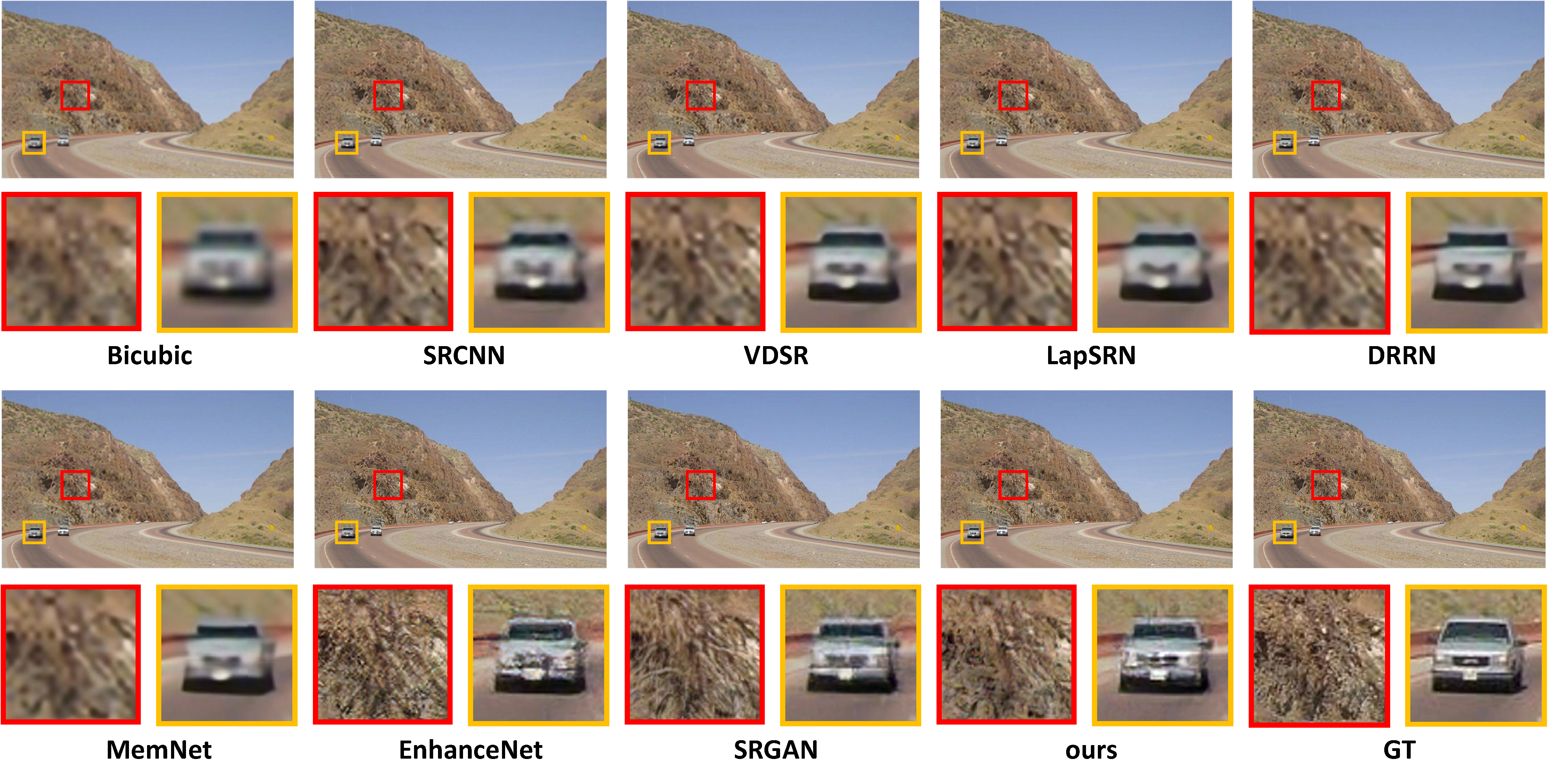

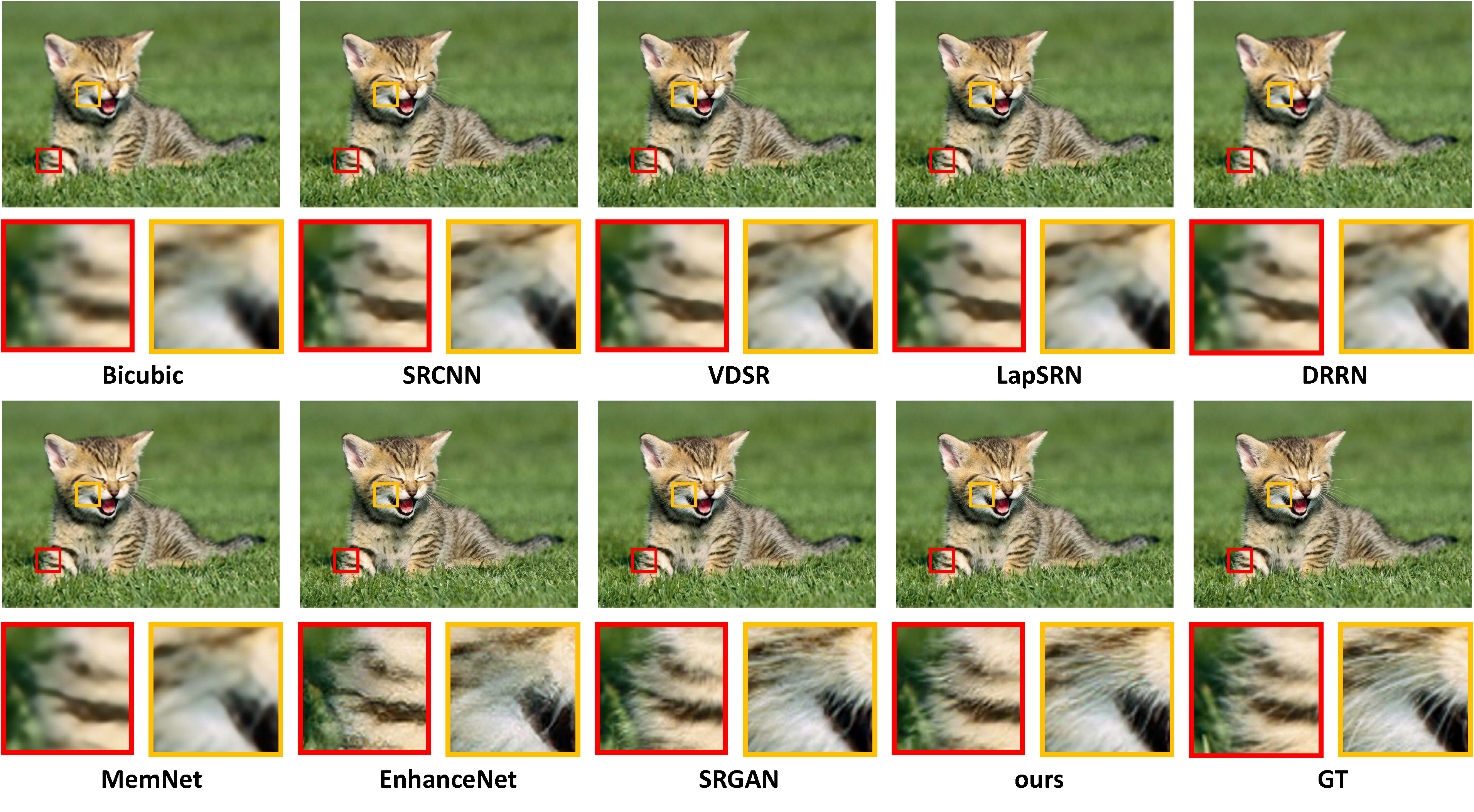

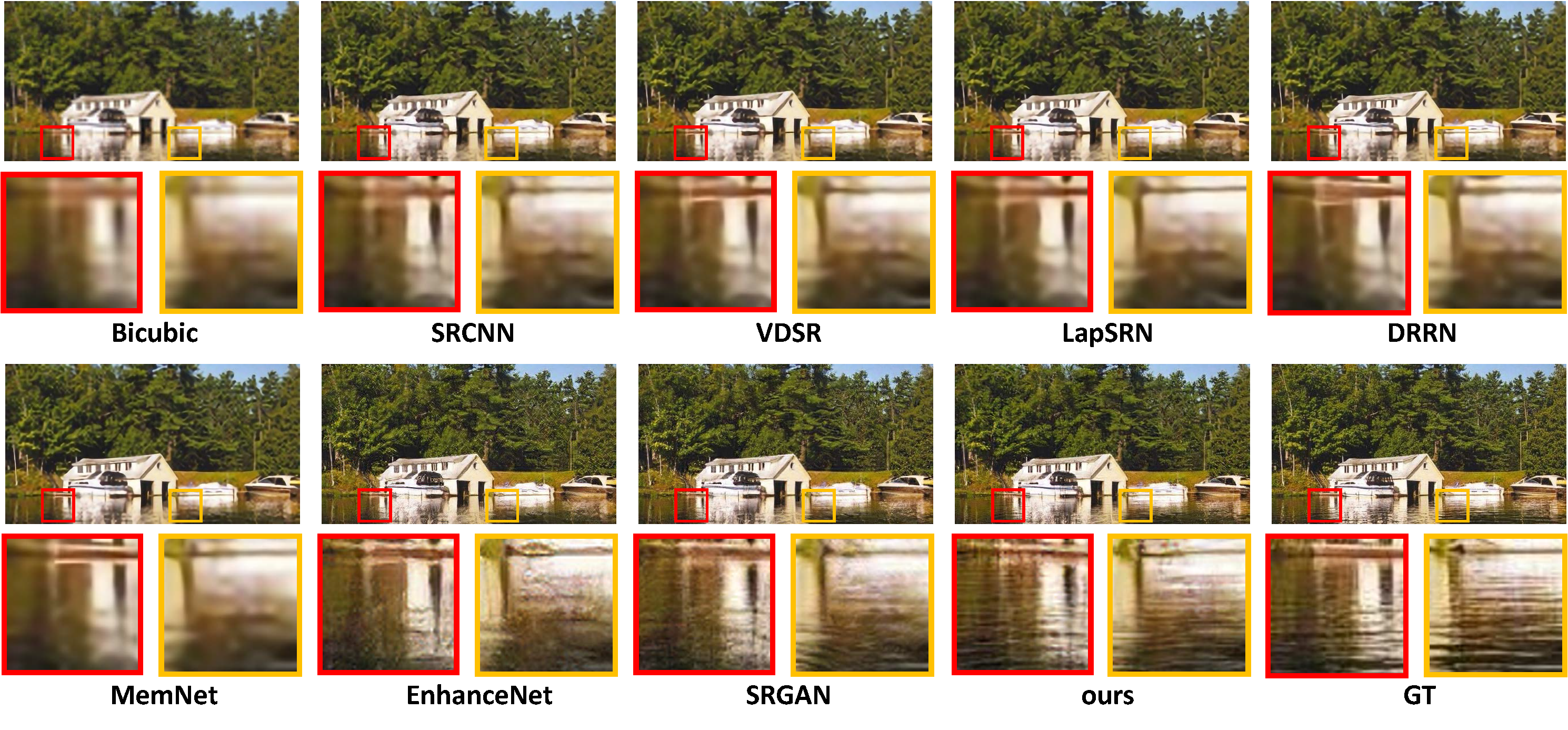

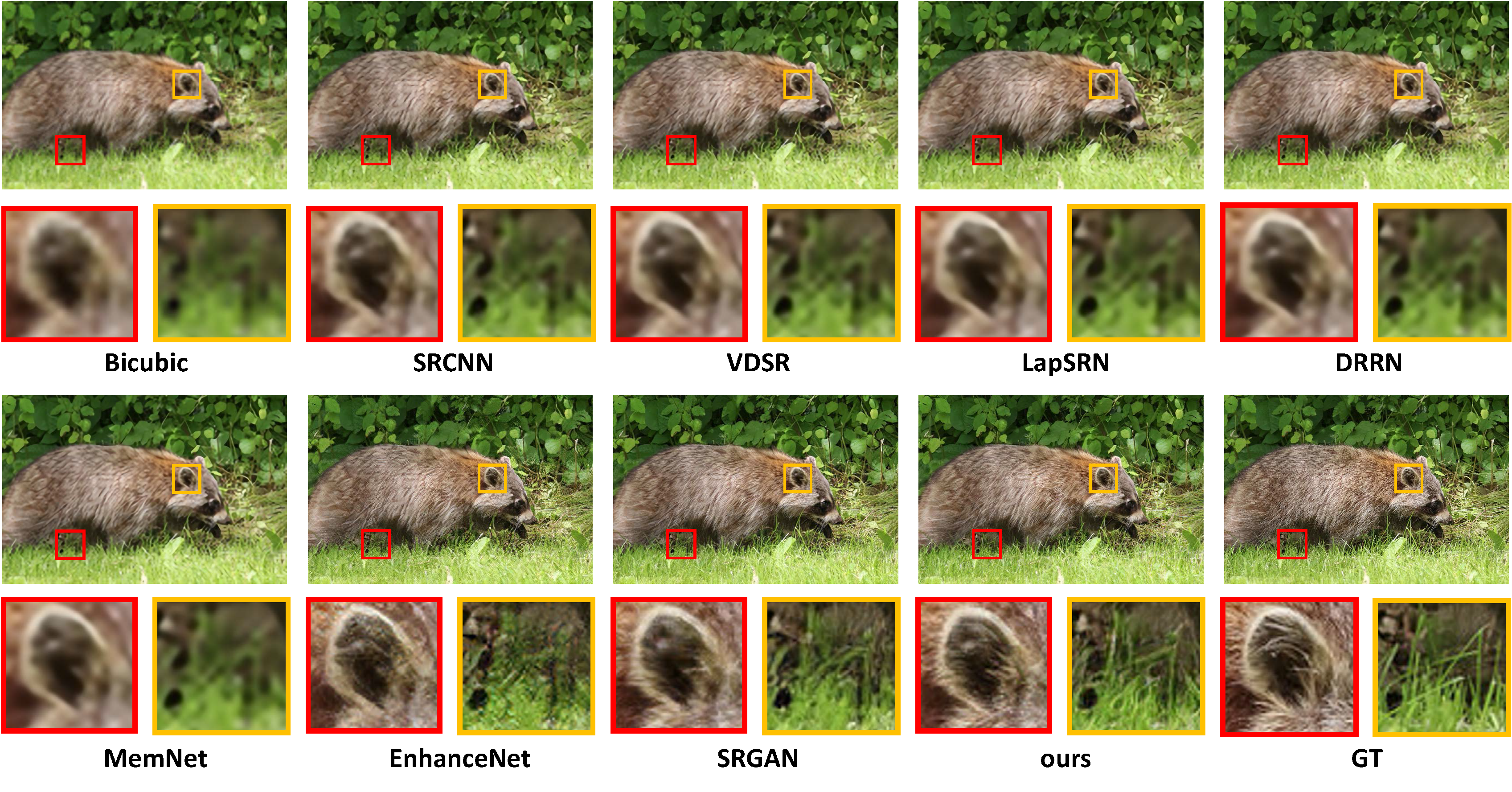

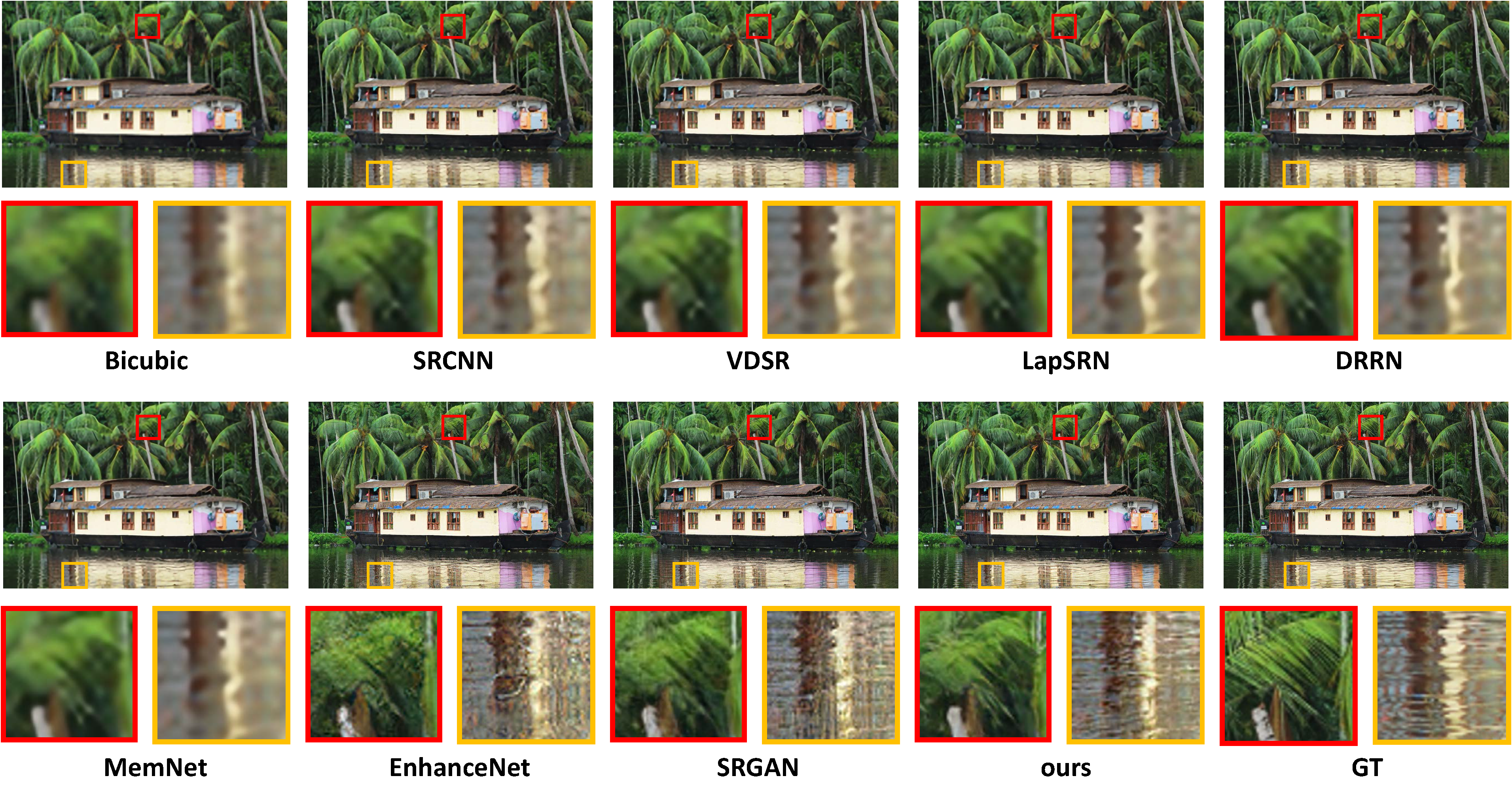

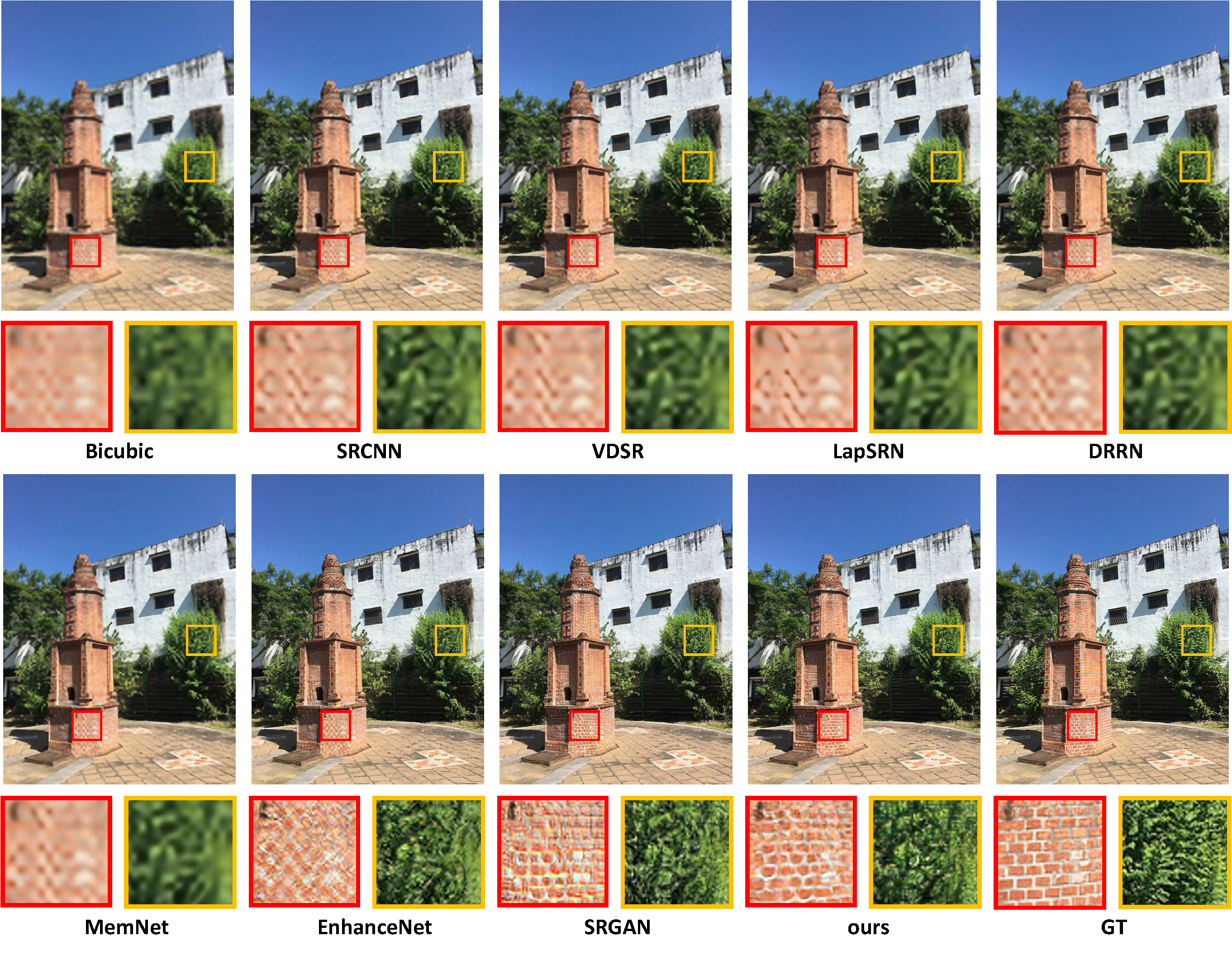

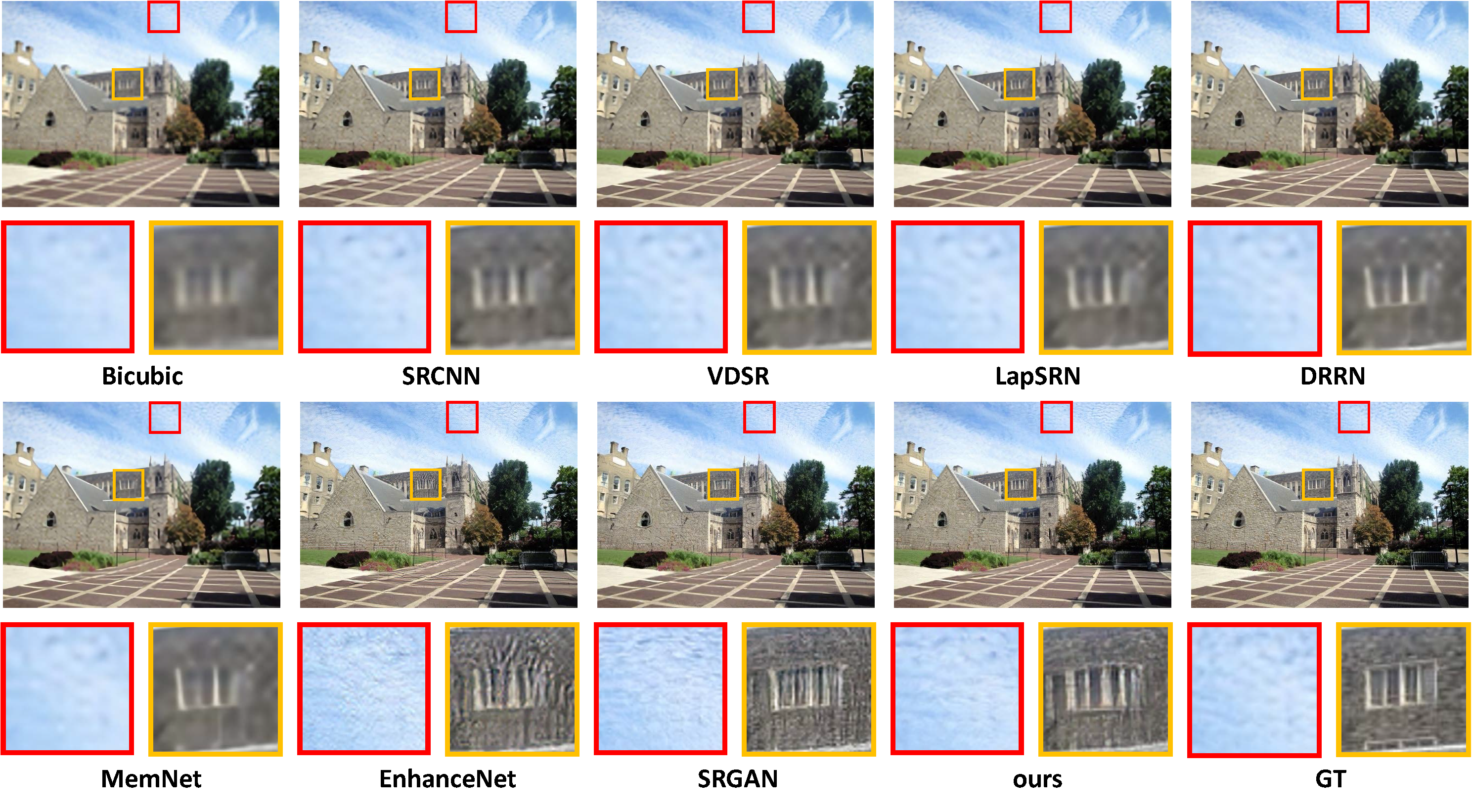

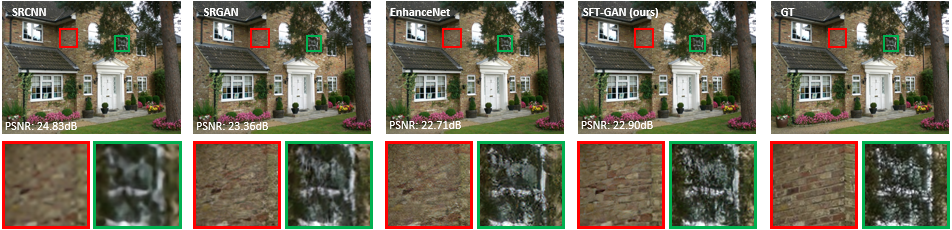

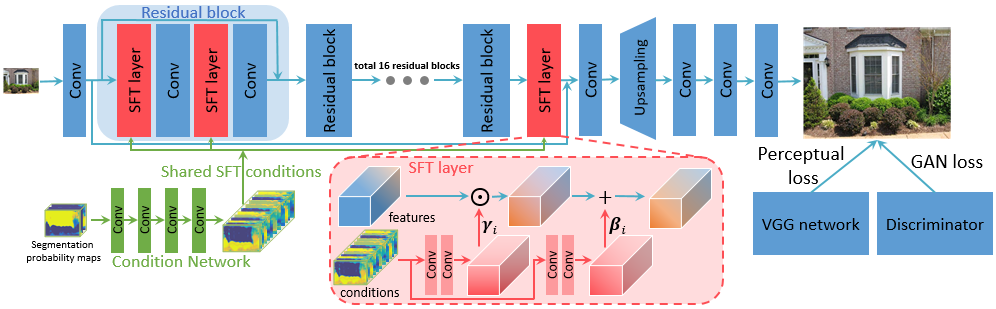

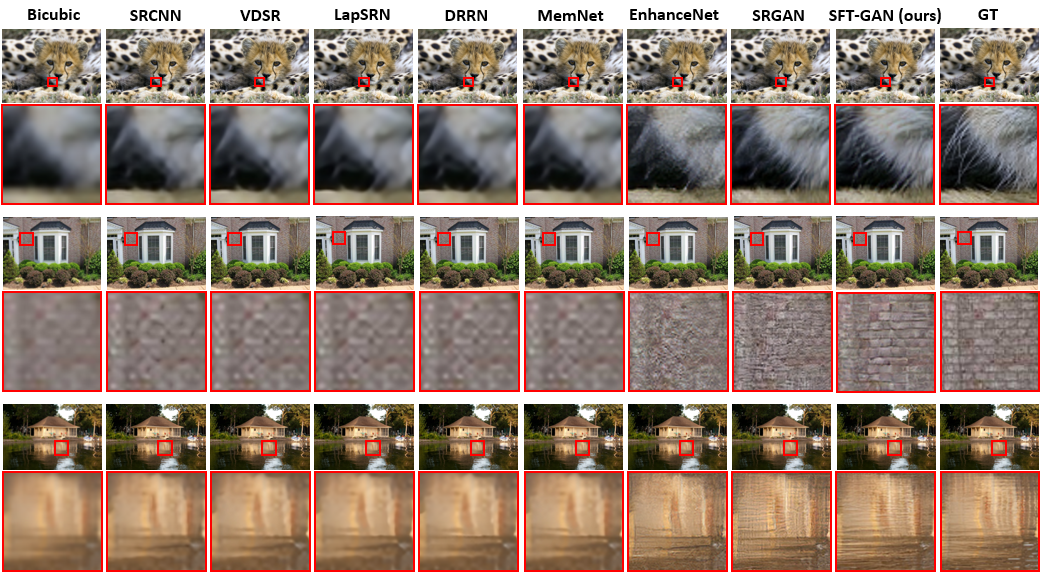

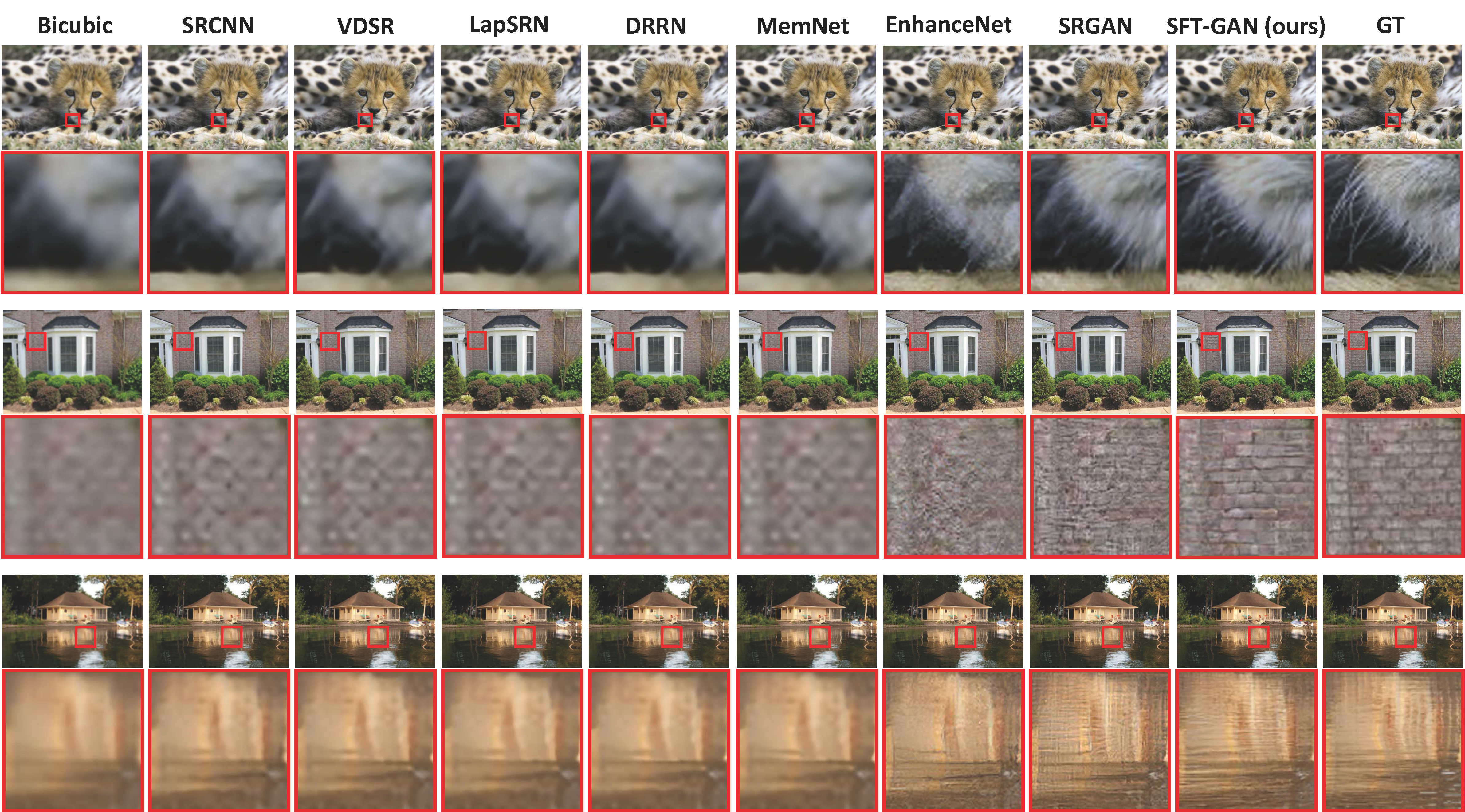

Although convolutional neural networks (CNNs) have recently demonstrated high-quality reconstruction for single-image super-resolution (SR), recovering natural and realistic texture remains a challenging problem. In this paper, we show that it is possible to recover textures faithful to semantic classes. In particular, we only need to modulate the features of a few intermediate layers in a single network, conditioned on semantic segmentation probability maps. This is made possible through a novel Spatial Feature Transform (SFT) layer that generates affine transformation parameters for spatial-wise feature modulation. SFT layers can be trained end-to-end together with the SR network using the same loss function. During testing, the network accepts an input image of arbitrary size and generates a high-resolution image with just a single forward pass conditioned on the categorical priors. Our final results show that an SR network equipped with SFT can generate more realistic and visually pleasing textures than the state-of-the-art SRGAN and EnhanceNet.
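To make the idea of spatial-wise affine modulation concrete, here is a minimal NumPy sketch, not the released implementation. It assumes the modulation takes the form `gamma * features + beta`, with `gamma` and `beta` predicted per pixel from the segmentation probability maps by 1x1 convolutions. The names `conv1x1`, `sft_layer`, and `params`, as well as the channel counts, are hypothetical and chosen only for illustration.

```python
import numpy as np


def conv1x1(x, weight, bias):
    """Pointwise (1x1) convolution. x: (C_in, H, W), weight: (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", weight, x) + bias[:, None, None]


def sft_layer(features, seg_probs, params):
    """Modulate features (C, H, W) with a per-pixel scale and shift.

    Assumption: gamma and beta are each produced by a single 1x1 convolution
    over the segmentation probability maps seg_probs (K, H, W).
    """
    gamma = conv1x1(seg_probs, params["w_gamma"], params["b_gamma"])  # (C, H, W)
    beta = conv1x1(seg_probs, params["w_beta"], params["b_beta"])     # (C, H, W)
    return gamma * features + beta  # element-wise affine transform


rng = np.random.default_rng(0)
C, K = 4, 3  # hypothetical feature channels and number of semantic classes
params = {
    "w_gamma": rng.standard_normal((C, K)),
    "b_gamma": np.ones(C),
    "w_beta": rng.standard_normal((C, K)),
    "b_beta": np.zeros(C),
}

# The same parameters apply to any spatial size, because every operation
# is either pointwise or element-wise.
for H, W in [(5, 7), (12, 9)]:
    features = rng.standard_normal((C, H, W))
    logits = rng.standard_normal((K, H, W))
    seg_probs = np.exp(logits) / np.exp(logits).sum(axis=0, keepdims=True)
    out = sft_layer(features, seg_probs, params)
    print(out.shape)
```

Because `gamma` and `beta` have the same spatial layout as the features, regions with different class probabilities receive different scales and shifts within one forward pass.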

Network Structure

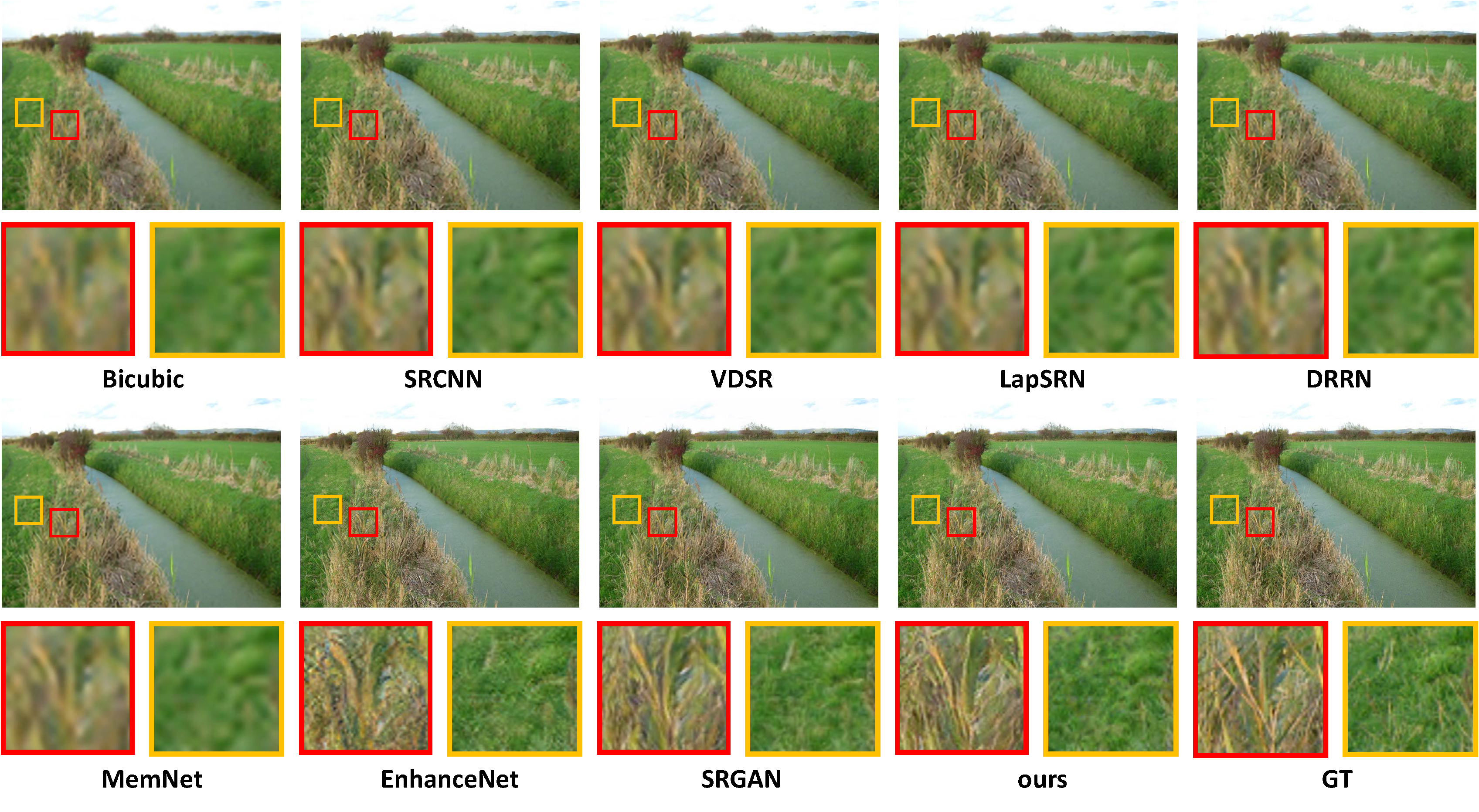

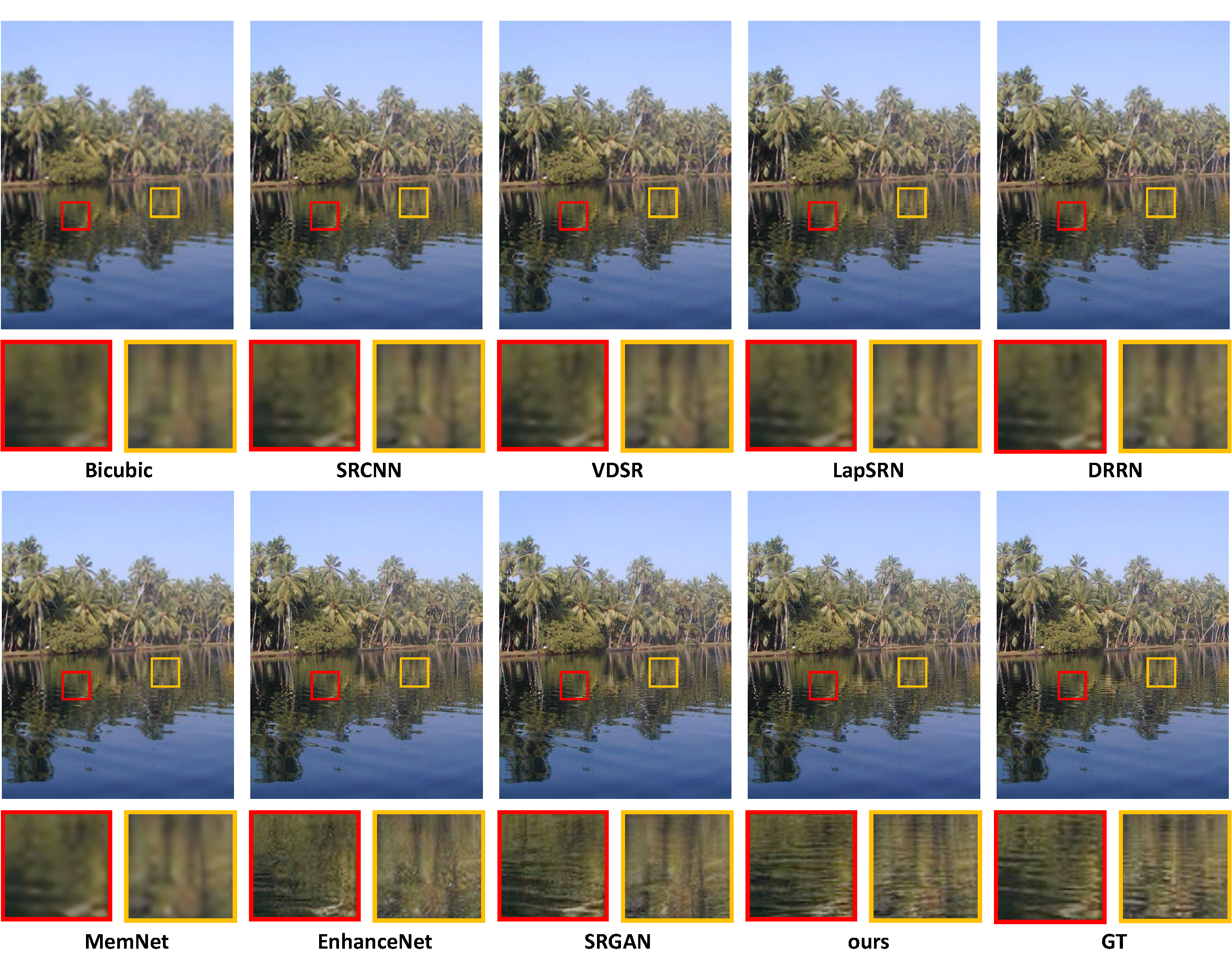

The next image shows more comparative examples.

Materials

Code and Datasets

Citation

@inproceedings{wang2018sftgan,
  author = {Xintao Wang and Ke Yu and Chao Dong and Chen Change Loy},
  title = {Recovering Realistic Texture in Image Super-resolution by Deep Spatial Feature Transform},
  booktitle = {IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
  year = {2018}
}

More results

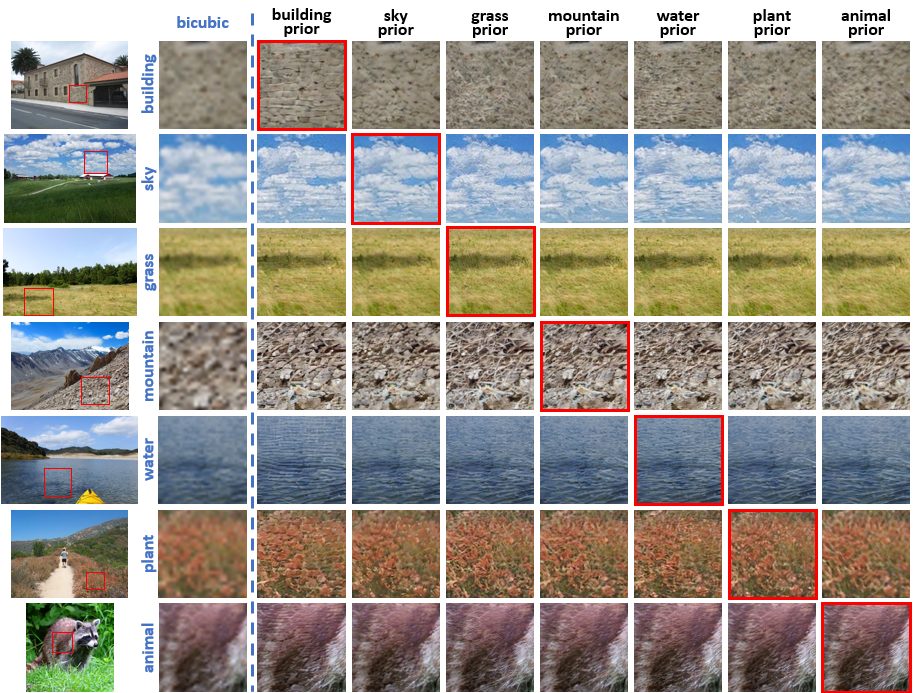

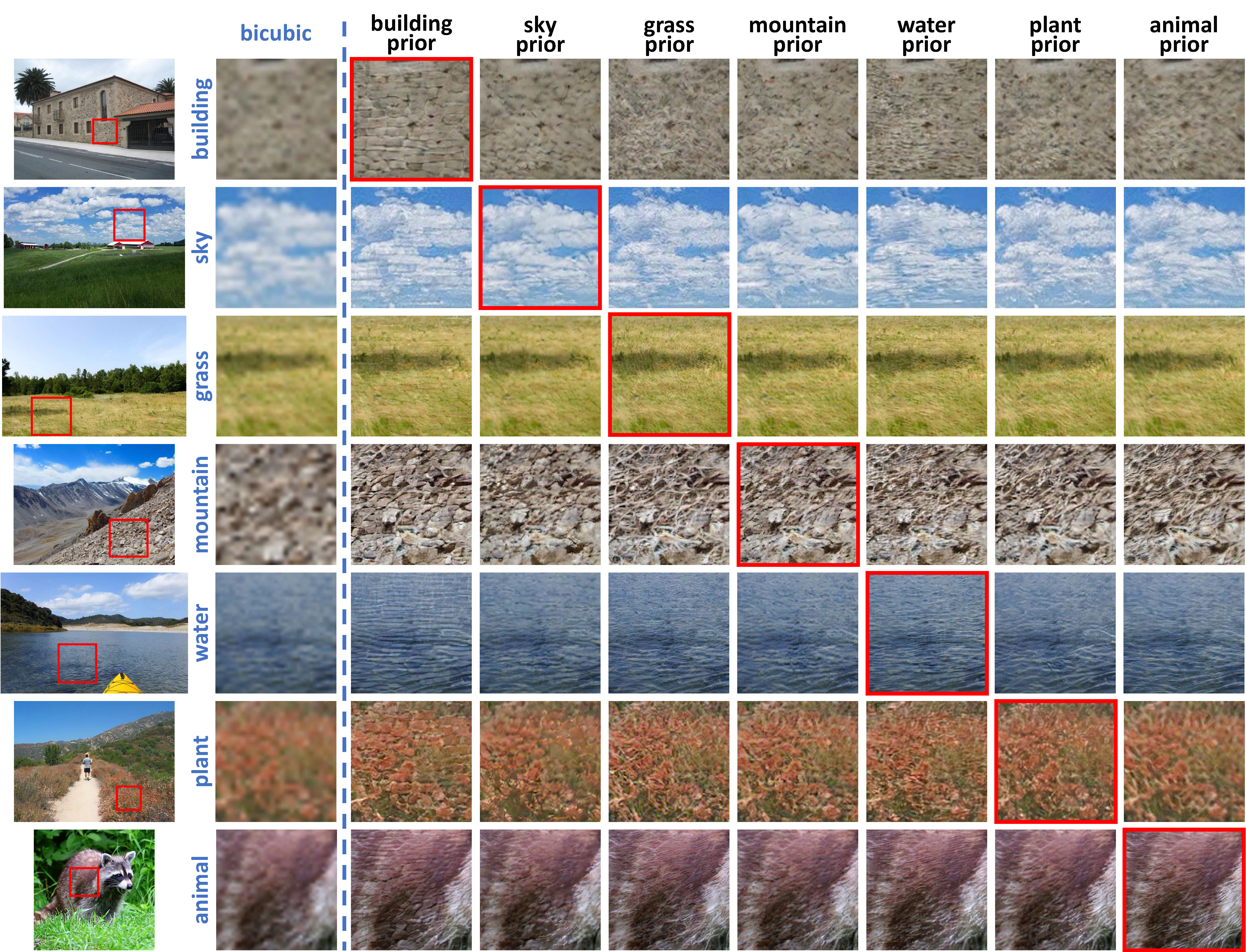

It is interesting to analyze the impact of different categorical priors by manually changing the probability maps to each category for a certain input.
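One way to set up such an experiment is to replace the predicted probability maps with a map that assigns all probability mass to a single category at every pixel. The sketch below only illustrates that construction; the helper name `force_category` and the class count are hypothetical, and feeding the maps to the SR network is left out.

```python
import numpy as np


def force_category(num_classes, height, width, category):
    """Return (num_classes, height, width) maps with all mass on one category."""
    probs = np.zeros((num_classes, height, width), dtype=np.float32)
    probs[category] = 1.0  # every pixel is assigned to the chosen category
    return probs


num_classes, height, width = 3, 4, 6  # illustrative sizes only
# One set of probability maps per category, all for the same input size.
prior_maps = [force_category(num_classes, height, width, k) for k in range(num_classes)]
```

Each entry of `prior_maps` can then be used as the conditioning input for the same low-resolution image, so that differences in the outputs are attributable to the categorical prior alone.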

We provide more visual comparisons with state-of-the-art methods on OST300.


Contact

If you have any questions, please contact Xintao Wang at wx016@ie.cuhk.edu.hk.