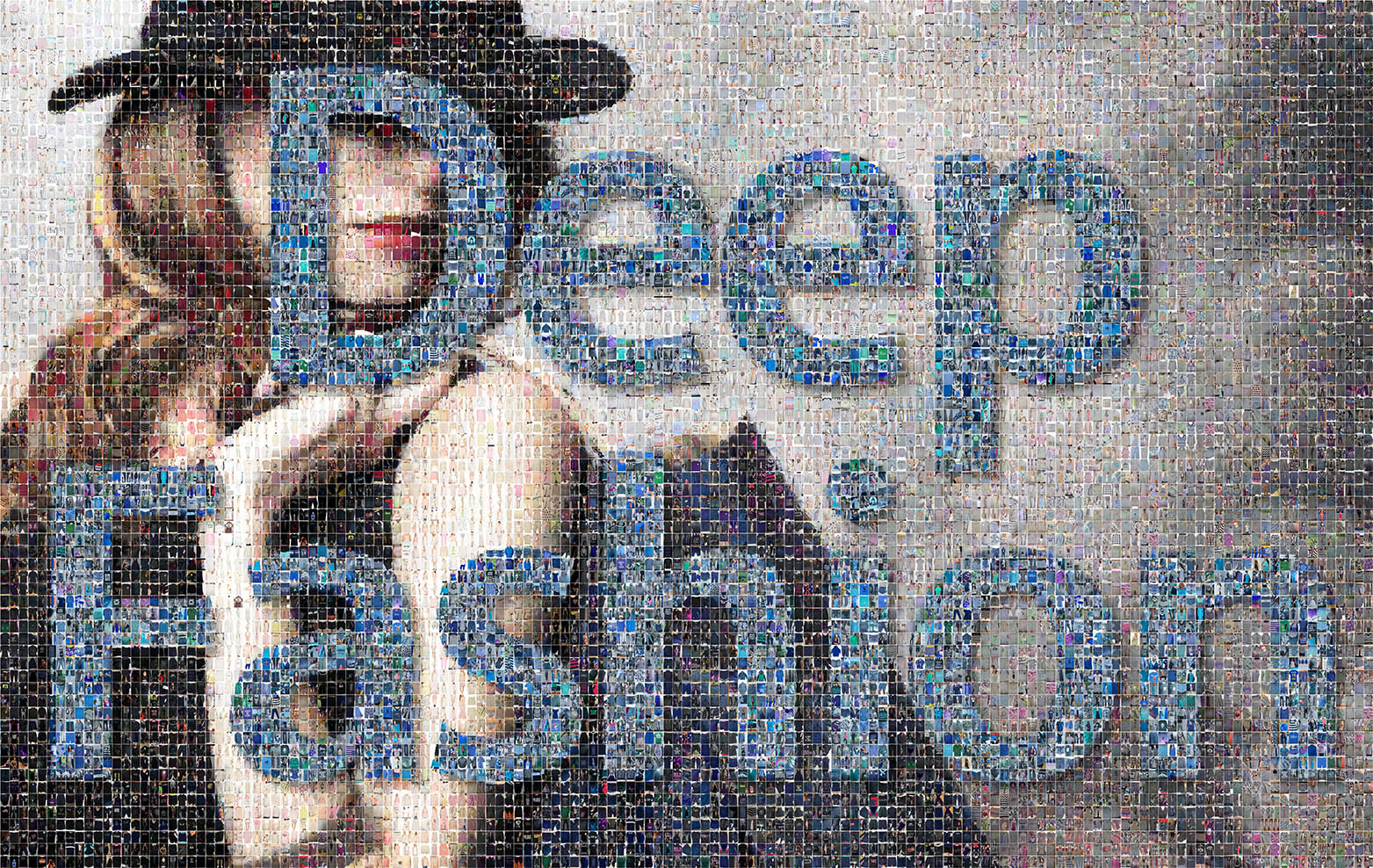

Large-scale Fashion (DeepFashion) Database

News

2022-06-01 We release the DeepFashion-MultiModal dataset with rich multi-modal annotations, including manually annotated human parsing labels, human keypoints, and fine-grained labels, as well as textual descriptions.

2020-05-04 Parsing mask annotations and dense pose annotations have been added to the “In-shop Clothes Retrieval Benchmark”. Fine-grained attribute annotations have been added to the “Category and Attribute Prediction Benchmark”.

2019-11-01 An open-source toolbox for visual fashion analysis, MMFashion, has been released.

2017-10-27 A new benchmark of Fashion Image Synthesis has been released.

2016-08-08 The “Category and Attribute Prediction Benchmark” has been released. You do *not* need a password to unzip the images. To access the other three benchmarks, please read the Download Instructions below.

2016-07-29 If Dropbox is not accessible, please download the dataset using Google Drive or Baidu Drive.

2016-07-27 The “Fashion Landmark Detection Benchmark” has been released.

2016-07-18 The “In-shop Clothes Retrieval Benchmark” and “Consumer-to-shop Clothes Retrieval Benchmark” have been released. Please read the Download Instructions. The other benchmarks will be released soon.

Descriptions

We contribute the DeepFashion database, a large-scale clothes database with several appealing properties:

-

First, DeepFashion contains over 800,000 diverse fashion images ranging from well-posed shop images to unconstrained consumer photos, constituting the largest visual fashion analysis database.

-

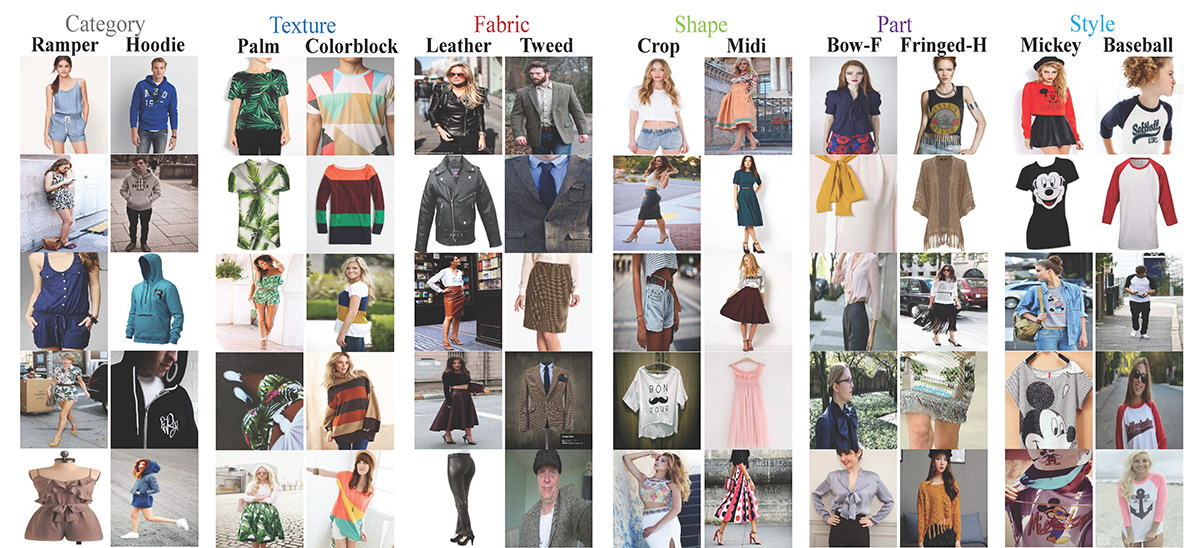

Second, DeepFashion is annotated with rich information of clothing items. Each image in this dataset is labeled with 50 categories, 1,000 descriptive attributes, bounding box and clothing landmarks.

-

Third, DeepFashion contains over 300,000 cross-pose/cross-domain image pairs.
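To work with the category labels described above programmatically, a small parser helps. The sketch below assumes the category annotations ship as a whitespace-separated text list, with an entry count on the first line, a column header on the second, and one `<image path> <category id>` row per image. This layout, the function name `parse_category_list`, and the 1-based id range are assumptions for illustration, not a documented format, so check them against the files you download.

```python
from typing import Iterable, List, Tuple

# From the description above: labels are drawn from 50 clothing categories.
NUM_CATEGORIES = 50


def parse_category_list(lines: Iterable[str]) -> List[Tuple[str, int]]:
    """Parse a category annotation list into (image_path, category_id) pairs.

    Assumed (hypothetical) layout, to be checked against the downloaded files:
      line 1: number of entries N
      line 2: column header, e.g. "image_name category_label"
      next N lines: "<relative image path> <1-based category id>"
    """
    it = iter(lines)
    count = int(next(it).strip())
    next(it)  # skip the column header line
    entries: List[Tuple[str, int]] = []
    for raw in it:
        line = raw.strip()
        if not line:
            continue  # tolerate blank trailing lines
        # Split on the last run of whitespace so the label is the final column.
        path, label_text = line.rsplit(None, 1)
        label = int(label_text)
        if not 1 <= label <= NUM_CATEGORIES:
            raise ValueError(f"category id {label} out of range for {path}")
        entries.append((path, label))
    if len(entries) != count:
        raise ValueError(f"header says {count} entries, found {len(entries)}")
    return entries


if __name__ == "__main__":
    # Hypothetical sample rows illustrating the assumed layout.
    sample = [
        "2",
        "image_name  category_label",
        "img/example_a/img_00000001.jpg   3",
        "img/example_b/img_00000002.jpg   17",
    ]
    for path, label in parse_category_list(sample):
        print(path, label)
```

Checking the entry count and the id range up front catches a misread file early, before the labels reach a training loop.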

Please read the Download Instructions below to access the dataset.

Benchmarks

For more details on the benchmarks, please refer to the paper "DeepFashion: Powering Robust Clothes Recognition and Retrieval with Rich Annotations" (CVPR 2016).

1. Category and Attribute Prediction Benchmark: [Download Page]


2. In-shop Clothes Retrieval Benchmark: [Download Page]


3. Consumer-to-shop Clothes Retrieval Benchmark: [Download Page]


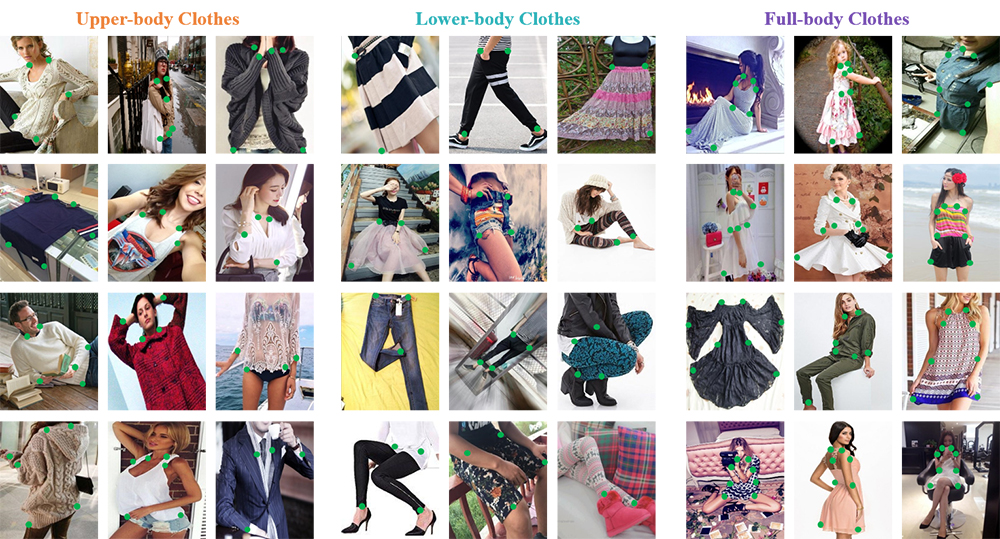

4. Fashion Landmark Detection Benchmark: [Download Page]


If the above links are not accessible, you can download the dataset using Google Drive or Baidu Drive.

Agreement

- The DeepFashion database is available for non-commercial research purposes only.

- All images in DeepFashion were obtained from the Internet and are not the property of MMLAB, The Chinese University of Hong Kong. MMLAB is not responsible for the content or the meaning of these images.

- You agree not to reproduce, duplicate, copy, sell, trade, resell or exploit for any commercial purposes, any portion of the images and any portion of derived data.

- You agree not to further copy, publish, or distribute any portion of DeepFashion, except that copies of the dataset may be made for internal use at a single site within the same organization.

- MMLAB reserves the right to terminate your access to DeepFashion at any time.

Download Instructions

- Some image data are encrypted to prevent unauthorized access. Please download the DeepFashion dataset Release Agreement.

- Read it carefully, complete and sign it appropriately. This is an example.

- Please send the completed form from your institutional email address to Ziwei Liu (zwliu.hust@gmail.com), with Ping Luo (pluo(at)ie.cuhk.edu.hk) in cc, using the subject line "DeepFashion Agreement". We will verify your request and send you the passwords to unzip the image data.
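Once the passwords arrive, the encrypted archives can be extracted with any standard ZIP tool. As a minimal sketch, the function below uses Python's built-in zipfile module (`ZipFile.setpassword` and `ZipFile.extractall`). It assumes the archives use the traditional ZIP encryption scheme that zipfile can decrypt, which is not confirmed here; the function name `extract_encrypted_zip` is hypothetical.

```python
import zipfile
from pathlib import Path
from typing import List


def extract_encrypted_zip(archive: Path, dest: Path, password: str) -> List[str]:
    """Extract a (possibly) password-protected ZIP archive into `dest`.

    Returns the archive's member names. Python's zipfile only decrypts the
    legacy ZipCrypto scheme; AES-encrypted members raise an error, in which
    case an external tool such as 7-Zip is needed instead.
    """
    dest.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(archive) as zf:
        # The password is used for encrypted members; unencrypted members
        # are extracted as-is.
        zf.setpassword(password.encode("utf-8"))
        zf.extractall(path=dest)
        return zf.namelist()
```

Usage, with hypothetical archive and folder names: `extract_encrypted_zip(Path("img.zip"), Path("DeepFashion"), "YOUR_PASSWORD")`. A wrong password surfaces as a `RuntimeError` from zipfile when the first encrypted member is read.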

Citation

@inproceedings{liuLQWTcvpr16DeepFashion,
  author = {Liu, Ziwei and Luo, Ping and Qiu, Shi and Wang, Xiaogang and Tang, Xiaoou},
  title = {DeepFashion: Powering Robust Clothes Recognition and Retrieval with Rich Annotations},
  booktitle = {Proceedings of IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
  month = {June},
  year = {2016}
}