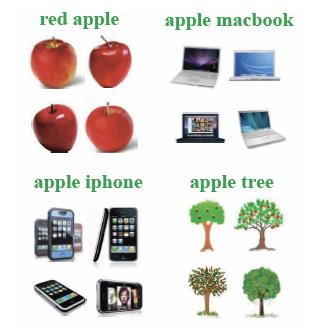

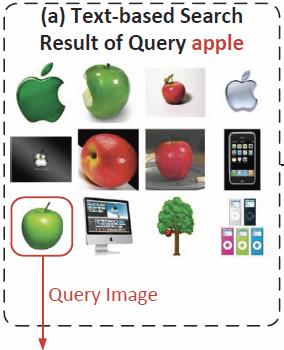

Web-scale image search engines mostly rely on surrounding text features. It is difficult for them to interpret users’ search intention from query keywords alone, which leads to ambiguous and noisy search results that are far from satisfactory. Image re-ranking, an effective way to improve the results of web-based image search, has been adopted by current commercial search engines such as Bing and Google. Given a query keyword, a pool of images is first retrieved based on textual information. The user is then asked to select a query image from the pool, and the remaining images are re-ranked based on their visual similarity to the query image. We have developed techniques that provide satisfactory re-ranking results with minimum user effort. Our methods explore two directions: better capturing user intention and better modeling the semantic structure of the re-ranking image pool. Both directions have yielded significant improvements in image re-ranking.

Web Image Search and Re-ranking

Web Image Re-ranking Using Query-Specific Semantic Signatures

We propose a novel image re-ranking framework that automatically learns, offline, a different semantic space for each query keyword. The visual features of images are projected into their related semantic spaces to obtain semantic signatures. At the online stage, images are re-ranked by comparing the semantic signatures obtained from the semantic space specified by the query keyword. The proposed query-specific semantic signatures significantly improve both the accuracy and the efficiency of image re-ranking: original visual features with thousands of dimensions can be projected to semantic signatures as short as 25 dimensions. Experimental results show a 25% to 40% relative improvement in re-ranking precision compared with state-of-the-art methods.
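The two stages described above can be illustrated with a minimal sketch. It is not the published implementation: the linear-plus-softmax projection, the L1 distance, and the names `semantic_signature`, `rerank`, `weights`, and `biases` are all assumptions made for illustration. The only details taken from the text are that features of about a thousand dimensions are mapped to a 25-dimensional signature learned per query keyword, and that online re-ranking compares signatures.

```python
import math
import random


def semantic_signature(feature, weights, biases):
    """Map one visual feature vector (length d) to a k-dimensional signature.

    weights (k rows of length d) and biases (length k) stand in for a model
    learned offline for ONE query keyword. The linear + softmax form is an
    assumption, not the method from the paper.
    """
    logits = [sum(w * x for w, x in zip(row, feature)) + b
              for row, b in zip(weights, biases)]
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def l1_distance(a, b):
    """Sum of absolute differences between two signatures."""
    return sum(abs(x - y) for x, y in zip(a, b))


def rerank(query_index, signatures):
    """Return pool indices ordered by signature distance to the clicked image."""
    query = signatures[query_index]
    return sorted(range(len(signatures)),
                  key=lambda i: l1_distance(signatures[i], query))


# Toy data: 6 images with 1000-dimensional features, 25-dimensional signatures.
rng = random.Random(0)
d, k, n = 1000, 25, 6
weights = [[rng.gauss(0, 0.01) for _ in range(d)] for _ in range(k)]
biases = [0.0] * k
pool = [[rng.random() for _ in range(d)] for _ in range(n)]

# Offline: compute and store one short signature per image.
signatures = [semantic_signature(f, weights, biases) for f in pool]

# Online: the user clicks image 2; only 25-dimensional vectors are compared.
order = rerank(2, signatures)
print(order)
```

In this sketch the efficiency gain comes from the online step touching only the short signatures, while the expensive projection is done once offline.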

X. Wang, S. Qiu, K. Liu, and X. Tang, "Web Image Re-ranking Using Query-Specific Semantic Signatures," accepted to IEEE Transactions on Pattern Analysis and Machine Intelligence (PAMI).

PDF Project Page

X. Wang, K. Liu, and X. Tang, "Query-Specific Visual Semantic Spaces for Web Image Re-ranking," in Proceedings of IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR) 2011.

PDF

IntentSearch: Capturing User Intention for One-Click Internet Image Search

Web-scale image search engines (e.g., Google image search, Bing image search) mostly rely on surrounding text features. It is difficult for them to interpret users’ search intention from query keywords alone, which leads to ambiguous and noisy search results that are far from satisfactory. Visual information is needed to resolve the ambiguity in text-based image retrieval. We propose a novel Internet image search approach that requires only one click from the user on a query image; the images in a pool retrieved by text-based search are then re-ranked based on both visual and textual content.
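As a rough illustration of one-click re-ranking on both cues, the sketch below scores each pool image against the clicked image using a weighted sum of a visual similarity and a textual similarity. The text above does not say how the two cues are fused, so the weighted sum, the cosine and Jaccard measures, the `alpha` parameter, and the toy data are all assumptions rather than the IntentSearch method itself.

```python
import math


def cosine(a, b):
    """Cosine similarity between two visual feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def jaccard(a, b):
    """Overlap between two sets of surrounding-text words."""
    a, b = set(a), set(b)
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def rerank_one_click(clicked, pool, alpha=0.7):
    """Rank the other pool images by similarity to the clicked image.

    alpha weights visual against textual similarity; it is a hypothetical
    parameter chosen for this sketch.
    """
    query = pool[clicked]

    def score(i):
        image = pool[i]
        return (alpha * cosine(image["visual"], query["visual"])
                + (1 - alpha) * jaccard(image["words"], query["words"]))

    others = [i for i in range(len(pool)) if i != clicked]
    return sorted(others, key=score, reverse=True)


# Toy pool for the ambiguous keyword "apple" (made-up features and words).
pool = [
    {"visual": [0.9, 0.1, 0.0], "words": ["apple", "fruit", "red"]},
    {"visual": [0.8, 0.2, 0.1], "words": ["apple", "fruit", "green"]},
    {"visual": [0.0, 0.1, 0.9], "words": ["apple", "iphone", "phone"]},
    {"visual": [0.1, 0.0, 0.8], "words": ["apple", "laptop"]},
]

# The user clicks image 0 (a fruit photo); the other fruit photo should rise.
ranking = rerank_one_click(0, pool)
print(ranking)
```

With the user clicking the fruit photo, the other fruit image is ranked ahead of the phone and laptop images, which is the kind of disambiguation a single click is meant to provide.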

X. Tang, K. Liu, J. Cui, F. Wen, and X. Wang, "IntentSearch: Capturing User Intention for One-Click Internet Image Search," IEEE Transactions on Pattern Analysis and Machine Intelligence (PAMI), Vol. 34, pp. 1342-1353, 2012.

PDF