Introduction

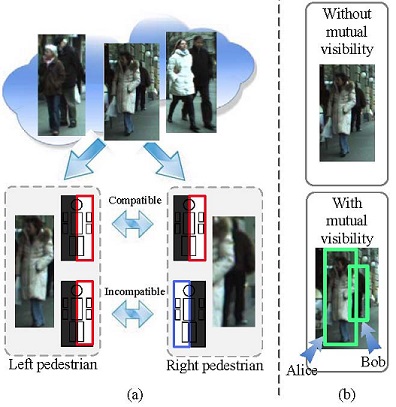

Overlapping pedestrians are difficult to detect. However, we observe that such pedestrians carry useful information in their mutual visibility relationship. When pedestrians overlap in the image, their parts exhibit two types of mutual visibility relationships:

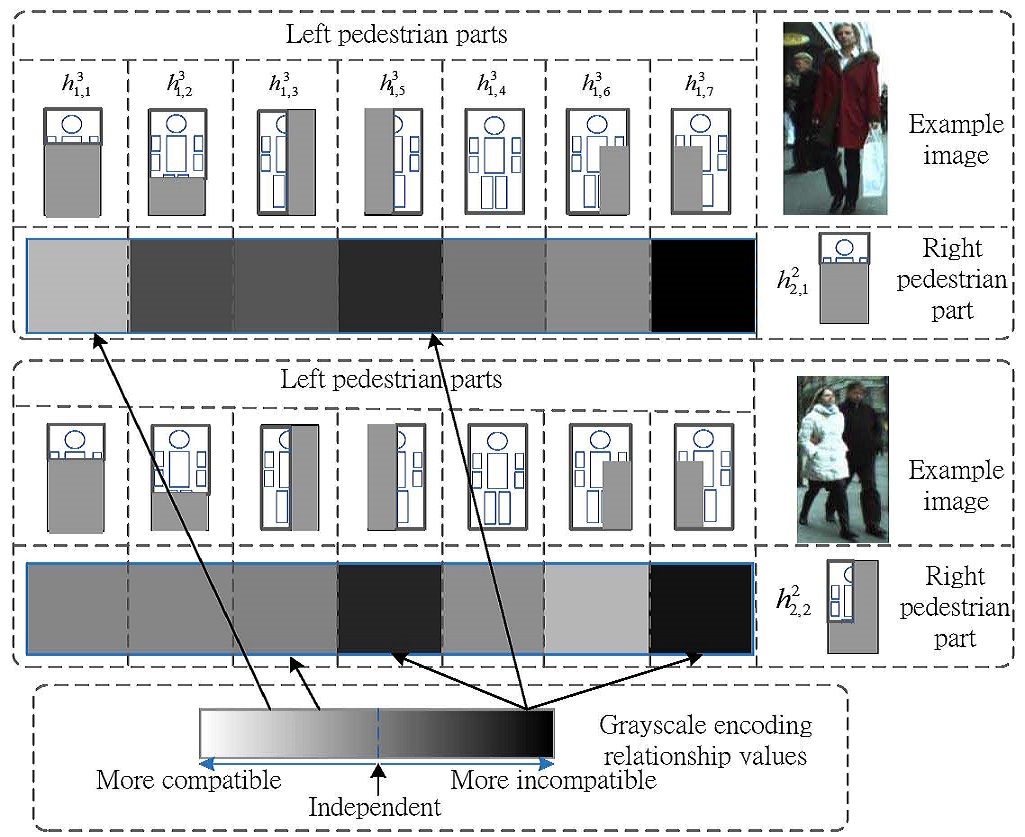

- Compatible relationship. The observation of one part is a positive indication of the other part. In the figure above, each pedestrian has two parts: a left-half part and a right-half part. Given the prior knowledge that two pedestrians co-exist side by side, the right-half part of the left pedestrian is compatible with the right-half part of the right pedestrian, because these two parts often co-exist in positive training examples. The compatible relationship can be used to increase the visibility confidence of mutually compatible pedestrian parts. Take figure (b) above as an example: if a pedestrian detector detects both Alice on the left and Bob on the right with a high false positive rate, then the visibility confidence of Alice's right-half part increases when Bob's right-half part is found to be visible, and Alice's detection confidence increases correspondingly. In this example, the compatible relationship helps to detect Alice.

- Incompatible relationship. The occlusion of one part indicates the visibility of the other part, and vice versa. In the example in the figure above, Alice and Bob overlap so strongly that one occludes the other. In this case, Alice's right-half part and Bob's left-half part are incompatible because they cannot both be visible at the same time. If a pedestrian detector detects both Alice and Bob with a high false positive rate, then the visibility confidence of Alice's right-half part increases when Bob's left-half part is found to be invisible, and Alice's detection confidence increases correspondingly. Therefore, the incompatible relationship helps to detect Alice in this example.
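To make the direction of both effects concrete, here is a toy pairwise sketch. It is a hypothetical simplification, not the model from the paper: it assumes the visibility confidence of Alice's right-half part is a logistic function of a bias plus a pairwise weight times the visibility of one of Bob's parts, with a positive weight for a compatible pair and a negative weight for an incompatible pair. The function `visibility_confidence` and all weights are invented for illustration.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def visibility_confidence(bias: float, weight: float, other_visible: float) -> float:
    """Hypothetical pairwise update: confidence that a part is visible,
    given the other part's visibility in [0, 1].

    weight > 0 models a compatible pair, weight < 0 an incompatible pair.
    """
    return sigmoid(bias + weight * other_visible)


BIAS = 0.0             # assumed prior evidence for Alice's right-half part
W_COMPATIBLE = 2.0     # assumed weight to Bob's right-half part
W_INCOMPATIBLE = -2.0  # assumed weight to Bob's left-half part

# Compatible pair: Bob's right-half part visible raises Alice's right-half confidence.
compat_seen = visibility_confidence(BIAS, W_COMPATIBLE, 1.0)
compat_unseen = visibility_confidence(BIAS, W_COMPATIBLE, 0.0)

# Incompatible pair: Bob's left-half part invisible raises Alice's right-half confidence.
incompat_invisible = visibility_confidence(BIAS, W_INCOMPATIBLE, 0.0)
incompat_visible = visibility_confidence(BIAS, W_INCOMPATIBLE, 1.0)

print(f"compatible:   Bob part visible {compat_seen:.3f} vs not {compat_unseen:.3f}")
print(f"incompatible: Bob part invisible {incompat_invisible:.3f} vs visible {incompat_visible:.3f}")
```

The sign of the pairwise weight is the only thing that distinguishes the two relationships in this sketch; the same logistic form is reused for both.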

Contribution Highlights

The main contribution is to jointly estimate the visibility statuses of multiple pedestrians and recognize co-existing pedestrians via a mutual visibility deep model. Overlapping parts of co-existing pedestrians are placed at multiple layers in this deep model. With this deep model:

- overlapping parts at different layers verify each other's visibility multiple times;

- the complex probabilistic connections across layers are modeled efficiently for both learning and inference.
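The layered verification can be illustrated with a small feed-forward pass in which every layer mixes the parts of both pedestrians, so each part's visibility is re-checked against the other pedestrian's parts at every layer. This is a minimal sketch under assumptions: the update form h[j] = sigmoid(sum_i W[j][i] h_prev[i] + c[j] + g[j] s[j]), the four-unit layout, and all weights and scores are hypothetical and are not taken from the paper.

```python
import math
from typing import List, Sequence, Tuple

Vector = List[float]
# (weights, bias, score_gain, part_scores) for one hypothetical layer
Layer = Tuple[List[Vector], Vector, Vector, Vector]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def layer_forward(h_prev: Vector, layer: Layer) -> Vector:
    """Assumed update: h[j] = sigmoid(sum_i W[j][i] * h_prev[i] + c[j] + g[j] * s[j])."""
    weights, bias, gain, scores = layer
    return [
        sigmoid(sum(w * h for w, h in zip(row, h_prev)) + bias[j] + gain[j] * scores[j])
        for j, row in enumerate(weights)
    ]


def mutual_visibility_forward(first_scores: Vector, layers: Sequence[Layer]) -> List[Vector]:
    """Propagate the part visibilities of BOTH pedestrians through all layers."""
    h = [sigmoid(s) for s in first_scores]
    history = [h]
    for layer in layers:
        h = layer_forward(h, layer)
        history.append(h)
    return history


# Units: [Alice-left, Alice-right, Bob-left, Bob-right]; all numbers are made up.
W = [
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, -1.5, 1.5],  # Alice-right: incompatible with Bob-left, compatible with Bob-right
    [0.0, -1.5, 1.0, 0.0],  # Bob-left: incompatible with Alice-right
    [0.0, 1.5, 0.0, 1.0],   # Bob-right: compatible with Alice-right
]
scores = [2.0, -0.5, -2.0, 2.0]  # weak evidence for Alice-right; Bob-left looks occluded
layer = (W, [0.0] * 4, [1.0] * 4, scores)
history = mutual_visibility_forward(scores, [layer, layer])
for depth, h in enumerate(history):
    print(depth, [round(v, 3) for v in h])
```

In this toy run, Alice's weakly scored right-half part gains visibility at each layer because Bob's right-half part looks visible and Bob's left-half part looks occluded, while Bob's left-half part is pushed further toward invisible.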

Citation

If you use our code or dataset, please cite the following paper:

- W. Ouyang, X. Zeng and X. Wang. Modeling Mutual Visibility Relationship with a Deep Model in Pedestrian Detection. In CVPR, 2013.

Images

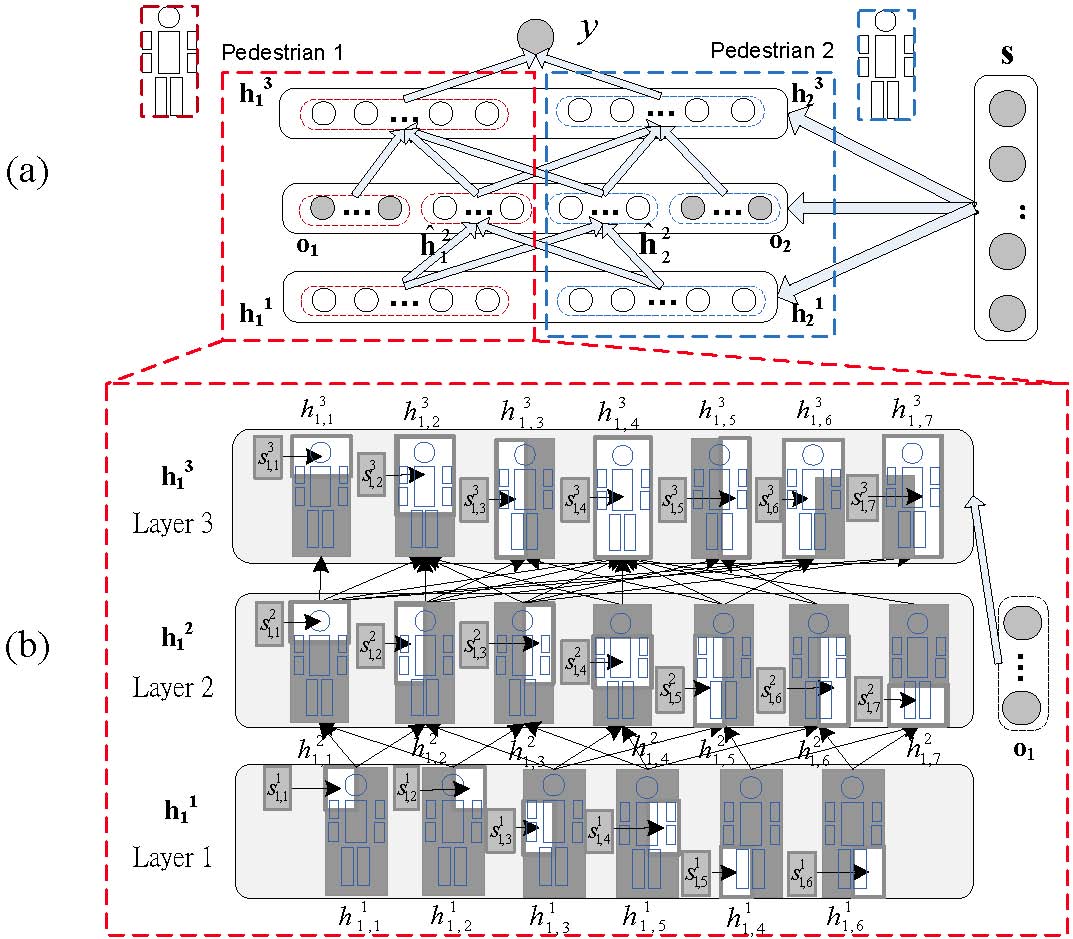

(a) The mutual visibility deep model used for inference and for fine-tuning parameters;

(b) the detailed connections and part model for pedestrian 1.

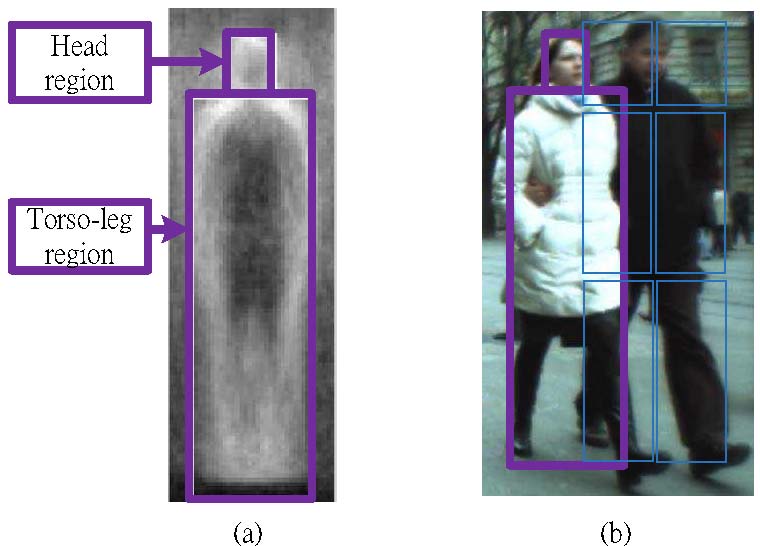

(a) Two rectangular regions used for approximating the pedestrian region

(b) an example in which the left-head-shoulder, left-torso and left-leg parts overlap with the pedestrian region of the left person.
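The overlap test in the figure above can be sketched as measuring how much of each part box falls inside a pedestrian region approximated by the union of two rectangles. This is a hypothetical illustration: the box coordinates, the choice of a head-shoulder rectangle above a body rectangle, the assumption that the listed parts belong to the right person, and the threshold `OVERLAP_THRESHOLD` are all assumed rather than taken from the paper.

```python
from typing import Dict, Optional, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) with x2 > x1 and y2 > y1


def area(b: Box) -> float:
    return max(0.0, b[2] - b[0]) * max(0.0, b[3] - b[1])


def intersect(a: Box, b: Box) -> Optional[Box]:
    """Intersection box of a and b, or None if they do not overlap."""
    x1, y1 = max(a[0], b[0]), max(a[1], b[1])
    x2, y2 = min(a[2], b[2]), min(a[3], b[3])
    if x2 <= x1 or y2 <= y1:
        return None
    return (x1, y1, x2, y2)


def inter_area(a: Box, b: Optional[Box]) -> float:
    if b is None:
        return 0.0
    box = intersect(a, b)
    return area(box) if box is not None else 0.0


def covered_fraction(part: Box, r1: Box, r2: Box) -> float:
    """Fraction of the part box inside the union of r1 and r2 (inclusion-exclusion)."""
    covered = inter_area(part, r1) + inter_area(part, r2) - inter_area(part, intersect(r1, r2))
    return covered / area(part)


# Hypothetical region of the left person: head-shoulder rectangle above a body rectangle.
UPPER: Box = (10, 0, 30, 20)
LOWER: Box = (0, 20, 40, 100)
OVERLAP_THRESHOLD = 0.3  # assumed value, not from the paper

# Hypothetical part boxes of the right person.
parts: Dict[str, Box] = {
    "left-head-shoulder": (25, 0, 40, 20),
    "left-torso": (30, 25, 50, 55),
    "left-leg": (30, 60, 45, 100),
    "right-leg": (50, 60, 70, 100),
}
overlapping = [name for name, box in parts.items()
               if covered_fraction(box, UPPER, LOWER) > OVERLAP_THRESHOLD]
print(overlapping)
```

Using two rectangles instead of one bounding box keeps the empty space beside the head out of the region, so a neighbour's head-shoulder part is only counted where it actually enters the head-shoulder rectangle.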

Examples of correlation between different parts learned from the deep model

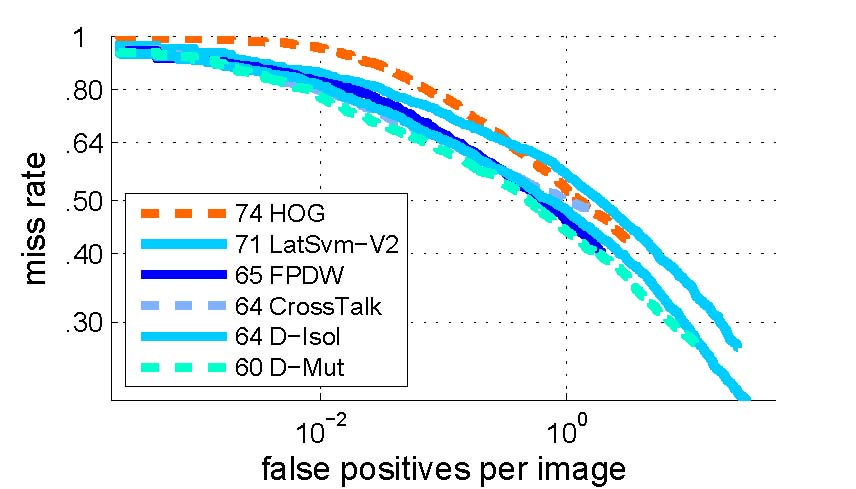

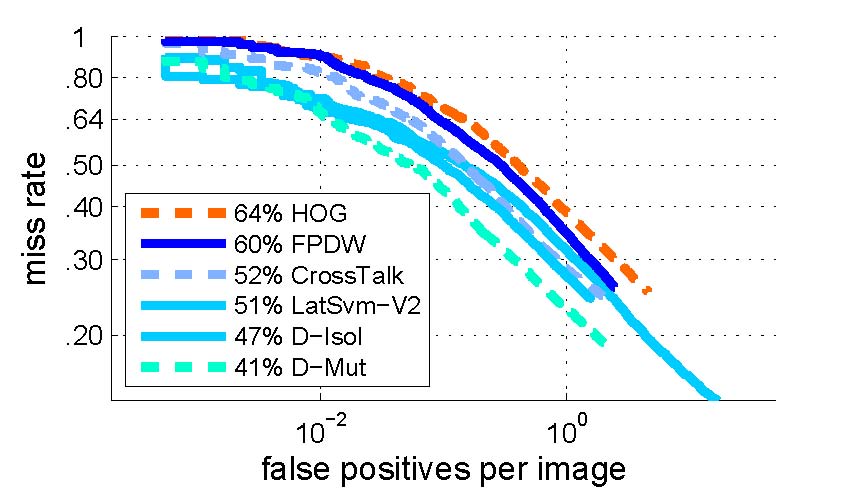

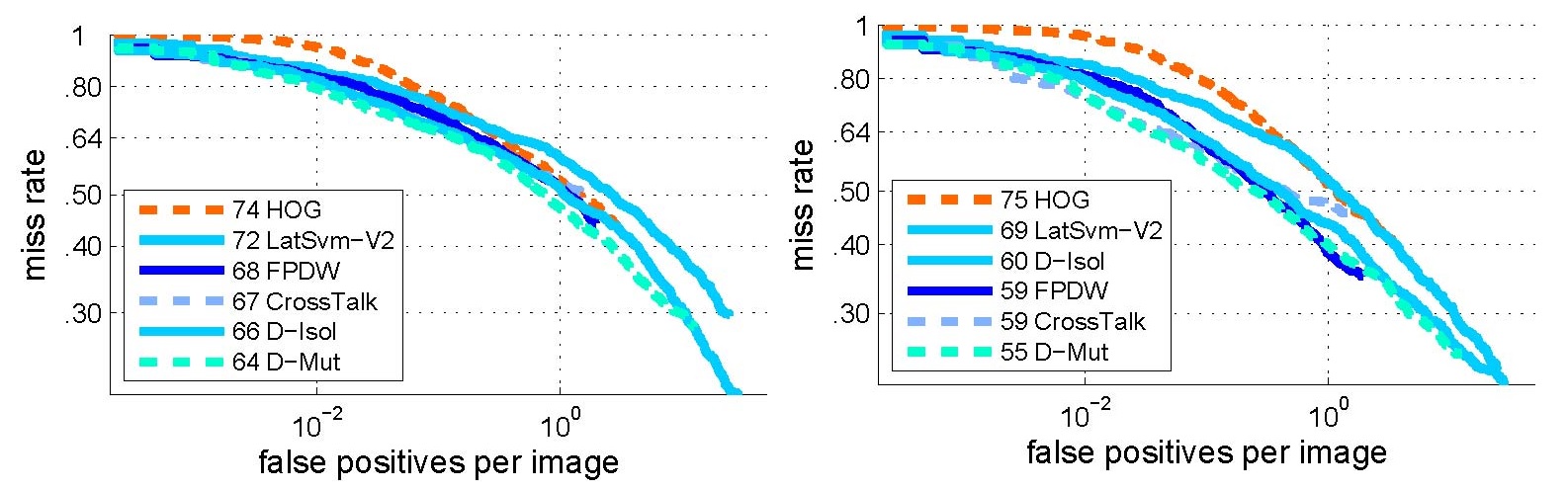

Experimental results on the Caltech-Train dataset for HOG, LatSVM-V2, FPDW, D-Isol and our mutual visibility approach, i.e. D-Mut

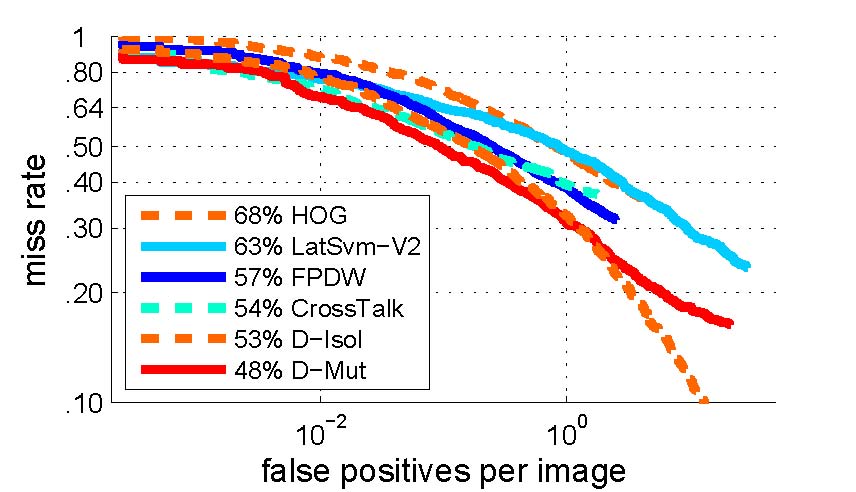

Experimental results on the ETH dataset for HOG, LatSVM-V2, FPDW, D-Isol and our mutual visibility approach, i.e. D-Mut

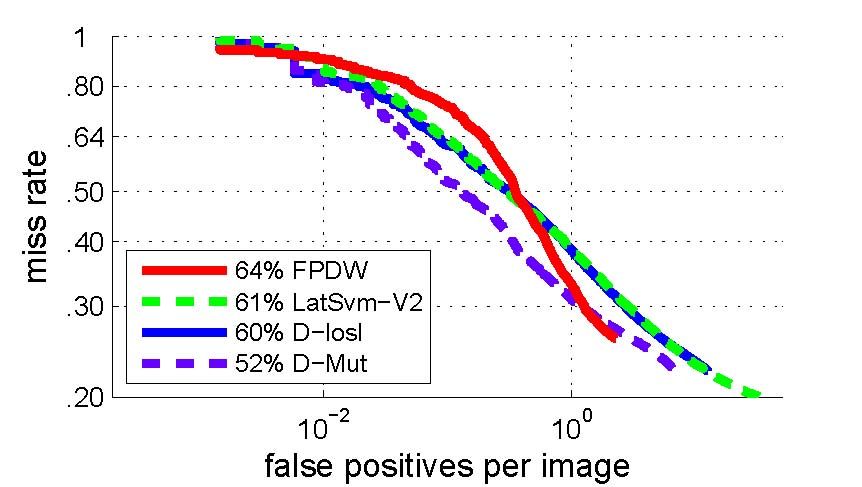

Experimental results on the Caltech-Test dataset for HOG, LatSVM-V2, FPDW, D-Isol and our mutual visibility approach, i.e. D-Mut

Experimental results on the PETS2009 dataset for LatSVM-V2, FPDW, D-Isol and our mutual visibility approach, i.e. D-Mut

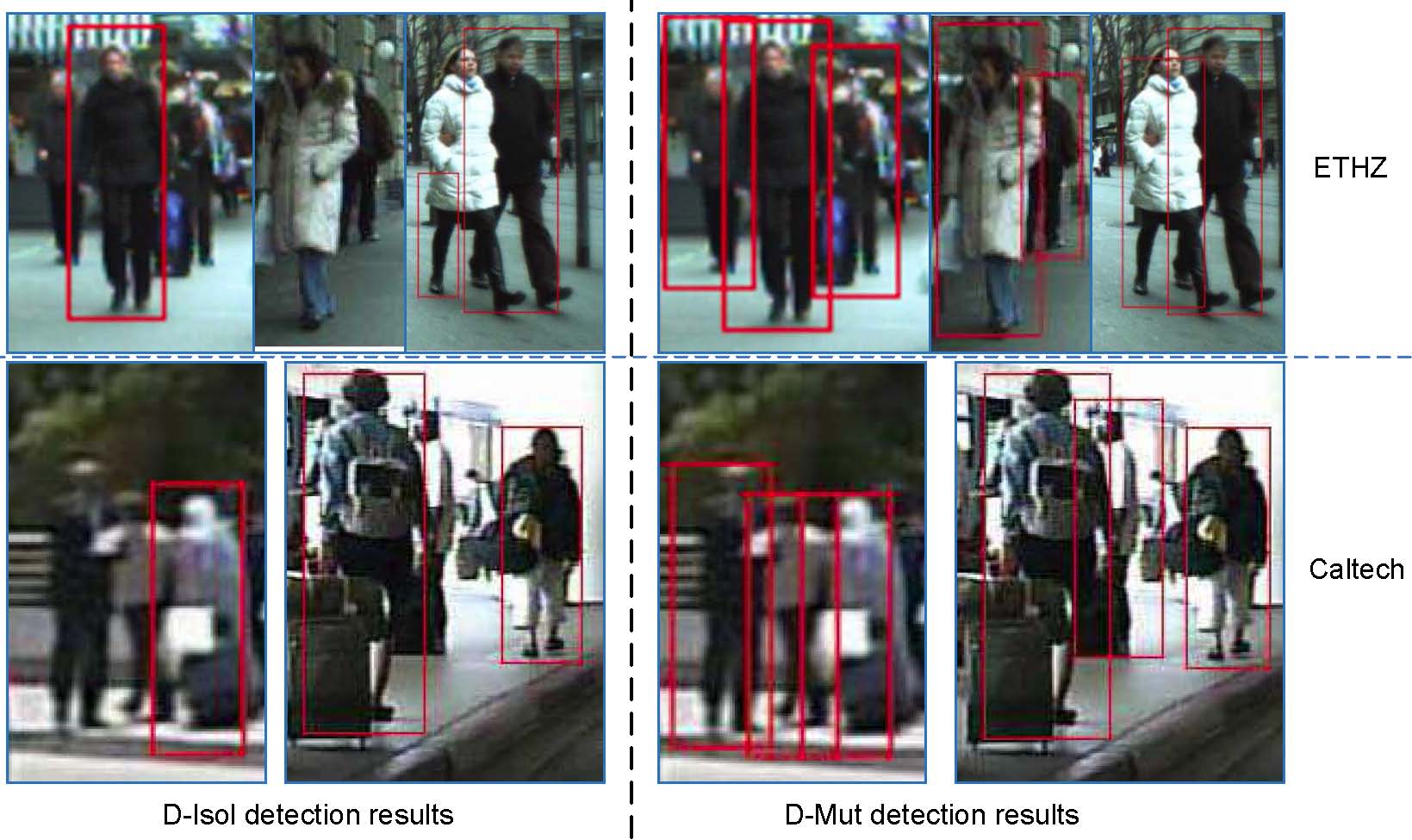

Comparison of detection results of D-Isol and D-Mut on the Caltech-Train dataset and the ETH dataset. All results are obtained at 1 false positive per image (FPPI).
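For readers reproducing such numbers, the sketch below reads the miss rate off at a fixed FPPI by sweeping the score threshold from high to low. It is a simplified illustration, not the official evaluation code: it assumes detections have already been matched to ground truth (each pedestrian matched at most once), ignores score ties, and takes a step lookup rather than the curve sampling and interpolation that benchmark toolkits may use. The function name `miss_rate_at_fppi` and the example data are hypothetical.

```python
from typing import List, Tuple


def miss_rate_at_fppi(detections: List[Tuple[float, bool]], num_ground_truth: int,
                      num_images: int, target_fppi: float = 1.0) -> float:
    """Miss rate at the lowest score threshold whose FPPI does not exceed target_fppi.

    detections: (score, is_true_positive) pairs, already matched to ground truth.
    """
    if num_ground_truth <= 0 or num_images <= 0:
        raise ValueError("num_ground_truth and num_images must be positive")
    ordered = sorted(detections, key=lambda d: d[0], reverse=True)
    tp = fp = 0
    best_miss = 1.0  # with no detections accepted, every pedestrian is missed
    for _score, is_tp in ordered:  # lower the threshold one detection at a time
        if is_tp:
            tp += 1
        else:
            fp += 1
        if fp / num_images > target_fppi:
            break  # this threshold exceeds the FPPI budget; keep the previous one
        best_miss = 1.0 - tp / num_ground_truth
    return best_miss


dets = [(0.9, True), (0.8, False), (0.7, True), (0.6, False), (0.5, False), (0.4, True)]
mr = miss_rate_at_fppi(dets, num_ground_truth=4, num_images=2)
print(f"miss rate at 1 FPPI: {mr:.2f}")  # → miss rate at 1 FPPI: 0.50
```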

Experimental results on detecting isolated pedestrians (left) and overlapped pedestrians (right) on the Caltech-Train dataset.